Tech Topic | April 2022 Hearing Review

By Christopher Slugocki, PhD, and Petri Korhonen, MSc

This study uses the electroencephalogram (EEG) and synchronous “alpha” activity over the parietal lobe of the brain to assess listening effort. The researchers find that the intelligibility advantages offered by Signia Augmented Focus hearing aids lead to less effortful listening across a range of SNRs typical of difficult listening environments.

“Success is dependent on effort”

– Sophocles

Hearing science has come to appreciate the relevance of “effort” as a factor for determining communication success in difficult listening conditions. Most of us accept that our day-to-day lives will involve settings (eg, classrooms, busy restaurants, public transit) or situations (eg, washing dishes, driving a car) where understanding speech requires an elevated degree of effort. Though the precise nature of this mental exertion is still debated, the consensus reached in Pichora-Fuller et al1 defines “listening effort” as the allocation of cognitive resources to overcome obstacles or challenges to achieving listening-oriented goals. However, ask most hearing aid wearers how it feels to follow speech in a noisy environment, and they might give you a simpler description: It’s hard!

For those with a hearing loss, minimizing effort can help them keep from tuning out of conversations, disengaging socially, or abandoning their hearing aids. More importantly, limiting the effort needed to engage socially can help listeners avoid unnecessary stress2 and fatigue,3 both of which, over time, may lead to negative health outcomes.4,5 Ensuring that listening does not feel too effortful is therefore critical to listeners’ communication success.

One way that hearing aid manufacturers try to make listening less effortful is by developing features that improve the signal-to-noise ratio (SNR) for speech. The efficacy of these features, such as directional microphones and noise reduction (NR) algorithms, is then verified using speech-in-noise tests that often quantify the minimum SNRs required to achieve certain levels of intelligibility. While useful, this approach assumes that listeners will exert as much effort as needed to accomplish a task, and no more, so long as the task is not too difficult and optimal task performance is considered a worthwhile goal.6 Hence, if a new hearing aid feature improves wearers’ speech reception thresholds, then we assume this feature also reduces wearers’ listening effort.

The problem with this reasoning is that it equates task performance (or task difficulty) with the effort a listener actually experiences. Consider two new hearing aid features that produce similarly good levels of intelligibility (eg, 80%) at an SNR below the expected performance plateau (eg, 5 dB). Now, imagine the first of these features works by better isolating/processing the speech signal, whereas the second works by aggressively filtering the background noise. If the aggressive noise filter incidentally distorts the speech signal, then it would be reasonable to expect listeners to expend more effort to make sense of, or “fill in,” these distorted acoustic details7 than they would with the feature that provides a cleaner speech signal. This is because “filling in” distorted or missing information demands cognitive resources that could instead be used for other tasks, such as processing what was said and/or formulating a clever or interesting response. In this example, if speech intelligibility were our only outcome measure, we would miss an otherwise meaningful difference in efficacy between the two features.

As such, hearing researchers have used different behavioral and physiological methods to quantify listening effort as separate from task performance (for a review, see Francis & Love8). Behavioral methods often involve subjective self-reports or measures of performance on secondary tasks like memory tasks or dual-task paradigms. Some newer speech-in-noise tests, like the Repeat-Recall Test (RRT),9 even integrate the collection of self-reported listening effort to help clinicians better understand the difficulties of their patients and the effects of different hearing aid technologies. Although self-reports have great clinical value, in that they are easy to collect and directly reflect a listener’s conscious awareness of effort, they may be biased by listeners’ internal states and retrospective expectations about how they should have performed under the test conditions.

Memory and dual-task measures get closer to measuring actual exerted effort by loading capacity-limited cognitive systems, such as working memory, which are also thought to be involved in speech perception.10 These measures assume that listeners are always optimizing performance for the primary speech task but cannot prevent listeners from reorienting their cognitive resources between primary and secondary tasks during testing.

Physiological measures of listening effort are also numerous and include electrical skin conductance, heart rate and heart rate variability, blood pressure, and pupil dilation. These measures are all related to the autonomic nervous system and how it adjusts the function of different organs based on states of rest and action (effort).8 As such, most of these are peripheral or secondary to the actual neural processes responsible for processing speech.

One might then argue that measuring effort as it relates to the application of cognitive resources would be best done by recording activity from the brain itself. Fortunately, ongoing brain waves (voltage oscillations) reflecting the activity of masses of neurons can be quite easily captured by the electroencephalogram (EEG). These brain waves are typically grouped into “bands” based on their rates of oscillation, where greater energy in a band suggests greater synchrony of neural activity at that range of rates.
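To make the link between synchrony and band energy concrete, here is a toy sketch. It assumes, purely for illustration, that each of N neural populations produces a unit-amplitude 10 Hz (alpha-band) oscillation and that an electrode records their plain sum; real EEG generation involves volume conduction, unequal amplitudes, and noise, none of which is modeled. When the phases are aligned, the oscillations add up and power grows with N²; when the phases are spread evenly around the cycle, they cancel.

```python
import math


def summed_power(phases, freq_hz=10.0, fs=250.0, duration_s=1.0):
    """Mean-square amplitude of the sum of unit-amplitude oscillators.

    Each phase stands in (very loosely) for one neural population oscillating
    at freq_hz; the "electrode" is modeled as recording their simple sum.
    """
    n_samples = int(fs * duration_s)
    total = 0.0
    for i in range(n_samples):
        t = i / fs
        sample = sum(math.cos(2 * math.pi * freq_hz * t + p) for p in phases)
        total += sample * sample
    return total / n_samples


N = 20
aligned = [0.0] * N                               # all populations in phase
spread = [2 * math.pi * k / N for k in range(N)]  # phases evenly spread out

print(summed_power(aligned))  # about N**2 / 2 = 200
print(summed_power(spread))   # about 0: the oscillations cancel
```

Every population oscillates just as strongly in both cases; only their timing differs. That is why greater power in a band is read as greater synchrony rather than as more active neurons.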

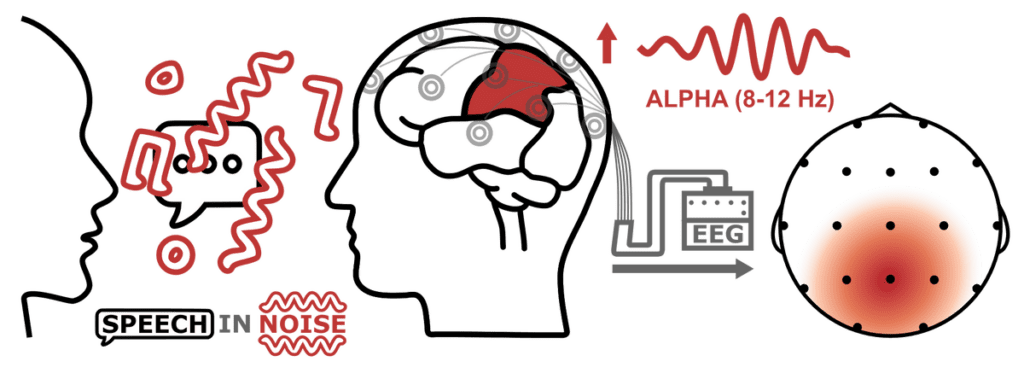

Brain waves in the alpha band (8–12 Hz), in particular those measured over posterior regions of the head (ie, parietal/temporal/occipital lobes), have recently attracted interest as possible neural signatures of listening effort. Specifically, increased alpha power (synchronization) over parietal regions has been correlated with the difficulty of speech tasks and with pupil dilation when listening to speech in noise at different SNRs.11 These findings have led researchers to suggest that synchronization in the alpha band reflects the action of inhibitory mechanisms that suppress task-irrelevant stimuli, such as visual stimuli or competing noise.12-14 In this way, measuring alpha power may offer an objective, real-time estimate of listening effort during speech-in-noise tasks (Figure 1) and allow us to examine the relative efficacies of different hearing aid technologies for reducing listening effort.

Figure 1. During challenging listening conditions, such as trying to understand speech in noise (left), the brain may exhibit a temporary increase in synchronous “alpha” activity over the parietal lobe (red lobe, middle). This alpha activity is thought to partially inhibit the processing of non-auditory (ie, visual) or otherwise competing sensory information. We can quantify changes in alpha activity by measuring power in the electroencephalogram (EEG) between 8–12 Hz. Greater alpha power over parietal electrodes (right) is interpreted as greater listening effort.

Recently, the Signia Augmented Xperience (AX) platform introduced a new and innovative signal processing paradigm called Augmented Focus (AF).15 AF is enabled by Signia’s beamformer, which splits the incoming sound into two separate signal streams. Thus, AF enables the hearing aid to process sounds coming from the front and from the back separately, with different gains, time constants, and NR settings. This split processing increases the contrast between sounds arriving from the front versus the back and makes speech easier to understand in noise compared with conventional (non-AF) adaptive directional microphones.16
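The effect of giving the two streams different gains can be illustrated with a deliberately idealized sketch. It assumes, hypothetically, that the beamformer has already routed front speech into one stream and rear noise into the other with perfect separation, and it models only the gain stage. The per-stream time constants and NR settings that AF also applies are not represented, nothing here reflects Signia’s actual implementation, and the function name `split_process` is made up for this example.

```python
import math


def rms_db(samples):
    """Root-mean-square level of a signal in dB (re: 1.0)."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20 * math.log10(rms)


def split_process(front, back, front_gain_db, back_gain_db):
    """Hypothetical gain stage for two already-separated streams.

    Applies a separate gain to each stream, remixes them for output, and
    reports the front-versus-back level contrast after processing.
    """
    g_front = 10 ** (front_gain_db / 20)  # dB -> linear amplitude factor
    g_back = 10 ** (back_gain_db / 20)
    front_out = [g_front * x for x in front]
    back_out = [g_back * x for x in back]
    mixed = [f + b for f, b in zip(front_out, back_out)]  # signal sent to the receiver
    contrast_db = rms_db(front_out) - rms_db(back_out)
    return mixed, contrast_db


# Toy input: equal-level "speech" (front) and "noise" (back) tones,
# so the input contrast is 0 dB.
fs = 1000
front = [math.sin(2 * math.pi * 5 * i / fs) for i in range(fs)]
back = [math.sin(2 * math.pi * 7 * i / fs) for i in range(fs)]
print(rms_db(front) - rms_db(back))  # input contrast, about 0 dB

mixed, contrast = split_process(front, back, front_gain_db=0.0, back_gain_db=-6.0)
print(round(contrast, 2))  # 6.0
```

Under the perfect-separation assumption, the front-versus-back contrast simply grows by the difference between the two gains (0 dB minus -6 dB, or 6 dB). Real streams overlap, so this toy number should not be read as an expected benefit.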

Previously, we have shown that AF technology also increases the level of background noise that listeners are willing to accept while listening to speech17 and helps listeners’ brains make and evaluate predictions about a single talker in a cocktail party scenario.18

In the present study, we sought to extend these findings by measuring alpha activity in aided listeners with a hearing loss to evaluate whether AF technology reduces the effort required to understand speech in background noise. Based on our previous work, we predicted alpha power would be lowest (ie, least effort) when listeners were tested in the AF condition, followed by the non-AF directional condition, and highest (ie, most effort) in a non-AF condition using omnidirectional microphone processing.

Study Methods

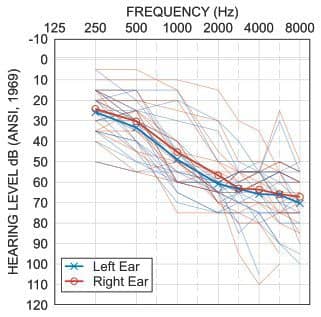

Participants. A total of 18 native English speakers (mean age = 71 years, SD = 13, range = 38–85 years, 11 female) with a symmetrical sensorineural hearing loss (Figure 2) and normal cognitive function participated in this study. All but three participants were current hearing aid wearers.

Hearing Aids. We compared a full-feature version of the Signia AX with a version that did not have AF. Hearing aids were programmed using the latest version of Signia Connexx fitting software. Participants were fit according to the NAL-NL2 formula at 100% prescription gain. The AF hearing aid was programmed and tested with the default universal program. The non-AF hearing aid was programmed and tested in a standard omnidirectional microphone mode (non-AF-omn) and in an automatic adaptive directional microphone mode (non-AF-dirm). Features other than noise reduction and feedback cancellation were disabled.

Procedure. Alpha activity was measured while aided listeners performed a modified version of the RRT.9 On each trial of the RRT, listeners heard six sentences that were either all declarative and semantically meaningful (high context) or semantically meaningless but still syntactically valid (low context). Sentences were constructed at a fourth-grade reading level. Listeners were instructed to repeat each sentence just as they had heard it, to the best of their abilities. Sentence materials were presented at 65 dB SPL from a loudspeaker located directly in front (0° azimuth).

Simultaneous continuous noise was presented from two loudspeakers located behind the listener at 135° and 225° at one of three SNRs: -5, 0, or 5 dB. The SNR remained fixed at one level for each trial of six sentences. In addition, ongoing two-talker babble noise was presented from four loudspeakers (45°, 135°, 225°, 315°) at 45 dB SPL. Speech-in-noise performance was scored based on correct repetition of 20 target words distributed among the six trial sentences (3-4 words per sentence). Performance was scored separately for each combination of sentence context (high and low), SNR (-5, 0, and 5 dB), and hearing aid condition (non-AF-omn, non-AF-dirm, AF).
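The test design above can be laid out as a small sketch. It enumerates the 3 × 2 × 3 = 18 scoring cells (hearing aid condition × sentence context × SNR), converts each SNR to the noise level it implies for 65 dB SPL speech, and scores a trial as the percentage of its 20 target words repeated correctly. Two assumptions are ours. First, the SNR is taken relative to the combined level of the two rear loudspeakers, because the article does not say whether the level was set per loudspeaker or overall. Second, the fixed 45 dB SPL babble is ignored. The number of trials per cell is not stated, so the sketch scores single trials.

```python
from itertools import product

SPEECH_LEVEL_DB_SPL = 65      # target sentences from 0 deg azimuth
SNRS_DB = (-5, 0, 5)
CONTEXTS = ("high", "low")
HA_CONDITIONS = ("non-AF-omn", "non-AF-dirm", "AF")
TARGET_WORDS_PER_TRIAL = 20   # 3-4 target words x 6 sentences


def noise_level_db_spl(snr_db):
    """Noise level implied by the fixed speech level (SNR = speech - noise, in dB)."""
    return SPEECH_LEVEL_DB_SPL - snr_db


def percent_correct(words_correct):
    """Score one six-sentence trial as a percentage of its 20 target words."""
    if not 0 <= words_correct <= TARGET_WORDS_PER_TRIAL:
        raise ValueError("words_correct must be between 0 and 20")
    return 100.0 * words_correct / TARGET_WORDS_PER_TRIAL


cells = list(product(HA_CONDITIONS, CONTEXTS, SNRS_DB))
print(len(cells))  # 18
for snr in SNRS_DB:
    print(f"SNR {snr:+d} dB -> noise {noise_level_db_spl(snr)} dB SPL")
```

Because speech is held at 65 dB SPL, the -5, 0, and 5 dB SNRs correspond to noise at 70, 65, and 60 dB SPL under this assumption.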

Listener EEG was recorded during the RRT using 19 Ag/AgCl sintered electrodes positioned according to the 10-20 system.19 An electrode on the high forehead served as ground, and bilateral earlobe electrodes served as reference. Alpha activity was quantified by power in the EEG spectrum between 8–12 Hz.
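A minimal, dependency-free sketch of the band-power computation follows. The article does not describe its spectral method, epoch length, sampling rate, or any normalization, so the plain DFT periodogram, the 250 Hz sampling rate, and the one-sided scaling below are all assumptions; a production pipeline would more likely use an FFT with windowed, averaged segments (eg, Welch’s method). With this scaling, the band power equals the share of the signal’s variance that falls between 8 and 12 Hz.

```python
import cmath
import math


def band_power(signal, fs, low_hz=8.0, high_hz=12.0):
    """Variance of `signal` falling in [low_hz, high_hz], via a one-sided periodogram.

    Uses a plain O(n^2) DFT over only the bins inside the band to stay
    dependency-free; an FFT-based Welch estimate would be the usual choice.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    total = 0.0
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if not low_hz <= freq <= high_hz:
            continue
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        # Double every bin except DC and Nyquist to fold in negative frequencies.
        fold = 1 if k == 0 or 2 * k == n else 2
        total += fold * abs(coeff) ** 2 / n ** 2
    return total


fs = 250       # assumed sampling rate, Hz
n = 2 * fs     # 2 s of data
alpha_wave = [2.0 * math.sin(2 * math.pi * 10 * t / fs) for t in range(n)]
beta_wave = [2.0 * math.sin(2 * math.pi * 20 * t / fs) for t in range(n)]
print(band_power(alpha_wave, fs))  # about 2.0 (= 2**2 / 2)
print(band_power(beta_wave, fs))   # about 0.0
```

For a 10 Hz sine of amplitude 2 sampled for exactly 2 s, all of its variance (2²/2 = 2) lands in the alpha band, whereas a 20 Hz sine contributes essentially nothing there.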

Results

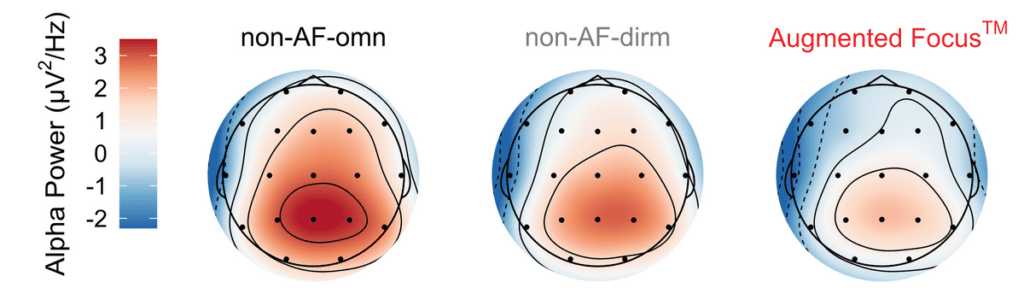

Figure 3 shows the average scalp topography of listeners’ alpha activity across all SNRs and sentence contexts for each of the three hearing aid conditions. Average alpha power measured over parietal (mid-to-back of head) electrodes, shown by the red shading, was highest when listeners were tested in the non-AF-omn condition and lowest when tested in the AF condition. Alpha activity measured in the non-AF-dirm condition was intermediate.

Figure 3. Average scalp topography of alpha activity measured in three different hearing aid conditions. Greater alpha activity is represented by deeper red shading. Black dots represent the placement of 19 electrodes used to record listener EEG. Topography outside of the head represents activity of electrodes below the midline. Contour lines are plotted for positive (solid) and negative (dashed) alpha power.

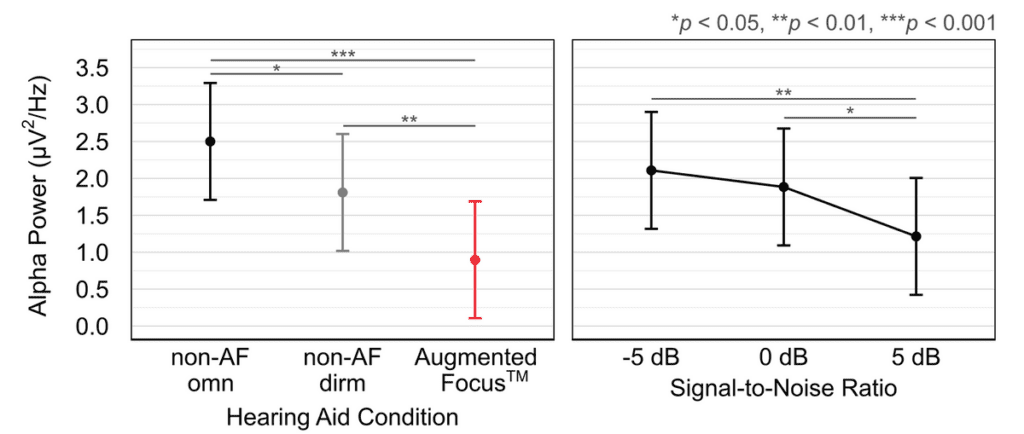

For each listener, we then calculated the average of alpha power measured at parietal electrodes for each combination of sentence context, SNR, and hearing aid condition. Statistical analysis of these data using linear mixed effects models confirmed that listener alpha power was significantly affected by both hearing aid condition (χ2(2) = 33.05, p < 0.001) and by SNR (χ2(2) = 11.05, p < 0.01). Specifically, post-hoc contrasts showed that alpha power was significantly lower in the AF condition than in either the non-AF-omn or non-AF-dirm conditions. Alpha power was also significantly lower in the non-AF-dirm condition compared to the non-AF-omn condition (Figure 4, left). In addition, post-hoc tests of the SNR effect showed that alpha power was significantly lower at an SNR of 5 dB compared to SNRs of 0 or -5 dB; average alpha power did not differ significantly between 0 and -5 dB (Figure 4, right).
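The per-listener averaging step that feeds the statistical model might look like the sketch below. The article does not list which of the 19 channels counted as parietal; P3, Pz, and P4 from the standard 10-20 montage are an assumption, as is the flat record layout. The mixed-effects model itself (which could be fit with, for example, R’s lme4 or Python’s statsmodels `mixedlm`) is not shown.

```python
from collections import defaultdict
from statistics import mean

PARIETAL = {"P3", "Pz", "P4"}  # assumed parietal subset of the 19-channel montage


def parietal_alpha_by_cell(records):
    """Average alpha power over parietal channels for each listener/condition cell.

    records: iterable of (listener, ha_condition, context, snr_db, channel, alpha_power)
    returns: {(listener, ha_condition, context, snr_db): mean parietal alpha power}
    """
    grouped = defaultdict(list)
    for listener, ha, context, snr, channel, power in records:
        if channel in PARIETAL:
            grouped[(listener, ha, context, snr)].append(power)
    return {cell: mean(values) for cell, values in grouped.items()}


# Placeholder values for illustration only (not data from the study).
records = [
    ("S01", "AF", "high", 0, "P3", 1.0),
    ("S01", "AF", "high", 0, "Pz", 2.0),
    ("S01", "AF", "high", 0, "P4", 3.0),
    ("S01", "AF", "high", 0, "Fz", 100.0),  # frontal channel: ignored
]
print(parietal_alpha_by_cell(records))  # {('S01', 'AF', 'high', 0): 2.0}
```

Each resulting cell value becomes one observation in the model, with hearing aid condition, SNR, and sentence context as fixed effects and listener as a grouping factor.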

Figure 4. Significant effects of Hearing Aid (left panel) and SNR (right panel) conditions on listener alpha power. Points represent estimated marginal means and error bars represent 95% confidence intervals of these means. Horizontal bars indicate significant post-hoc contrasts.

The effects on alpha power agreed with listener speech-in-noise performance, which was also significantly affected by hearing aid condition (χ2(2) = 273.50, p < 0.001) and SNR (χ2(2) = 617.00, p < 0.001). However, unlike alpha power, speech-in-noise performance was also significantly affected by sentence context (χ2(1) = 22.46, p < 0.001). Speech-in-noise performance improved with increasing SNR in all hearing aid conditions, and at every SNR it was best in the AF condition, followed by non-AF-dirm and then non-AF-omn. Speech-in-noise performance was also better for high context than for low context speech materials, as reported previously by Slugocki et al.18
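As a quick sanity check, the reported χ2 statistics can be converted to p-values. The article does not say whether they come from likelihood-ratio or Wald tests, but either way they are referred to a χ2 distribution with the stated degrees of freedom. For 1 and 2 degrees of freedom the upper tail has a closed form (erfc(√(x/2)) and e^(-x/2), respectively), so the sketch needs only Python’s `math` module.

```python
import math


def chi2_sf(x, df):
    """Upper-tail p-value of a chi-square statistic (closed form for df = 1 or 2)."""
    if df == 1:
        return math.erfc(math.sqrt(x / 2))
    if df == 2:
        return math.exp(-x / 2)
    raise ValueError("this sketch only handles df = 1 or df = 2")


# (statistic, degrees of freedom) as reported in the Results.
REPORTED = {
    "alpha ~ hearing aid": (33.05, 2),
    "alpha ~ SNR": (11.05, 2),
    "performance ~ hearing aid": (273.50, 2),
    "performance ~ SNR": (617.00, 2),
    "performance ~ context": (22.46, 1),
}

for effect, (stat, df) in REPORTED.items():
    print(f"{effect}: chi2({df}) = {stat}, p = {chi2_sf(stat, df):.2e}")
```

All five statistics give p-values below their reported thresholds; the SNR effect on alpha power, for instance, works out to roughly p ≈ 0.004, consistent with the reported p < 0.01.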

Together, these results suggest that AF technology improves speech-in-noise performance and reduces the effort required to understand speech across problematic SNRs typically encountered by hearing aid wearers. These results also support the RRT as a tool for studying listening effort in listeners with a hearing loss.

Discussion

The results of this study expand our knowledge of how Signia AX with Augmented Focus (AF) technology helps listeners to understand speech in difficult listening situations. Similar to Jensen et al,16 we observed that AF outperformed a conventional directional system (non-AF-dirm) in comparative measures of speech recognition. As expected, we further observed that both AF and non-AF-dirm outperformed an omnidirectional system (non-AF-omn). Differences in alpha power between these hearing aid conditions extend our behavioral results to suggest that the intelligibility advantages of Augmented Focus lead to less effortful listening across a range of SNRs typical of difficult listening environments.

The alpha power results also support prior speculation that Augmented Focus technology provides the auditory brain with the cues it needs to group and maintain the acoustic features of a single talker as separate from the surrounding noise/babble without the need to invoke top-down (ie, cognitively demanding/effortful) processes.18 The bottom-up nature of the Signia AX processing advantages may also explain why listeners are willing to accept greater levels of background noise, while still maintaining speech understanding, across a range of input speech levels when tested with AF-enabled devices than with devices using conventional directionality.17

Together, these studies suggest that the benefits of Signia AX with Augmented Focus extend beyond performance improvements on laboratory speech-in-noise tests; they actually improve how wearers feel about listening to speech in challenging conditions.

Correspondence can be addressed to Dr Slugocki at: [email protected].

Citation for this article: Slugocki C, Korhonen P. Split-processing in hearing aids reduces neural signatures of speech-in-noise listening effort. Hearing Review. 2022;29(4):20-23.

References

1. Pichora-Fuller MK, Kramer SE, Eckert MA, et al. Hearing impairment and cognitive energy: The framework for understanding effortful listening (FUEL). Ear Hear. 2016;37:5S-27S.

2. Kramer SE, Teunissen CE, Zekveld AA. Cortisol, Chromogranin A, and pupillary responses evoked by speech recognition tasks in normally hearing and hard-of-hearing listeners: A pilot study. Ear Hear. 2016;37:126S-135S.

3. Hornsby BWY. The effects of hearing aid use on listening effort and mental fatigue associated with sustained speech processing demands. Ear Hear. 2013;34(5):523-534.

4. Kramer SE, Kapteyn TS, Kuik DJ, Deeg DJH. The association of hearing impairment and chronic diseases with psychosocial health status in older age. J Aging Health. 2002;14(1):122-137.

5. Livingston G, Huntley J, Sommerlad A, et al. Dementia prevention, intervention, and care: 2020 report of the Lancet Commission. Lancet. 2020;396(10248):413-446.

6. Gendolla GHE, Richter M. Effort mobilization when the self is involved: Some lessons from the cardiovascular system. Rev Gen Psychol. 2010;14(3):212-226.

7. Shinn-Cunningham BG. Object-based auditory and visual attention. Trends Cogn Sci. 2008;12(5):182-186.

8. Francis AL, Love J. Listening effort: Are we measuring cognition or affect, or both? Wiley Interdiscip Rev Cogn Sci. 2020;11(1):e1514.

9. Slugocki C, Kuk F, Korhonen P. Development and clinical applications of the ORCA Repeat and Recall Test (RRT). Hearing Review. 2018;25(12):22-26.

10. Gagne J-P, Besser J, Lemke U. Behavioral assessment of listening effort using a dual-task paradigm: A review. Trends Hear. 2017;21:1-25.

11. McMahon CM, Boisvert I, de Lissa P, et al. Monitoring alpha oscillations and pupil dilation across a performance-intensity function. Front Psychol. 2016;7.

12. Dimitrijevic A, Smith ML, Kadis DS, Moore DR. Cortical alpha oscillations predict speech intelligibility. Front Hum Neurosci. 2017;11.

13. Dimitrijevic A, Smith ML, Kadis DS, Moore DR. Neural indices of listening effort in noisy environments. Sci Rep. 2019;9:11278.

14. Strauss A, Wöstmann M, Obleser J. Cortical alpha oscillations as a tool for auditory selective inhibition. Front Hum Neurosci. 2014;8.

15. Best S, Serman M, Taylor B, Høydal EH. Backgrounder Augmented Focus [Signia white paper]. https://www.signia-library.com/scientific_marketing/backgrounder-augmented-focus/. Published May 31, 2021.

16. Jensen NS, Høydal EH, Branda E, Weber J. Augmenting speech recognition with a new split-processing paradigm. Hearing Review. 2021;28(6):24-27.

17. Kuk F, Slugocki C, Davis-Ruperto N, Korhonen P. Measuring the effect of adaptive directionality and split processing on noise acceptance at multiple input levels. Int J Audiol. 2022;1-9. doi:10.1080/14992027.2021.2022789.

18. Slugocki C, Kuk F, Korhonen P, Ruperto N. Using the mismatch negativity (MMN) to evaluate split-processing in hearing aids. Hearing Review. 2021;28(10):20-23.

19. Klem GH, Lüders HO, Jasper HH, Elger C. The ten-twenty electrode system of the International Federation. The International Federation of Clinical Neurophysiology. Electroencephalogr Clin Neurophysiol. 1999;52:3-6.
