Spontaneous gesture can help children learn, whether they use a spoken language or a sign language, according to a new article published online in Philosophical Transactions of the Royal Society B. The article will appear in the September 19 print issue of the journal, a theme issue on “Language as a Multimodal Phenomenon.”

Previous research by Susan Goldin-Meadow, the Beardsley Ruml Distinguished Service Professor in the Department of Psychology, has found that gesture helps children develop their language, learning, and cognitive skills. As one of the nation’s leading authorities on language learning and gesture, she has also studied how using gesture helps older children improve their mathematical skills.

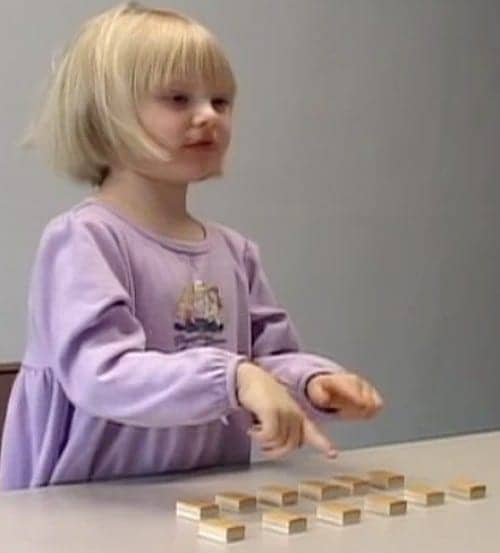

Using gestures helps children develop basic learning and cognitive skills, aiding them in problem-solving tasks. Photo courtesy of Laura Tharsen/Susan Goldin-Meadow Lab.

Goldin-Meadow’s new study examines how gesturing contributes to language learning in hearing and in deaf children. She concludes that gesture is a flexible way of communicating, one that can work with language to communicate or, if necessary, can itself become language.

“Children who can hear use gesture along with speech to communicate as they acquire spoken language,” says Goldin-Meadow. “Those gesture-plus-word combinations precede and predict the acquisition of word combinations that convey the same notions. The findings make it clear that children have an understanding of these notions before they are able to express them in speech.”

In addition to children who learned spoken languages, Goldin-Meadow studied children who learned sign language from their parents. She found that they, too, gesture as they sign in American Sign Language. These gestures predict learning, just like the gestures that accompany speech.

Finally, Goldin-Meadow looked at deaf children whose hearing losses prevented them from learning spoken language, and whose hearing parents had not exposed them to a conventional sign language. These children use homemade gesture systems, called homesign, to communicate. Homesign has properties in common with natural languages but is not a full-blown language, perhaps because the children lack “a community of communication partners,” Goldin-Meadow writes. Nevertheless, homesign can be the “first step toward an established sign language.” In Nicaragua, individual gesture systems blossomed into a more complex shared system when homesigners were brought together for the first time.

These findings provide insight into gesture’s contribution to learning. Gesture plays a role in learning for signers even though it is in the same modality as sign. This means that gesture cannot aid learners simply by providing a second modality. Rather, gesture adds imagery to the categorical distinctions that form the core of both spoken and sign languages.

Goldin-Meadow concludes that gesture can be the basis for a self-made language, assuming linguistic forms and functions when other vehicles are not available. But when a conventional spoken or sign language is present, gesture works along with language, helping to promote learning.

Source: University of Chicago News