Tech Topic | March 2022 Hearing Review

By Andreas Seelisch, MSc; Sally Norris, PhD Candidate; and Robert Koch, MESc

Simulation appears both sensitive to identifying training needs related to REM and other core competencies, such as cerumen management and earmold impressions, and capable of providing the necessary outlet for follow-up training. It also offers valuable opportunities for between-clinician comparison.

Hearing healthcare training programs and professional licensure are designed to ensure adequate training and preparedness for clinical practice, yet clinical staff vary tremendously across skill sets, especially in real-ear measurement (REM). This variability can result from numerous factors, including academic training background, years of experience, differing clinical experiences and practice settings, and the value individuals and organizations place on REM. Variability of this nature poses a threat to organizations seeking to maintain the highest level of care.

REM is widely considered best practice, yet research confirms inconsistent use of verification procedures in general, with estimates of routine use in US fittings ranging from 35% to 55%.1,2 Even where equipment exists and guidelines call for routine use, evidence shows that an even smaller minority of hearing care professionals match fittings to targets from prescriptive fitting algorithms (eg, NAL-NL2, DSL), despite research indicating that 80% of patients would prefer being fit in this manner.2,3 Poor proficiency with REM can manifest in several ways, including poor match to target due to shallow probe-tube placement.4 As such, there is a strong rationale for ensuring staff are proficient with core equipment and key processes.

Evaluating proficiency in a private-practice setting, however, can be challenging. Evaluating staff during patient appointments might impair rapport building, erode patient confidence in the clinician or organization, or expose patients to additional discomfort or risk. Traditional alternatives, such as conducting measures on the coach or other staff members, carry their own constraints related to cost-effectiveness, coordination, practicality, and even the ability to observe effectively.

Simulation as a Solution

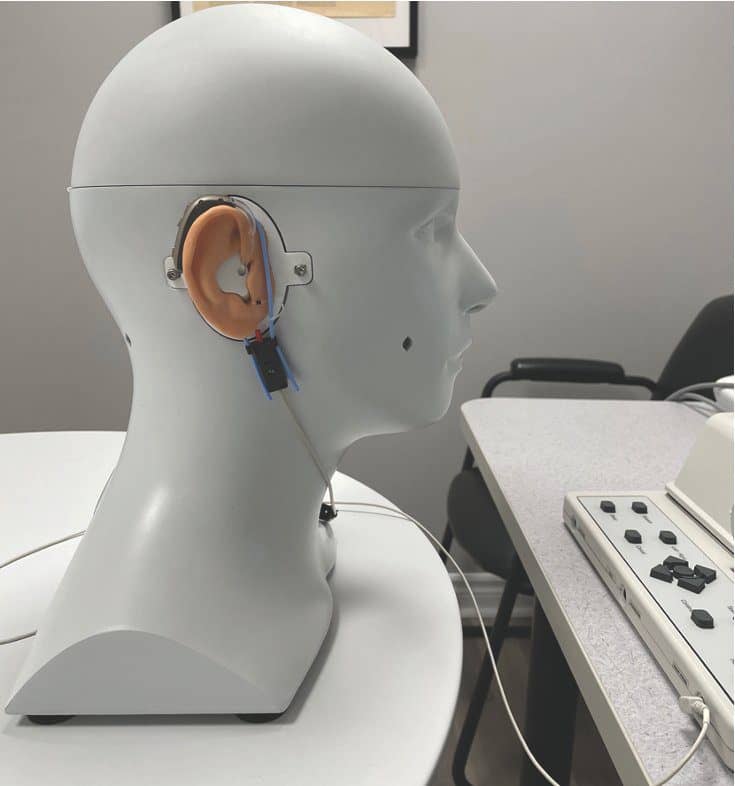

Simulation may provide the opportunity to evaluate clinical proficiency in a manner that is safe, practical, and efficient. CARL, a patient simulator developed by AHead Simulations, was designed to support training and has been gaining popularity in hearing healthcare education since 2018 (Figure 1).5,6 CARL features an anthropomorphic head-and-shoulders model with silicone-based ears developed directly from clinical CT scans of real ears. These ears match the anatomy of the auricle, external auditory meatus, and tympanic membrane, with contours and ear canal acoustics that fall within clinical norms.7 Multiple anatomies can be exchanged on the same manikin to simulate different real-ear acoustics. A camera inside the manikin provides an in-canal view of CARL's ears and allows the distance to the tympanic membrane to be estimated. As a result, the coach can watch otoscopy, probe-tube placement, and hearing aid positioning from inside the canal in real time while the clinician is working.

Figure 1. The CARL manikin set up in front of an Audioscan Verifit1 to conduct a hearing aid fitting.

Our study aimed to determine the feasibility of using CARL to establish clinicians' baseline performance, proficiency with REM, and adherence to standards of practice. We also estimated how well CARL functions as a vehicle for opportunity-specific feedback and follow-up training.

Protocol for Baseline Measurement of Clinical Skill

A semi-structured "sandbox" approach with a multiple-case-study design was used with a cross section of 22 clinicians, each evaluated using CARL in a simulated mock fitting.8 All clinicians completed a 2-hour session with CARL while being observed by a coach whose role within the organization includes coaching, training, and maintaining standards of practice. Clinician experience ranged from new graduates to more than 10 years of clinical practice. Academic training backgrounds included college-level diplomas, master's degrees from national programs, international master's degrees, and international Doctor of Audiology (AuD) degrees.

Clinicians were given the following instructions: "Please complete a mock fitting on CARL using a typical hearing loss and appropriate hearing aid of your choosing, verifying the results to your satisfaction." Clinicians were encouraged to explain their reasoning throughout. Once the fitting was complete, the ear on CARL was switched from a small canal to a large canal or vice versa, a change to which the clinician was blinded.

Throughout the process, the coach identified areas for improvement in technical use of the equipment and its relevant features, as well as in workflow efficiency. Open-ended questions were used throughout to capture insight into each clinician's clinical reasoning, decision-making, theoretical knowledge, operating procedures, and own internal "standards."

Probe placement data, including the distance to the tympanic membrane and any instances of detected contact with it, were also collected. Where probe placement was deemed poor (ie, more than 5 mm from the tympanic membrane), measures were repeated and the resulting differences in gain were recorded.8
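The placement rule described above amounts to a simple threshold check on the camera-estimated distance, plus per-case tallies of depth and tympanic membrane (TM) contact. The following is a minimal Python sketch under stated assumptions: the record layout, the names `ProbePlacement`, `needs_repeat`, and `summarize`, and the example cases are all hypothetical and are not the study's data or tooling; only the 5 mm threshold comes from the protocol.

```python
from dataclasses import dataclass

# Threshold taken from the protocol: a probe tip more than 5 mm from the
# tympanic membrane (TM) was treated as poor placement.
POOR_PLACEMENT_MM = 5.0


@dataclass
class ProbePlacement:
    case_id: str              # hypothetical case label
    distance_to_tm_mm: float  # camera-estimated probe-tip-to-TM distance
    tm_contact: bool          # TM contact detected at least once in the case


def needs_repeat(p: ProbePlacement) -> bool:
    """Flag a placement for remeasurement under the 5 mm rule."""
    return p.distance_to_tm_mm > POOR_PLACEMENT_MM


def summarize(placements: list[ProbePlacement]) -> dict[str, float]:
    """Mean distance plus the share of cases with adequate depth and TM contact."""
    n = len(placements)
    if n == 0:
        raise ValueError("no placements to summarize")
    return {
        "mean_distance_mm": sum(p.distance_to_tm_mm for p in placements) / n,
        "pct_adequate_depth": 100 * sum(not needs_repeat(p) for p in placements) / n,
        "pct_tm_contact": 100 * sum(p.tm_contact for p in placements) / n,
    }


# Hypothetical example cases (illustrative only, not study data).
cases = [
    ProbePlacement("A", 3.0, True),
    ProbePlacement("B", 6.5, False),
    ProbePlacement("C", 2.5, True),
    ProbePlacement("D", 4.0, False),
]
repeat_ids = [c.case_id for c in cases if needs_repeat(c)]  # ["B"]
summary = summarize(cases)
```

With these made-up values, only case B exceeds the threshold and would be remeasured; the summary reports a 4.0 mm mean distance, adequate depth in 75% of cases, and TM contact in 50%. A placement exactly at 5 mm is not flagged, since the protocol's criterion is "greater than 5 mm."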

Beyond Probe-tube Measures

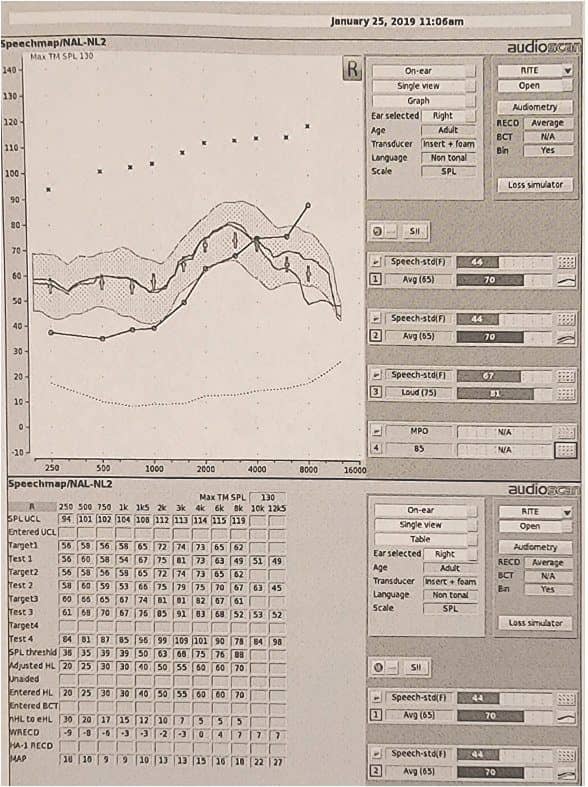

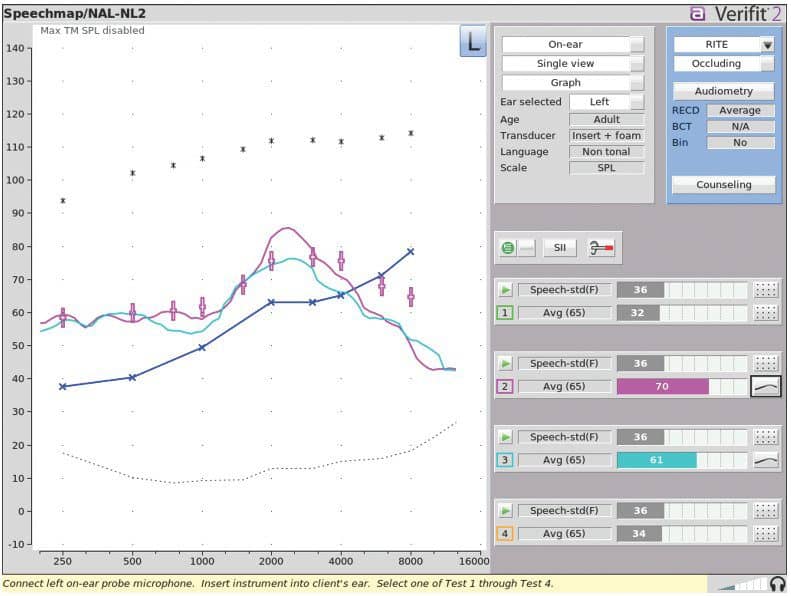

The average distance from the probe tip to the tympanic membrane was estimated at 4 mm, sufficient insertion depth was obtained in 71% of cases, and the tympanic membrane was contacted at least once in 55% of cases. In cases of suboptimal probe placement, repeating measures with ideal placement could reveal differences of as much as 18 dB at 8 kHz (Figure 2). While these quantitative data provided salient teachable moments focused on improving patient comfort or fit to target, the most profound benefits of simulation were subjective and qualitative. The most notable outcome was that the process offered universal benefit while being tailored to the individual clinician, although the degree and form of that benefit were highly variable and case-specific.

Figure 2. Example illustrating significant high-frequency differences between poor probe placement in Test 1 and corrected placement in Test 2 (a difference of 18 dB at 8000 Hz).
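The Test 1 versus Test 2 comparison in Figure 2 reduces to a per-frequency subtraction of two real-ear curves. The sketch below is a minimal Python illustration under assumptions: the curves are lists of levels in dB at a fixed set of audiometric frequencies, and the function name `largest_difference` and the example values are hypothetical, not taken from Figure 2 or from any verifier software. Because a probe tip sitting too far from the eardrum tends to under-read high-frequency levels, the largest discrepancy in this made-up example appears at the top of the frequency range.

```python
# Audiometric frequencies (Hz) at which the two curves are compared.
FREQS_HZ = [250, 500, 1000, 2000, 4000, 6000, 8000]


def largest_difference(test1, test2, freqs=FREQS_HZ):
    """Return (frequency_hz, test2 - test1 in dB) where the curves differ most."""
    if not (len(test1) == len(test2) == len(freqs)):
        raise ValueError("curves and frequency list must have the same length")
    diffs = [b - a for a, b in zip(test1, test2)]
    i = max(range(len(diffs)), key=lambda k: abs(diffs[k]))
    return freqs[i], diffs[i]


# Hypothetical real-ear levels in dB SPL (illustrative only, not Figure 2 data):
# test1 = shallow probe placement, test2 = corrected placement.
test1 = [65, 70, 72, 70, 60, 50, 40]
test2 = [65, 70, 72, 71, 63, 58, 52]
freq, delta = largest_difference(test1, test2)  # (8000, 12)
```

The sign is kept (Test 2 minus Test 1) so a positive value shows how much the shallow placement under-read the response, while the absolute value is used only to locate the largest discrepancy.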

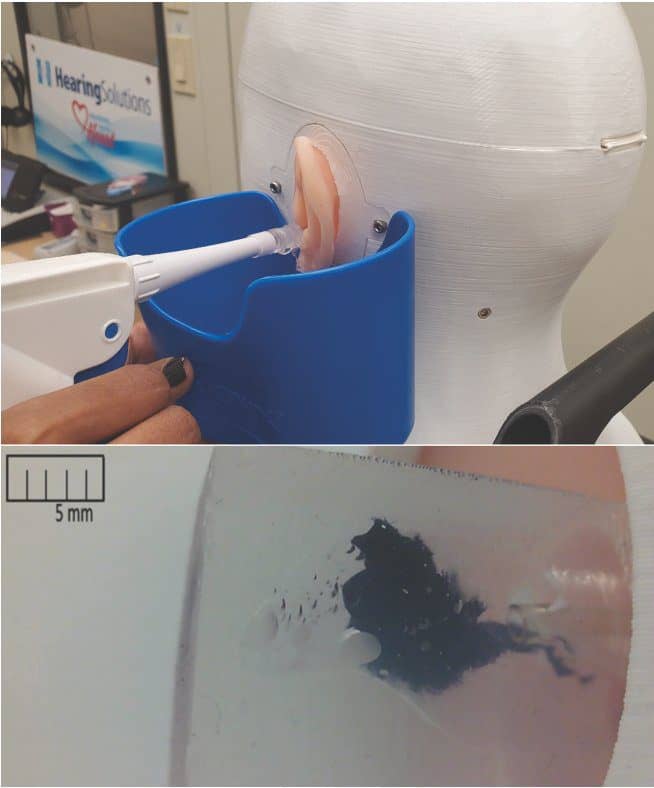

In seven of the 22 cases (32%), simulation with CARL identified a need for additional training. Of these cases, three (43%) clearly required remedial training, one (14%) benefited from additional exposure to pediatric impressions, and four (57%) were identified as benefiting from additional cerumen management skills (Figure 3). Additional simulation sessions supported each of these training scenarios; only the single pediatric case required additional equipment, in the form of a pediatric manikin (Baby-CARL). The remaining 15 cases (68%) were found through simulation to meet a minimum standard of competency under the organization's operating standards. In this manner, CARL was an effective tool for internal certification. Clinicians in every group benefited from the simulation process because it supported them in their clinical practice.

Figure 3. CARL being used for follow-up training on cerumen management. Most training sessions prompted by this study were for cerumen management, with the in-canal view supporting a better understanding of fluid dynamics and of how changes in position affect successful removal.

Clinicians were grouped into three performance categories: 12 achieved "high" performance (55%), 7 "adequate" performance (32%), and 3 "low" performance (14%); the last group was recommended for remedial training. Those who performed at a "high" level tended to require less feedback, with direction focused on improving workflow or reinforcing best practices. Workflow examples included coaching on improved probe-tube stability and demonstrating the effectiveness of new tools (eg, a probe-tube placement assistant, or data transfer using NOAH modules rather than manual input).
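The percentages reported in this section follow directly from counts out of 22 clinicians, or out of the 7 flagged clinicians for the training breakdown. The minimal Python sketch below recomputes them from the counts given in the text; the variable names are hypothetical. Two features of the arithmetic are worth noting: the three rounded performance percentages sum to 101% because of rounding, and the three training categories total eight clinicians against seven flagged, which suggests that at least one clinician fell into more than one category.

```python
TOTAL_CLINICIANS = 22
FLAGGED = 7  # clinicians for whom simulation identified additional training needs

# Counts reported in the text.
performance = {"high": 12, "adequate": 7, "low": 3}
training_needs = {"remedial": 3, "pediatric impressions": 1, "cerumen management": 4}


def pct(count: int, total: int) -> int:
    """Whole-number percentage. Python's round() sends exact .5 ties to the
    nearest even number, but none of the values here is an exact tie."""
    return round(100 * count / total)


performance_pct = {k: pct(v, TOTAL_CLINICIANS) for k, v in performance.items()}
training_pct = {k: pct(v, FLAGGED) for k, v in training_needs.items()}
flagged_pct = pct(FLAGGED, TOTAL_CLINICIANS)                       # 32
competent_pct = pct(TOTAL_CLINICIANS - FLAGGED, TOTAL_CLINICIANS)  # 68

print(performance_pct)  # {'high': 55, 'adequate': 32, 'low': 14}
print(training_pct)     # {'remedial': 43, 'pediatric impressions': 14, 'cerumen management': 57}
print(sum(performance_pct.values()), sum(training_needs.values()))  # 101 8
```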

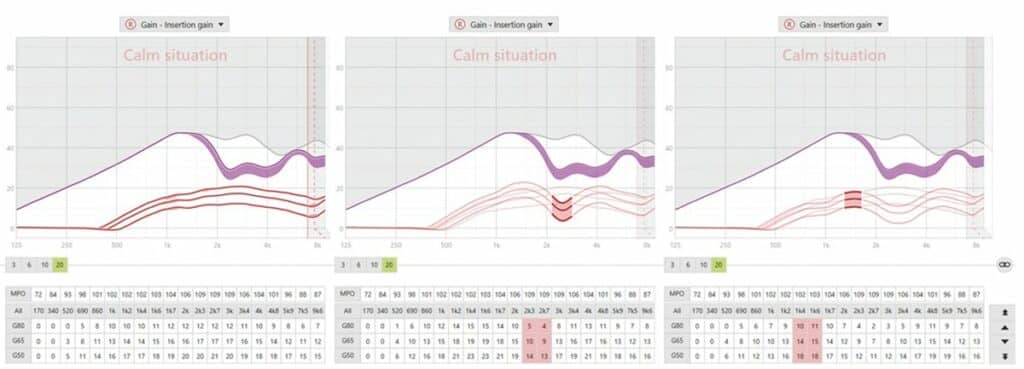

Reinforcing best practices often centered on the need to account for acoustic differences between the ears used (Figure 4). Opportunities to reinforce best practices arose in all categories, with those performing at "high" levels demonstrating greater awareness. For example, one clinician who excelled at the exercise reported that it was "really useful" and that they "never had the opportunity to see how the same settings would react differently in two patients." The clinician made this comment despite having already demonstrated a theoretical understanding of the principles of accounting for individual ear acoustics earlier in the session.

Figure 4. Pink and light blue tracings illustrate the variation between two CARL ear configurations with no changes made in the manufacturer software.

Interestingly, most individuals performing at a "high" level reported similar subjective benefits. These individuals may have appreciated the opportunity for development, the positive feedback, and knowing that their performance was aligned with expectations. By contrast, half of those with "adequate" performance were ambivalent or failed to see value in the task. We hypothesize that simulation with CARL may be sensitive to certain personality traits, such as personal standards of excellence, trainability, or the value individuals place on REM. These trends also suggested that clinician attitude and previous experience had a greater impact on outcomes than years of experience or academic training program. One striking example involves two individuals with matching training profiles (graduates of the same AuD program, each with 8 years of experience in different practice settings) who showed polarized outcomes: one was a high performer and the other required remedial training.

This hints at one of the most powerful and unintended outcomes of this study: simulation offers valuable opportunities for between-clinician comparison. It became apparent immediately after the pilot study that the coaching sessions, initially expected to measure a very specific REM skill set, in fact revealed considerable insight into an individual's trainability and alignment with the organization's values.9,10 For instance, Hearing Solutions, the organization within which the study was conducted, places a premium on core values that drive customer outcomes, such as professionalism, adherence to evidence-based practice, verification and validation measures, and passion and the pursuit of excellence. Individuals whose values do not align might be more willing to cut corners in practice, omit certain tests, and show less interest in the exercise.

As such, the simulation exercise appears sensitive to identifying those less likely to be a long-term fit for the organization. Consistent with this, all 3 individuals who needed remedial training left the organization within a year. It is unlikely that individuals who graduated with advanced degrees were incapable of these tasks; it is more likely that they were unwilling or uninterested. Similarly, individuals whose performance was categorized as "adequate" were more than twice as likely to leave the organization as those who performed at a "high" level.

Figure 5. Adjustment software showing significant variation between the first fit and the settings for two CARL ear configurations, in each of which an approximate match to target was obtained.

Notably, a small subset of three (14%) recently graduated clinicians had some previous exposure to CARL; however, they perceived this experience as different because the protocols differed dramatically. This suggests specific value in the sandbox approach, which appears to offer rich opportunities for feedback tailored to the individual, and a distinct value for simulation in private practice, separate from its educational value in the academic arena.

Summary

Simulation appears both sensitive to identifying training needs related to REM and other core competencies, such as cerumen management and earmold impressions, and capable of providing the necessary outlet for follow-up training. CARL proves an ideal tool for internal certification, enabling clinic owners and operators to ensure staff are consistently performing at the highest level and are aligned with organizational values.

As our industry continues to evolve and face disruption in various forms, such as the increased availability of direct-to-consumer devices, prospective patients will bring increased scrutiny and expectations, and clinic owners and operators will increasingly need to ensure they are offering consistent value. CARL appears well positioned to help private practices deliver on that value proposition by ensuring hearing healthcare providers are viewed as expert professionals rather than technicians. More than ever, hearing healthcare professionals will need to operate at the top of their practice, and it is the responsibility of both the clinician and the clinic to promote and maintain high standards of clinical care.

Correspondence can be addressed to Rob Koch at: [email protected].

Citation for this article: Seelisch A, Norris S, Koch R. Using simulation with CARL to create coaching opportunities and proficiency with REM. Hearing Review. 2022;29(3):40-44.

References

- Mueller HG. Probe-mic measures: Hearing aid fitting's most neglected element. Hear J. 2005;58(10):21-30.

- Mueller HG, Picou EM. Survey examines popularity of real-ear probe-microphone measures. Hear J. 2010;63(5):27-32.

- Valente M, Oeding K, Brockmeyer A, Smith S, Kallogjeri D. Differences in word and phoneme recognition in quiet, sentence recognition in noise, and subjective outcomes between manufacturer first-fit and hearing aids programmed to NAL-NL2 using real-ear measures. J Am Acad Audiol. 2018;29(8):706-721.

- Vaisberg JM, Macpherson EA, Scollie SD. Extended bandwidth real-ear measurement accuracy and repeatability to 10 kHz. Int J Audiol. 2016;55(10):580-586.

- Scollie S, Koch R. Learning amplification with CARL: A new patient simulator. AudiologyOnline. https://www.audiologyonline.com/articles/learning-amplification-with-carl-new-25840. Published September 16, 2019.

- Billey J. Training & product learning with simulation: A new clinician's view of the benefits. AudiologyOnline. https://www.audiologyonline.com/interviews/training-product-learning-with-simulation-26857. Published March 30, 2020.

- Folkeard P, et al. An evaluation of the CARL manikin for use in 'patient-free' real ear measurement: Consistency and comparison to normative data. Submitted to Int J Audiol; 2021.

- Leckenby E, Dawoud D, Bouvy J, Jónsson P. The Sandbox Approach and its potential for use in health technology assessment: A literature review. Appl Health Econ Health Policy. 2021;19:857-869.

- Seelisch A, Koch R. Clinical utility of CARL, a patient simulator for clinician training, in a private practice setting. Paper presented at: 2019 Canadian Academy of Audiology (CAA) Conference and Exhibition; October 27-30, 2019; Halifax, Nova Scotia, Canada.

- Seelisch A, Koch R. Clinical utility of CARL, a patient simulator for clinician training, in a private practice setting. Paper to be presented at: American Academy of Audiology (AAA) 2020 Annual Conference; April 1-4, 2020 [conference cancelled]; New Orleans, LA.