Summary:

Researchers at Macquarie University have discovered that the human brain rapidly adapts to the acoustic characteristics of spaces, and that speech understanding is optimized in environments with a moderate, “Goldilocks” amount of reverberation.

Key Takeaways:

- Reverberation ‘sweet spot’: Speech comprehension improves most in spaces with about 400 milliseconds of echo—too much or too little reverberation hinders learning.

- Brain mechanisms: The dorsolateral prefrontal cortex is crucial for adapting to different acoustic environments, showing that listening involves active brain learning, not just hearing.

- Implications for design and technology: Findings could inform better hearing devices and the creation of inclusive spaces by leveraging beneficial levels of reverberation rather than eliminating all echo.

Hearing researchers at Macquarie University in Australia have shown that listeners rapidly learn and adapt to the characteristics of acoustic spaces to improve their understanding of speech, and have found evidence for the brain mechanisms involved in ‘listening to the room’.

Their findings also indicate the presence of a reverberation ‘sweet spot’ in which learning and adaptation are optimized.

Building on earlier work that showed animals’ brains quickly adapt to changes in sound levels, the new study, published online in eLife and funded by the Australian Research Council, is the first to show how humans adapt to echoey environments to improve their speech understanding.

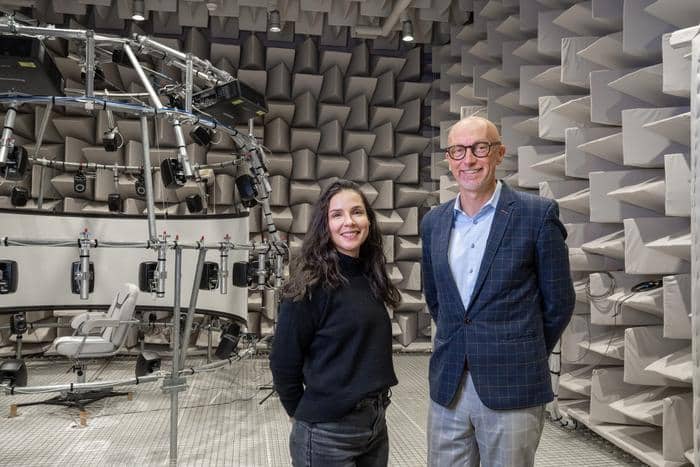

Volunteers with normal hearing were placed in the University’s anechoic chamber, a specially designed space free of reverberation, and listened to short speech commands.

Using recordings from real spaces, including an underground car park, a lecture theatre, and an open-plan office, the researchers simulated different types of background noise and levels of reverberation.

Senior author of the study, Distinguished Professor David McAlpine, says participants’ understanding of speech improved over time, even in difficult spaces.

“What was surprising was that they learned best in spaces with ‘just the right’ amount of echo – about 400 milliseconds of reverberation, which is typical of many modern spaces like lecture theatres.

“Environments with too much echo, like marble-filled lobbies or underground car parks, made learning to listen much harder, as did, counterintuitively, rooms with no echo at all.

“This sweet spot, or ‘Goldilocks’ zone, seems to match the average reverberation of spaces we spend most of our time in, so it’s possible we’ve designed our buildings to fit our brains, or that our brains have adapted to these buildings.”

Listening is more than hearing

First author Dr Heivet Hernández-Pérez says hearing speech in a slightly echoey environment gives the brain time to adjust and recognize patterns.

“Over the course of the 45-minute test, people got better at recognizing speech because their brains were learning the ‘sound of the room’,” she says.

“It’s not about remembering the room consciously. It’s about your brain learning the structure of the environment and using that to make sense of speech, even without you realizing it.

“Our ears hear, but our brains listen; they’re constantly adapting through feedback loops, learning and changing on the fly.”

As part of the study, the team used non-invasive magnetic brain stimulation to briefly disrupt activity of the dorsolateral prefrontal cortex, an area of the brain involved in learning.

When they did, participants’ ability to adapt to different sound environments dropped significantly.

“This shows us that there are specific brain circuits responsible for this kind of learning,” Dr Hernández-Pérez says.

“Understanding how they work could help us develop better, more inclusive sound environments, whether we’re talking about public spaces or personalized hearing technology.

“It’s important to recognize that listening is an immersive experience, shaped by our environments, our brains and how the two interact.

“We’re not just hearing sounds; we’re hearing the world through those sounds.”

Shaping technology and inclusive spaces

Professor McAlpine says the team’s findings will feed into the design of hearing and listening devices, such as hearing aids and headphones.

“Most hearing technology tries to eliminate all background noise and echo, but if some reverb helps people hear better, then we may be throwing out something that the brain finds useful.”

The team is now designing new studies to explore how neurodivergent people and people with hearing loss experience reverberation, and whether their Goldilocks zones are different from those of neurotypical listeners.

Featured image: Dr Heivet Hernández-Pérez and Distinguished Professor David McAlpine in the anechoic chamber at Macquarie University Hearing, where their research on ‘listening to the room’ was conducted. Photo: Macquarie University