Summary:

A deep transfer learning AI model predicted with 92% accuracy the spoken language outcomes of children one to three years after they received cochlear implants, demonstrating a robust, globally applicable tool to guide earlier, individualized intervention.

Key Takeaways:

- The AI model outperformed traditional machine learning approaches across heterogeneous, multi-site, multilingual MRI datasets.

- Accurate pre-implantation predictions could enable a “predict-to-prescribe” approach, identifying children who may benefit from intensified early speech and language therapy.

- Findings support the feasibility of a single prognostic AI tool for cochlear implant programs worldwide, regardless of imaging protocols or language backgrounds.

An AI model using deep transfer learning, an advanced form of machine learning, predicted with 92% accuracy the spoken language outcomes of children one to three years after they received cochlear implants, according to a large international study published in JAMA Otolaryngology-Head & Neck Surgery.

Although cochlear implantation is often the best and only effective treatment to improve hearing and enable spoken language for children with severe to profound hearing loss, spoken language development after early implantation is more variable than in children born with typical hearing. If children who are likely to have more difficulty with spoken language are identified prior to implantation, intensified therapy can be offered earlier to improve their speech.

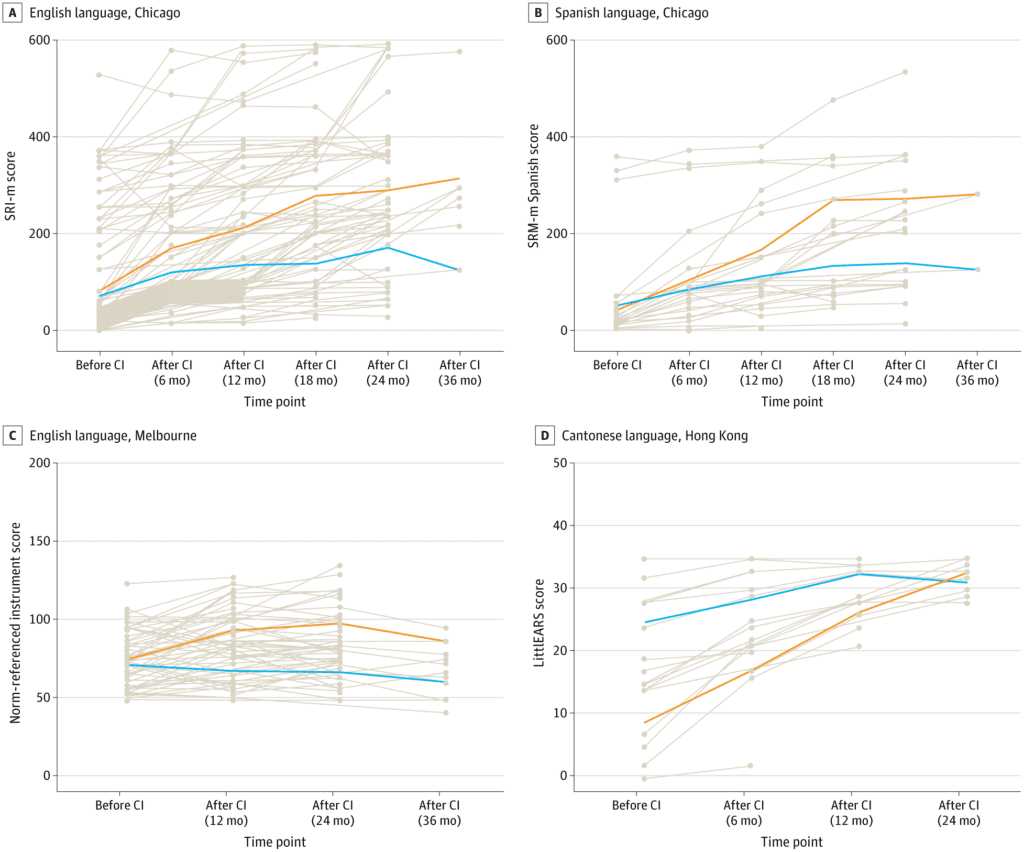

Researchers trained AI models to predict outcomes based on pre-implantation brain MRI scans from 278 children in Hong Kong, Australia, and the United States, representing three languages (English, Spanish, and Cantonese). The three centers in the study also used different protocols for scanning the brain and different outcome measures.

Such complex, heterogeneous datasets are problematic for traditional machine learning, but the study showed excellent results with the deep learning model. It outperformed traditional machine learning models on all outcome measures, according to the study authors.

“Our results support the feasibility of a single AI model as a robust prognostic tool for language outcomes of children served by cochlear implant programs worldwide. This is an exciting advance for the field,” says senior author Nancy M. Young, MD, medical director of Audiology and Cochlear Implant Programs at Ann & Robert H. Lurie Children’s Hospital of Chicago – the U.S. center involved in the study. “This AI-powered tool allows a ‘predict-to-prescribe’ approach to optimize language development by determining which child may benefit from more intensive therapy.”

This work was supported by Research Grants Council of Hong Kong grant GRF14605119 and National Institutes of Health grants R21DC016069 and R01DC019387.