With the proliferation of smartphone usage among hearing aid users, good sound quality during streaming is no longer a ‘desire’ but a ‘need.’ Unlike conventional acoustic hearing aids, the Earlens Contact Hearing Aid’s unique mechanism of action allows it to provide low-frequency output for streamed sources while maintaining a widely vented fitting. Subjective judgments of streamed audio quality, obtained with an industry-standard method for evaluating sound quality, reveal that the Earlens Contact Hearing Aid offers the best streamed audio sound quality for both speech and music when compared with other MFi-capable acoustic hearing aids.

By Pragati Rao Mandikal Vasuki, PhD; Elizabeth Eskridge-Mitchell, AuD; and Drew Dundas, PhD

Introduction

The hearing aid industry experienced a paradigm shift with the introduction of the Made for iPhone (MFi) Bluetooth protocol, which allows hearing aids to stream sound from iPhones, iPads, and iPods. This proprietary, high-quality audio streaming protocol consumes less power than traditional Bluetooth and lets hearing aid users listen to phone calls, music, and podcasts in stereo. As streaming has become an expected feature in hearing aids, it is not surprising that Google has also announced Android specifications for low-energy Bluetooth connectivity with hearing aids.1 With more than 48 million hearing-impaired individuals in America,2 there is a growing need for better features (such as phone connectivity) and superior audio quality, for both conventional listening and streaming, in hearing aids.

While most major hearing aid manufacturers now offer audio streaming in their premium-tier devices, the subjective quality of the streamed audio may vary between devices. This was recently demonstrated in a study in which the Widex BEYOND was judged to have the best streamed audio quality when tested against acoustic hearing aids (AHAs) from three other, unnamed manufacturers in a closed-dome setting.3 Although the Widex BEYOND achieved comparatively superior ratings, its mean subjective audio quality was judged to be “fair,” and the other aids received even less favorable ratings on the subjective scale used. A common recommendation for improving the sound quality of audio streamed through an AHA is to close the dome, which provides more low-frequency output and thus a fuller, richer listening experience. However, particularly for listeners with normal low-frequency hearing, closing the dome to improve streaming sound quality may cause objectionable occlusion in non-streaming listening conditions.


Earlens offers a novel method of delivering amplification through a Contact Hearing Aid (CHA) using direct-drive technology. Direct drive enables amplified sound to be delivered over a broader bandwidth (125 Hz – 10 kHz)4 than traditional AHAs while maintaining a widely vented fitting. In a recent study, hearing-impaired individuals reported significant improvements in ease of communication and aversiveness measures with the Earlens CHA compared with their own previous AHAs.5 Music and speech containing information over a wider bandwidth (123 Hz – 10.9 kHz) are also associated with increased perceived naturalness.6 The broad bandwidth of the Earlens CHA may therefore offer comparatively rich audio quality, owing to low-frequency output with a vented fitting and audible output extending to higher frequencies, even given the 7.5 kHz high-frequency cutoff imposed by the MFi streaming codec. The aim of this study was thus to compare subjective judgments of the streamed audio quality of the Earlens Contact Hearing Aid with those of other streaming-capable acoustic hearing aids.

In the present study, a double-blind sound-quality evaluation of stimuli streamed through 4 premium MFi AHAs and the Earlens CHA was conducted using the Multiple Stimuli with Hidden Reference and Anchor (MUSHRA) protocol, based on the ITU-R BS.1534-3 recommendation.7 MUSHRA is intended for evaluating audio systems of intermediate quality across a range of audio sources. Its applications include testing low-bit-rate audio codecs,8 common codecs for streamed media,9 and hearing aids in both streaming3 and non-streaming contexts.10 Because listeners rate all versions of a stimulus within the same trial, the method allows direct comparison of the sound quality of several systems at once. We hypothesized that the Earlens Contact Hearing Aid would be rated superior to the AHAs because of its ability to deliver sound over a broader frequency range.

Methods

The experimental design was similar to that of Ramsgaard et al,3 using an industry-standard method for subjective audio quality judgments, with one additional condition: whereas Ramsgaard et al tested only a closed-dome condition, the present study tested both open- and closed-dome conditions.

Participants

Twenty-two (22) individuals who reported having normal hearing were recruited, both externally and internally from the sponsor company. After a hearing screening, fifteen (15) individuals (mean age = 30.4 years, SD = 8.5 years, range 23-53 years, 9 females) with hearing thresholds of 20 dB HL or better at octave frequencies from 250-8000 Hz were selected to participate. All participants were paid $40 for their time.
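The inclusion criterion above can be expressed as a simple check. This is a minimal sketch: the dictionary input format is hypothetical, and requiring both ears to pass is an assumption, since the text does not say how per-ear results were combined.

```python
# Octave frequencies (Hz) from 250 to 8000 Hz and the 20 dB HL cutoff described above.
SCREEN_FREQS_HZ = (250, 500, 1000, 2000, 4000, 8000)
MAX_THRESHOLD_DB_HL = 20

def ear_passes(thresholds):
    """True if every screening frequency has a threshold of 20 dB HL or better.

    `thresholds` is a hypothetical {frequency_hz: threshold_db_hl} mapping for
    one ear; a frequency that was not tested counts as a failure.
    """
    return all(
        freq in thresholds and thresholds[freq] <= MAX_THRESHOLD_DB_HL
        for freq in SCREEN_FREQS_HZ
    )

def participant_passes(left_ear, right_ear):
    # Assumption: both ears must meet the criterion.
    return ear_passes(left_ear) and ear_passes(right_ear)

# Usage
normal = {f: 10 for f in SCREEN_FREQS_HZ}
mild_hf_loss = {**normal, 8000: 30}
print(participant_passes(normal, normal))        # True
print(participant_passes(normal, mild_hf_loss))  # False
```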

Stimuli

A total of 14 audio excerpts (mean duration = 9.4 sec, SD = 1.5 sec) were chosen as stimuli. A panel of 4 expert listeners selected them from a larger sample of 75 commonly streamed audio excerpts based on their sensory and affective qualities. Table 1 lists the excerpts.

Table 1: Details of audio stimuli used for sound quality comparison

| Sample | Description | Duration (sec) |
|---|---|---|
| 1 | String quartet | 11.5 |
| 2 | Orchestra | 10.5 |
| 3 | Rock – instrumental | 8.1 |
| 4 | Classic Rock – male voice | 8.1 |
| 5 | Gaelic | 8.9 |
| 6 | Hip hop/Electronic | 10.6 |
| 7 | Jazz/Latin | 6.7 |
| 8 | Pop – female voice | 10.0 |
| 9 | Child speech | 7.4 |
| 10 | Audiobook – male voice | 8.2 |
| 11 | Podcast – 2 females | 11.2 |
| 12 | Movie – action sound effects | 10.0 |
| 13 | Movie – loud male and female voice | 10.0 |
| 14 | Movie – soft male and female voice | 10.0 |
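As a quick consistency check, the summary statistics reported for the stimuli can be recomputed from Table 1. The reported SD of 1.5 sec matches the sample standard deviation (n − 1 denominator); the population SD would round to 1.4 sec.

```python
from statistics import mean, pstdev, stdev

# Excerpt durations in seconds, copied from Table 1 (samples 1-14).
DURATIONS_SEC = [11.5, 10.5, 8.1, 8.1, 8.9, 10.6, 6.7,
                 10.0, 7.4, 8.2, 11.2, 10.0, 10.0, 10.0]

mean_dur = mean(DURATIONS_SEC)
sample_sd = stdev(DURATIONS_SEC)       # n - 1 denominator
population_sd = pstdev(DURATIONS_SEC)  # n denominator, for comparison

print(f"mean = {mean_dur:.1f} sec, sample SD = {sample_sd:.1f} sec, "
      f"population SD = {population_sd:.1f} sec")
# mean = 9.4 sec, sample SD = 1.5 sec, population SD = 1.4 sec
```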

Stereo versions of these 14 excerpts were streamed from an Apple iPod (iOS 11.0.3) to 4 premium MFi-compatible AHAs and to the CHA. The streamed output of each hearing aid was recorded so that stimuli could be presented blind and in randomized order. For recording, all hearing aids were programmed to a flat 20 dB HL audiogram using the manufacturers’ default settings. The streaming program was enabled where available; for the one hearing aid without a streaming program, the “music” program was used. Hearing aid microphones were muted during recording. The AHAs were placed on a binaural Knowles Electronics Manikin for Acoustic Research (KEMAR) in a sound-treated booth, and stimuli were recorded in two venting configurations: a) open fit, using a medium-sized KEMAR pinna; and b) closed fit, recorded directly in the coupler to ensure a fully closed seal around the AHA dome. For the CHA, recordings were made through the manufacturer’s simulator, which simulates the signal at the tympanic membrane. Only one recording of each of the 14 excerpts was obtained from the CHA, because the open- and closed-fit distinction does not apply to it: all CHA recordings used a widely vented custom earmold, since the CHA provides low-frequency output even with a vented fitting.

In addition to the five hearing aid recordings, three further versions of each stimulus were prepared: a) the original, unaltered stimulus, used as the ‘reference’; b) a 3.5 kHz low-pass filtered version, used as a hidden ‘low anchor’; and c) a 7 kHz low-pass filtered version, used as a hidden ‘mid anchor.’ To avoid loudness differences between versions, all stimuli were then loudness-equalized using the model proposed by Glasberg and Moore.11
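The hidden anchors described above are low-pass filtered copies of the reference. The sketch below builds them with a windowed-sinc FIR filter in plain Python. The 3.5 kHz and 7 kHz cutoffs come from the text; the filter type (Hamming-windowed sinc), its length (101 taps), and the 44.1 kHz sampling rate are assumptions for illustration, not the filters used in the study.

```python
import math

def lowpass_fir(cutoff_hz, fs_hz, num_taps=101):
    """Hamming-windowed sinc low-pass FIR (linear phase), normalized to unity DC gain."""
    if num_taps % 2 == 0:
        raise ValueError("use an odd number of taps so the filter has an integer delay")
    fc = cutoff_hz / fs_hz                 # cutoff in cycles per sample
    mid = (num_taps - 1) // 2
    taps = []
    for n in range(num_taps):
        k = n - mid
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    dc_gain = sum(taps)
    return [t / dc_gain for t in taps]

def filter_same_length(taps, signal):
    """Convolve and compensate the filter's group delay so output lines up with input."""
    delay = (len(taps) - 1) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, h in enumerate(taps):
            idx = i + delay - j
            if 0 <= idx < len(signal):     # samples outside the signal are treated as zero
                acc += h * signal[idx]
        out.append(acc)
    return out

def make_anchors(reference, fs_hz=44100):
    """Return the hidden low (3.5 kHz) and mid (7 kHz) anchors for one reference signal."""
    return {
        "low_anchor": filter_same_length(lowpass_fir(3500, fs_hz), reference),
        "mid_anchor": filter_same_length(lowpass_fir(7000, fs_hz), reference),
    }

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

# Usage: a 5 kHz tone should survive the mid anchor but not the low anchor.
fs = 44100
tone = [math.sin(2 * math.pi * 5000 * n / fs) for n in range(2000)]
anchors = make_anchors(tone, fs)
core = slice(200, 1800)    # skip edges where zero-padding affects the output
low_ratio = rms(anchors["low_anchor"][core]) / rms(tone[core])
mid_ratio = rms(anchors["mid_anchor"][core]) / rms(tone[core])
print(f"low anchor keeps {low_ratio:.3f}, mid anchor keeps {mid_ratio:.3f} of the 5 kHz RMS")
```

A linear-phase FIR is used here so the anchors stay time-aligned with the reference, which keeps the versions easy to switch between within a trial.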

Procedure

Sound-quality evaluation followed the MUSHRA protocol and consisted of three blocks: a training block of 2 trials, followed by two test blocks of 14 trials each, one for open-fit and one for closed-fit recordings. Participants proceeded to the test blocks only if they could discriminate between the different versions of the stimuli in the training block. For each participant, the order of the stimulus versions within each trial and the order of the 14 excerpts were randomized, and the order of the two test blocks was counterbalanced across participants. Sounds were presented through headphones at a comfortable listening level determined during the training block.
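The randomization and counterbalancing scheme described above can be sketched as follows, using the eight versions rated on each trial (four AHAs, the CHA, the reference, and the two anchors). The condition labels are illustrative; alternating the block order by participant index, and re-shuffling the excerpt order within each block, are assumptions about how “counterbalanced” and “randomized” were implemented.

```python
import random

EXCERPTS = list(range(1, 15))                  # Table 1 sample numbers
TEST_BLOCKS = ("open-fit", "closed-fit")
# The eight versions rated on every trial (labels are illustrative).
CONDITIONS = ("AHA1", "AHA2", "AHA3", "AHA4", "CHA",
              "reference", "low_anchor", "mid_anchor")

def session_plan(participant_index, seed=None):
    """Return a list of (block, excerpt, condition_order) tuples for one participant."""
    rng = random.Random(seed)
    # Counterbalancing (assumed scheme): even-numbered participants start with
    # the open-fit block, odd-numbered participants with the closed-fit block.
    blocks = TEST_BLOCKS if participant_index % 2 == 0 else TEST_BLOCKS[::-1]
    plan = []
    for block in blocks:
        excerpt_order = EXCERPTS[:]
        rng.shuffle(excerpt_order)             # random excerpt order per block
        for excerpt in excerpt_order:
            condition_order = list(CONDITIONS)
            rng.shuffle(condition_order)       # random on-screen order within the trial
            plan.append((block, excerpt, condition_order))
    return plan

plan = session_plan(participant_index=1, seed=7)
print(len(plan), plan[0][0])  # 28 closed-fit
```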

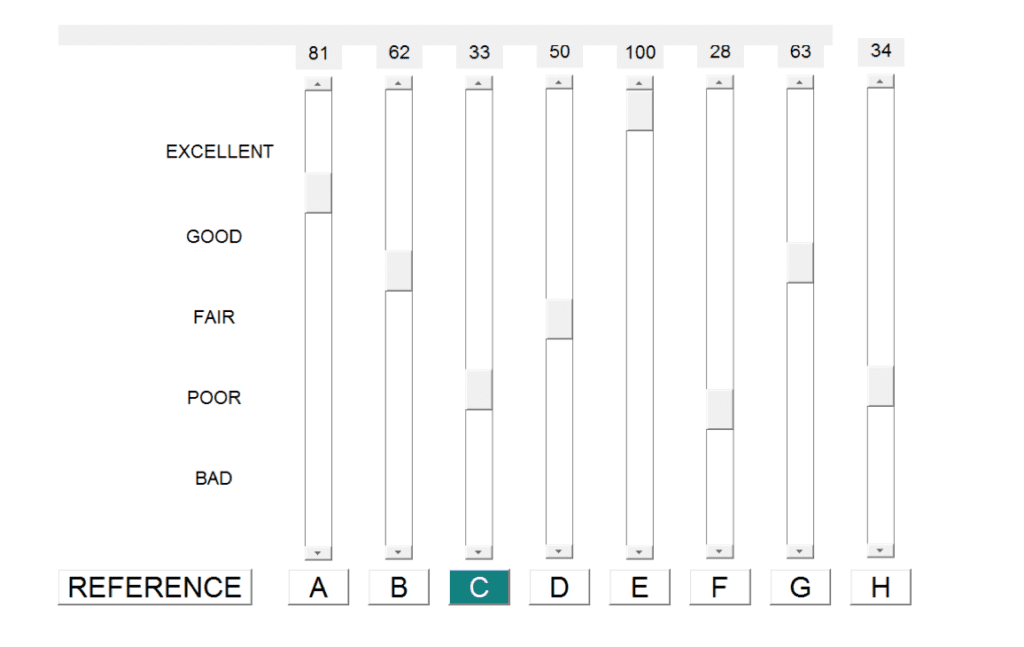

In every block, participants used a graphical user interface (GUI; see Figure 1) to rate all stimuli on a 0-100 scale. On each trial, participants compared and rated one recording of the stimulus from each of the 4 AHAs, one from the CHA, one high-quality ‘reference’ version, and two degraded ‘anchor’ versions, which are intended to spread responses across the full range of the scale as per the MUSHRA paradigm. The experiment took approximately 1 hour in total.

Results and discussion

All participants passed the post-screening recommended in the MUSHRA protocol (ITU-R BS.1534-3).
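The post-screening step can be sketched as below. It follows our reading of the hidden-reference criterion in ITU-R BS.1534-3 (exclude an assessor who scores the hidden reference below 90 on more than 15% of test items); the function name is hypothetical, and the recommendation itself should be consulted for the full screening rules.

```python
def fails_post_screening(hidden_reference_scores, min_score=90.0, max_fraction=0.15):
    """Return True if the assessor should be excluded.

    An assessor is excluded when the hidden reference is scored below
    `min_score` on more than `max_fraction` of the test items.
    """
    if not hidden_reference_scores:
        raise ValueError("need at least one hidden-reference score")
    n_low = sum(1 for score in hidden_reference_scores if score < min_score)
    return n_low / len(hidden_reference_scores) > max_fraction

# Usage: 28 test items (14 excerpts x 2 test blocks) in this study's design.
ok_assessor = [100] * 24 + [85] * 4      # 4/28 = 14.3% low -> kept
bad_assessor = [100] * 23 + [85] * 5     # 5/28 = 17.9% low -> excluded
print(fails_post_screening(ok_assessor), fails_post_screening(bad_assessor))  # False True
```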

A repeated-measures analysis of variance (ANOVA) was used to analyze sound-quality ratings, with hearing aid type and type of venting as independent variables. Mauchly’s test was used to check the assumption of sphericity; when it was violated, degrees of freedom were adjusted using the Greenhouse-Geisser correction. There were significant main effects of hearing aid type [F(2.38, 33.46) = 62.51, p < .001] and type of venting [F(1, 14) = 84.92, p < .001], and a significant interaction between hearing aid type and venting [F(2.75, 38.45) = 27.15, p < .001].
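To make the Greenhouse-Geisser correction concrete: for a within-subject factor with k levels, the estimate ε (between 1/(k − 1) and 1) scales both degrees of freedom of the F test, giving ε(k − 1) and ε(k − 1)(n − 1) for n participants. The reported values are consistent with n = 15: 33.46/2.38 ≈ 14.1 and 38.45/2.75 ≈ 14.0, both close to n − 1 = 14. The sketch below computes ε from the covariance matrix of the factor levels for a single within-subject factor; it is illustrative only and not the statistics software used in the study.

```python
def covariance_matrix(data):
    """Sample covariance (n - 1 denominator) between the k columns of an n x k table
    (rows = participants, columns = levels of the within-subject factor)."""
    n, k = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(k)]
    return [[sum((row[a] - means[a]) * (row[b] - means[b]) for row in data) / (n - 1)
             for b in range(k)] for a in range(k)]

def gg_epsilon(cov):
    """Greenhouse-Geisser epsilon from the k x k covariance matrix of the levels."""
    k = len(cov)
    row_means = [sum(row) / k for row in cov]
    col_means = [sum(cov[i][j] for i in range(k)) / k for j in range(k)]
    grand_mean = sum(row_means) / k
    # Double-centre the matrix; its nonzero eigenvalues match those of the
    # covariance of any orthonormal set of k - 1 contrasts.
    dc = [[cov[i][j] - row_means[i] - col_means[j] + grand_mean for j in range(k)]
          for i in range(k)]
    trace = sum(dc[i][i] for i in range(k))
    trace_sq = sum(v * v for row in dc for v in row)  # trace(dc @ dc); dc is symmetric
    return trace ** 2 / ((k - 1) * trace_sq)

def corrected_df(epsilon, k, n):
    """Greenhouse-Geisser-corrected (numerator, denominator) degrees of freedom."""
    return epsilon * (k - 1), epsilon * (k - 1) * (n - 1)

# Usage: a spherical covariance gives epsilon = 1 (no correction);
# all variance in a single contrast gives the lower bound 1 / (k - 1).
print(gg_epsilon([[1, 0, 0], [0, 1, 0], [0, 0, 1]]))
print(gg_epsilon([[1, -1, 0], [-1, 1, 0], [0, 0, 0]]))   # 0.5
print(corrected_df(0.5, 3, 15))                          # (1.0, 14.0)
```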

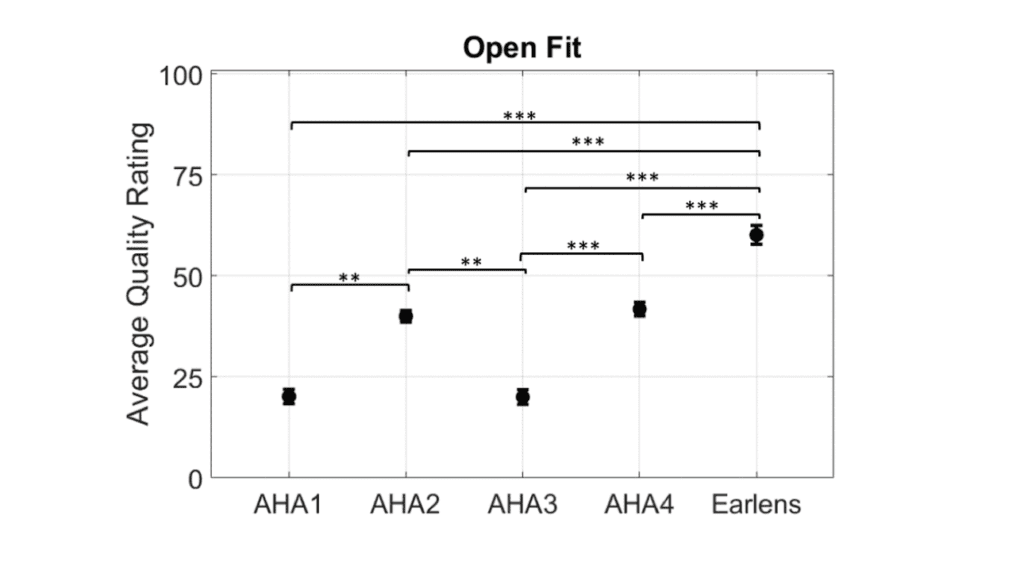

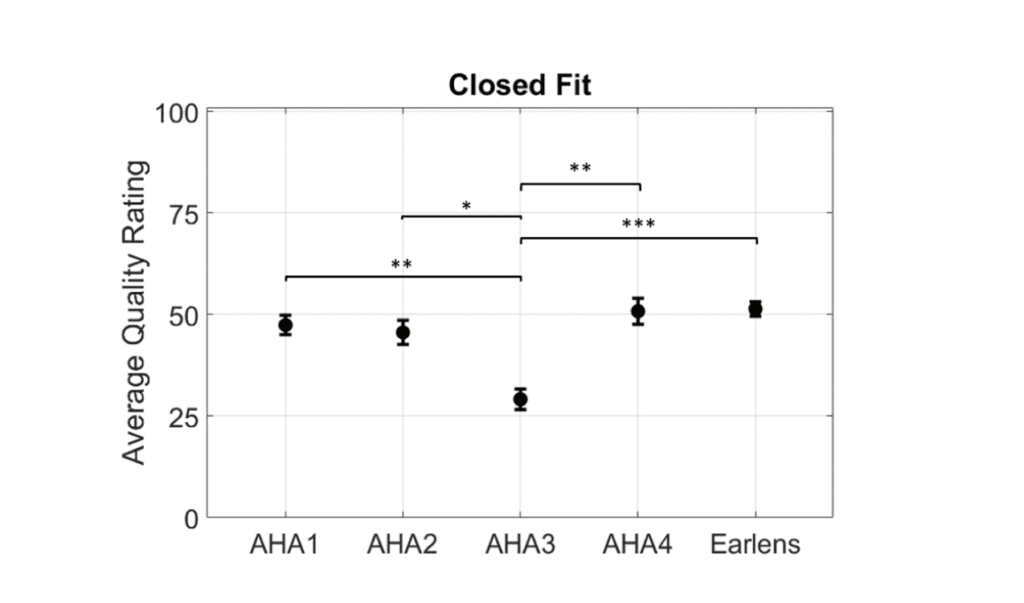

Overall, the Earlens CHA was rated as having superior sound quality compared with all four AHAs in the open-fit condition (Figure 2). Pairwise comparisons also revealed sound-quality differences among the AHAs. In the closed-fit condition, however, the Earlens CHA was not rated superior overall to the AHA devices (Figure 3), and some AHA devices received significantly better ratings than others.

Note: *** p < .001, ** p < .01, * p < .05

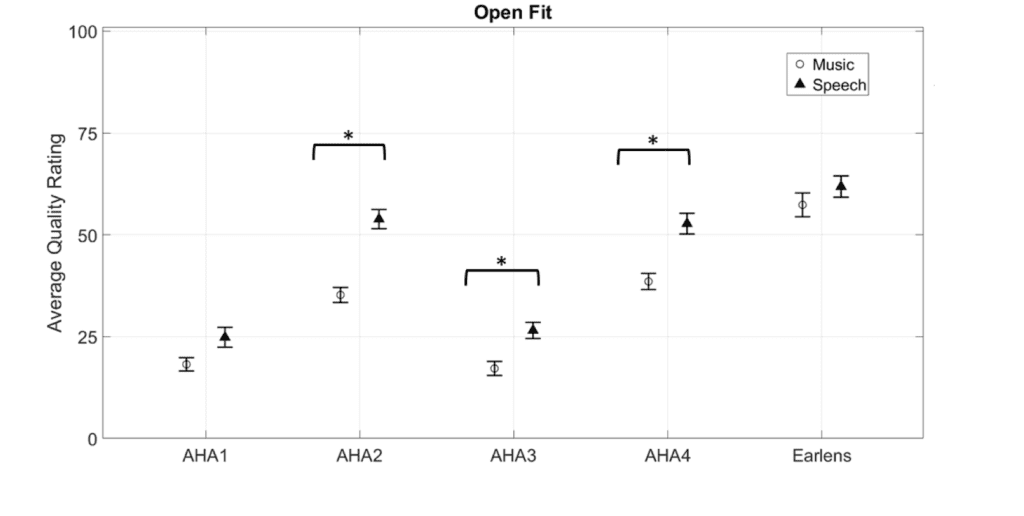

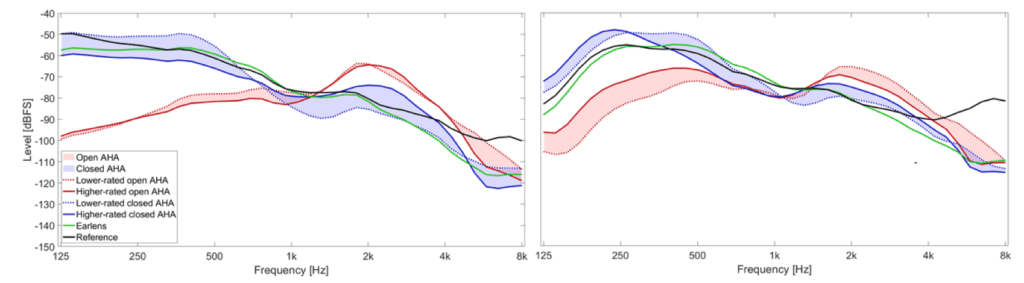

We were also interested in whether participants rated speech and music samples similarly across hearing aids, because some hearing aids may apply different frequency shaping to speech and music. Interestingly, pairwise comparisons showed that the CHA received similar, consistently higher ratings for both speech and music samples. Ratings for AHA2, AHA3, and AHA4 differed between speech and music samples, especially in the open-fit setting (Figure 4). To explain these differences, we calculated the long-term average spectra (LTSA, smoothed to 1/3-octave bands) of the highest- and lowest-rated AHAs across fitting types for two samples (a hip hop/electronic music sample and a female speech sample), and compared these spectra with each other and with that of the CHA (Figure 5).
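The spectral smoothing step can be sketched as below. The sketch assumes base-2 one-third-octave bands centered on 1 kHz and a power spectrum already computed elsewhere (for example, by averaging FFT frames over each recording); it averages the power within each band, which is one common way to smooth a long-term spectrum, and is not necessarily the exact procedure used in the study.

```python
import math

def third_octave_centers(f_lo=100.0, f_hi=10000.0, f_ref=1000.0):
    """Base-2 one-third-octave centre frequencies f_ref * 2**(n/3) within [f_lo, f_hi]."""
    n_lo = math.ceil(3 * math.log2(f_lo / f_ref))
    n_hi = math.floor(3 * math.log2(f_hi / f_ref))
    return [f_ref * 2 ** (n / 3) for n in range(n_lo, n_hi + 1)]

def smooth_to_bands(freqs_hz, power, centers):
    """Mean power of the spectral bins in each band, in dB (None if a band is empty)."""
    levels_db = []
    for fc in centers:
        lo, hi = fc * 2 ** (-1 / 6), fc * 2 ** (1 / 6)   # band edges
        in_band = [p for f, p in zip(freqs_hz, power) if lo <= f < hi]
        if in_band and sum(in_band) > 0:
            levels_db.append(10 * math.log10(sum(in_band) / len(in_band)))
        else:
            levels_db.append(None)
    return levels_db

# Usage with a synthetic spectrum: 1 Hz bins whose power falls as 1/f ("pink").
freqs = [float(f) for f in range(1, 20001)]
power = [1.0 / f for f in freqs]
centers = third_octave_centers(125.0, 8000.0)
levels = smooth_to_bands(freqs, power, centers)
for fc, level in zip(centers, levels):
    print(f"{fc:7.1f} Hz  {level:6.1f} dB")
```

Applied to two recordings of the same excerpt, the band levels can then be compared band by band, which is how differences in low-frequency representation between devices would show up.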

As the left panel of Figure 5 shows, music samples with better low-frequency representation were rated higher by participants. This is consistent with Franks (1982), who showed that both normal-hearing and hearing-impaired listeners preferred the inclusion of more low-frequency components when listening to music. Greater low-frequency representation in the closed-fit setting also explains why listeners rated the closed-fit versions of the stimuli similarly across hearing aids.

Perceptual quality of speech and music can be characterized along dimensions such as clarity, fullness, brightness, sharpness, and spaciousness.12 Normal-hearing listeners rate clarity and nearness as important for speech quality, whereas fullness and spaciousness are important attributes for music.13 Fullness has further been shown to be favored by a broad frequency range with more emphasis on low frequencies, whereas clarity, spaciousness, and nearness are favored by a broad frequency range with more emphasis on mid-high to high frequencies.14 Overall, a broad frequency range appears to be important for the perceived quality of both speech and music. With its direct-drive technology and widely vented, open fit, the Earlens CHA offers a broad frequency range for music and speech listening, which likely accounts for its higher quality ratings across music and speech stimuli.

High-fidelity sound quality is of great importance to hearing aid wearers.15 Using an industry-standard experimental paradigm (MUSHRA) and a popular fitting condition (open-fit dome), the present study showed that the Earlens CHA provided significantly higher streamed audio quality than the four premium acoustic hearing aids tested.

Pragati Rao Mandikal Vasuki, PhD, is a Hearing Scientist and Research Audiologist for Earlens Corporation in Menlo Park, Calif. Elizabeth Eskridge-Mitchell, AuD, is a Senior Research Audiologist at Earlens Corporation in Menlo Park, Calif. Drew Dundas, PhD, is the Vice President of Audiology and Product Strategy at Earlens Corporation in Menlo Park, Calif.

References

1. Lee, D. Google is developing native hearing aid support for Android. The Verge. https://www.theverge.com/circuitbreaker/2018/8/16/17701902/google-native-hearing-aid-support-android-gn-hearing. Published August 16, 2018.

2. National Institute on Deafness and Other Communication Disorders (NIDCD). Quick Statistics about Hearing. https://www.nidcd.nih.gov/health/statistics/quick-statistics-hearing. Updated December 15, 2016.

3. Ramsgaard J, Korhonen P, Brown TK, Kuk F. Wireless streaming: Sound quality comparison among MFi hearing aids. Hearing Review. 2016;23(12):36.

4. Struck CJ, Prusick L. Comparison of real-world bandwidth in hearing aids vs Earlens light-driven hearing aid system. Hearing Review. 2017;24(3):24-29.

5. Arbogast TL, Moore BCJ, Puria S. Achieved gain and subjective outcomes for a wide-bandwidth contact hearing aid fitted using CAM2. Ear and Hearing. 2019;40(3):741-756.

6. Moore BCJ, Tan CT. Perceived naturalness of spectrally distorted speech and music. J. Acoust. Soc. Am. 2003;114(1):408.

7. International Telecommunication Union-ITU-R. Recommendation ITU-R BS.1534-3: Method for the subjective assessment of intermediate quality level of audio systems. https://www.itu.int/dms_pubrec/itu-r/rec/bs/R-REC-BS.1534-3-201510-I!!PDF-E.pdf. Published 2015.

8. Marston D, Mason A. Cascaded audio coding. EBU Technical Review. Published October 2005.

9. Hines A, Gillen E, Harte N, Kelly D, Skoglund J, Kokaram A. Perceived audio quality for streaming stereo music. Paper presented at: 2014 ACM Multimedia Conference; November, 2014; Orlando, Florida.

10. Vaisberg J, Folkeard P, Parsa V, et al. Comparison of music sound quality between hearing aids and music programs. Audiology Online. https://www.audiologyonline.com/articles/comparison-music-sound-quality-between-20872. Article 20872. Published August 17, 2017.

11. Glasberg BR, Moore BCJ. A model of loudness applicable to time-varying sounds. J. Audio Eng. Soc. 2002;50(5):331-342.

12. Gabrielsson A, Sjögren H. Perceived sound quality of sound‐reproducing systems. J. Acoust. Soc. Am. 1979;65(4):1019.

13. Gabrielsson A, Schenkman BN, Hagerman B. The effects of different frequency responses on sound quality judgments and speech intelligibility. J. Speech Hear. Res. 1988;31(2):166-177.

14. Gabrielsson A, Hagerman B, Bech‐Kristensen T, Lundberg G. Perceived sound quality of reproductions with different frequency responses and sound levels. J. Acoust. Soc. Am. 1990;88(3):1359.

15. Kochkin S. MarkeTrak VI: 10-year customer satisfaction trends in the US hearing instrument market. Hearing Review. 2002.