Back to Basics | November 2017 Hearing Review

We tend to be biased, both in our training and in the technologies we use. We tend to look at things in terms of spectra or frequencies. Phrases such as “bandwidth” and “long-term average speech spectrum” show this bias. The long-term average speech spectrum, which is averaged over time, is indeed a broad bandwidth spectrum made up of lower frequency vowels (sonorants) and higher frequency consonants, but this is actually quite misleading.

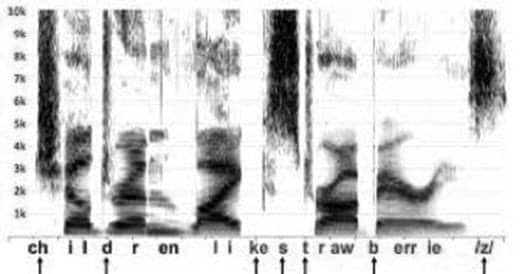

At any point in time, speech is either low-frequency or high-frequency, but not both. A speech utterance over time may be a low-frequency vowel, then a high-frequency consonant, then something else, but speech will never have both low-frequency emphasis and high-frequency emphasis at any one point in time. Most vowels and nasals have low energy levels by the time the third formant occurs, and have most of their energy below 2500 Hz; consonants have most of their energy above 2500 Hz.

Figure 1. At any one point in time speech is either low-frequency sonorant (vowels and nasals) OR high-frequency obstruent (stops, fricatives, and affricates) but NEVER both at the same time. In contrast, music is ALWAYS low-frequency fundamental AND high-frequency harmonics.

Speech is sequential. One speech segment follows another in time, but we can never have vowels and consonants occurring at the same time. In contrast, music is broadband and very rarely narrow band. Additionally, music is always concurrent—low-frequency fundamentals or tonics are always associated with higher frequency harmonics at the very same time.

With the exception of percussion sounds, music is made up of a low-frequency fundamental note (or tonic) and then a series of progressively higher frequency harmonics whose amplitudes and exact frequency location define the timbre, and help with the pitch. At any one point in time, it is impossible for music to be a narrow band signal.
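The harmonic structure described above can be made concrete with a small sketch. It lists the integer-multiple harmonics of a single note and splits them at the 2500 Hz boundary used in this article. The choice of A4 (440 Hz) as the note, the 12,000 Hz upper limit, and the function names are illustrative assumptions, not values or methods taken from the article.

```python
# A minimal sketch, assuming an idealized note whose partials are exact
# integer multiples of the fundamental (real instruments deviate slightly).

def harmonic_series(fundamental_hz, max_hz=12000.0):
    """Return the harmonic frequencies n * fundamental_hz that do not exceed max_hz."""
    harmonics = []
    n = 1
    while n * fundamental_hz <= max_hz:
        harmonics.append(n * fundamental_hz)
        n += 1
    return harmonics


def split_at(freqs, boundary_hz=2500.0):
    """Split a list of frequencies into those below and those at or above boundary_hz."""
    low = [f for f in freqs if f < boundary_hz]
    high = [f for f in freqs if f >= boundary_hz]
    return low, high


# Assumed example note: A4 at 440 Hz.
a4 = harmonic_series(440.0)
low, high = split_at(a4)

print("partials below 2500 Hz:", low)
print("number of partials at or above 2500 Hz:", len(high))
```

Even for this one note, the partials fall on both sides of 2500 Hz at the same instant, which is the sense in which a musical note is a wideband signal.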

It is actually a paradox that: 1) Hearing aids have one receiver that needs to have similar efficiency at both the high-frequency and the low-frequency regions, and 2) Musicians “prefer” in-ear monitors and earphones that have more than one receiver. If anything, it should be the opposite. I would suspect that the musicians’ preference for more receivers (and drivers) is a marketing element where “more” may be perceived as “better.”

Musicians should be wearing in-ear monitors (or hearing aids) with a single receiver, a single microphone, and a single-bandwidth driver. This will ensure that what is generated in the lower frequency region (the fundamental or tonic) will have a well-defined amplitude (and frequency) relationship with the higher frequency harmonics. This can only be achieved with a truly single-channel system, a “less-is-more solution”: a technology which no longer exists but whose time, perhaps, has returned.

This same set of constraints does not hold for speech. If speech contains a vowel or nasal (collectively called a sonorant), it is true that there are well-defined harmonics that generate a series of resonances or formants. However, for good speech intelligibility, one only needs to have energy up to about 3500 Hz (indeed, telephones only carry information up to 3500 Hz). If speech contains a sibilant consonant, also known as an obstruent (‘s’, ‘sh’, ‘th’, ‘f’, …), there are no harmonics and there is minimal sound energy below 2500 Hz. Sibilant consonants can extend beyond 12,000 Hz, but never have energy below 2500 Hz. Speech is either a low-frequency sonorant (with well-defined harmonics) or a high-frequency obstruent (no harmonics); at any one point in time it is one or the other, never both. In contrast, music must have both low- and high-frequency harmonics, and the exact frequencies and amplitudes of the harmonics provide much of the definition to music.

Table 1. A summary of the similarities and differences between speech and music. Frequency transposition can work well with speech because it merely lowers some of the high-frequency frication that is spread over a wide range (eg, ‘s’), whereas it cannot work on music because any alteration in the high-frequency narrow band harmonics can change the pitch and the timbre. At any one point in time, speech is narrowband but music is always wideband.

This also has ramifications for the use of frequency transposition or shifting. It makes perfect sense to use a form of frequency transposition or shifting for speech. This alters the high-frequency bands of speech where no harmonics exist. Moving a band of speech (eg, ‘s’) to a slightly lower frequency region will not alter any of the harmonic relationships. However, for music, which is defined only by harmonic relationships in both the lower and the higher frequency regions, frequency transposition or shifting will alter these higher frequency harmonics.
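A short worked example may help show why lowering the upper harmonics disturbs music. The sketch below takes the first eight harmonics of an assumed 440 Hz note and lowers every component at or above 2500 Hz by a fixed 500 Hz. This fixed-offset shift, the cutoff, the offset, and the function names are all hypothetical simplifications chosen for illustration; they are not the algorithm of any particular hearing aid.

```python
# A minimal sketch, assuming a simple fixed-offset frequency shift.
# Commercial frequency-lowering schemes differ in detail.

def shift_above(freqs, cutoff_hz, shift_hz):
    """Lower every component at or above cutoff_hz by shift_hz; leave the rest unchanged."""
    return [f - shift_hz if f >= cutoff_hz else f for f in freqs]


def harmonic_numbers(freqs, fundamental_hz):
    """Express each frequency as a multiple of the fundamental."""
    return [f / fundamental_hz for f in freqs]


fundamental = 440.0                                  # assumed note (A4)
partials = [fundamental * n for n in range(1, 9)]    # 440 Hz up to 3520 Hz

shifted = shift_above(partials, cutoff_hz=2500.0, shift_hz=500.0)
ratios = harmonic_numbers(shifted, fundamental)

# A component is still a harmonic only if it sits on an integer multiple.
is_harmonic = [abs(r - round(r)) < 1e-9 for r in ratios]

for original, new, ok in zip(partials, shifted, is_harmonic):
    print(f"{original:7.1f} Hz -> {new:7.1f} Hz  still harmonic: {ok}")
```

The five partials below the cutoff stay on integer multiples of 440 Hz, while the three that are moved (2640, 3080, and 3520 Hz) land at 2140, 2580, and 3020 Hz, none of which is a multiple of the fundamental. A band of frication noise such as ‘s’ has no such integer relationships to lose, which is why the same operation is far less damaging for speech.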

Clinically, for a music program, if there are sufficient dead cochlear regions or severe sensory damage, reducing the gain in a frequency region is the correct approach, rather than changing the frequency for a small group of harmonics.

Adapted by the author from: http://hearinghealthmatters.org/hearthemusic/2017/speech-not-broadband-signal-music/ (Oct. 10, 2017).

Original citation for this article: Chasin M. Speech is not a broadband signal…but music is. Hearing Review. 2017;24(11):10.

It is important to understand the fact that speech and music are two separate things that hold their own importance in our life. One is important to convey our thoughts to the world whereas the other is related to songs and speaking out your heart. In addition to this, some people consider music as an escape from the worldly matters to a place where there are no worries and no tensions.

Speech in its literal meaning is the act of representing thoughts through articulate sound, but a more direct approach would be to affiliate it with the sound produced when we communicate with our vocal cords. If that is the case, then what about beat boxing, where talented individuals make multiple sounds using their larynx, aka ‘voice box’? Plus, even everyday speech has high- and low-frequency differentiation; the way Cardi B. speaks is one example of it.

I understand that listening to every single beat separately offers its own intrinsic benefit to some listeners, but there is no doubt that it is the sum of the whole that brings about its final beauty. The well-mixed amalgam of all the individual elements contributing to a finished product is what ultimately makes it more appreciable. In short, I find that this article simply shows how important multitasking is nowadays.

With regard to the limits, or negative impact, on music when using frequency lowering, would a “cut and paste” scheme or a “copy and paste” scheme be equally problematic?