Tech Topic | January 2023 Hearing Review

Focusing on cognition and brain function, a new interdisciplinary field of Cognitive Audiology emerged recently, representing the intersection of clinicians, speech and hearing scientists, and cognitive psychologists.

By Carolyn J. Herbert, AuD; and David B. Pisoni, PhD

The conventional approach to assessing hearing in clinical audiology focuses on measuring audibility as a necessary prerequisite for successful speech recognition. While the typical clinical assessment protocol includes pure-tone and speech audiometry followed by interventions to improve audibility in the form of amplification and/or cochlear implantation, measures of audition alone do not provide an adequate reflection of functional speech recognition and listening ability in everyday, real-world environments. Measures of audibility are not sufficient to account for the robust nature of human speech recognition and language comprehension under a wide range of adverse and challenging listening conditions. Additional cognitive information processing operations and encoding of speech into phonological and lexical representations in memory are necessary for robust spoken word recognition and language understanding.

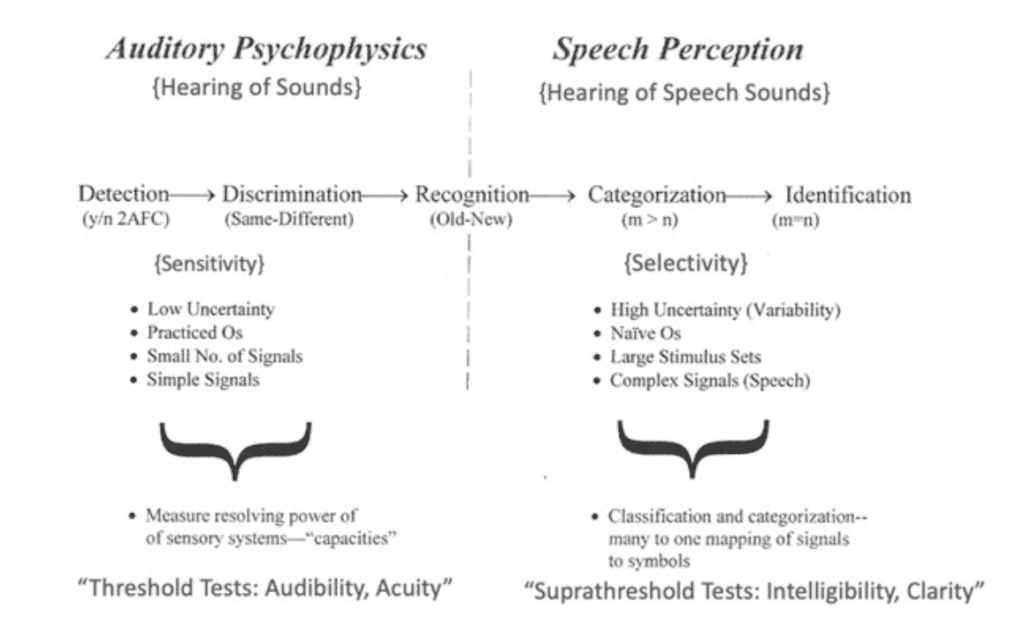

Figure 1 illustrates the relations between auditory psychophysics (hearing of sounds) and speech perception (hearing of speech). As shown on the left, hearing and audibility are typically assessed with threshold tests involving the detection and discrimination of simple non-speech signals (pure tones), using low-uncertainty tasks with highly practiced observers to obtain measures of audibility that reflect the resolving power, or auditory capacity and acuity, of hearing. In contrast, as shown on the right, speech perception and hearing of speech are routinely assessed using supra-threshold tests of selectivity that involve recognition, classification, categorization, and identification of speech and speech-like signals. These measures of speech perception are designed to measure an observer’s clarity of speech recognition using high-uncertainty open-set tests with naive, untrained listeners.

Whereas use of the clinical pure-tone audiogram may provide objective guidance for improving a listener’s audibility by measuring detection thresholds, the conventional audiogram does not provide detailed insights into the contribution of the other neural and cognitive processes needed for robust speech recognition and language understanding. Consider how often two patients with similar audiograms may differ in their speech-recognition outcomes following intervention, particularly after cochlear implantation.1,2 With highly variable outcomes, researchers have been working to elucidate the contribution of the central auditory system and higher-order cognitive and linguistic processes to improve speech recognition outcomes.3-5 Focusing on cognition and brain function, a new interdisciplinary field of Cognitive Audiology emerged recently, representing the intersection of clinicians, speech and hearing scientists, and cognitive psychologists.6-8 The core foundation of this collaboration is the information processing approach to cognition, which studies complex processes such as perception, attention, memory, learning, and thought, providing new insights into what is necessary for robust speech recognition and spoken language processing.

The shift from treating speech perception as a direct reflection of hearing and audibility to viewing it as a form of information processing, in which ear and brain work together to recover the talker’s intended linguistic message, is one of the most important developments in the field of speech perception today, with direct implications for clinical audiology.9 For clinicians interested in learning more about the emerging field of cognitive audiology, the information processing approach can be used to help explain and understand not only the information-processing system as a whole, but also the strengths and weaknesses of individual listeners in complex, challenging listening environments in the real world.

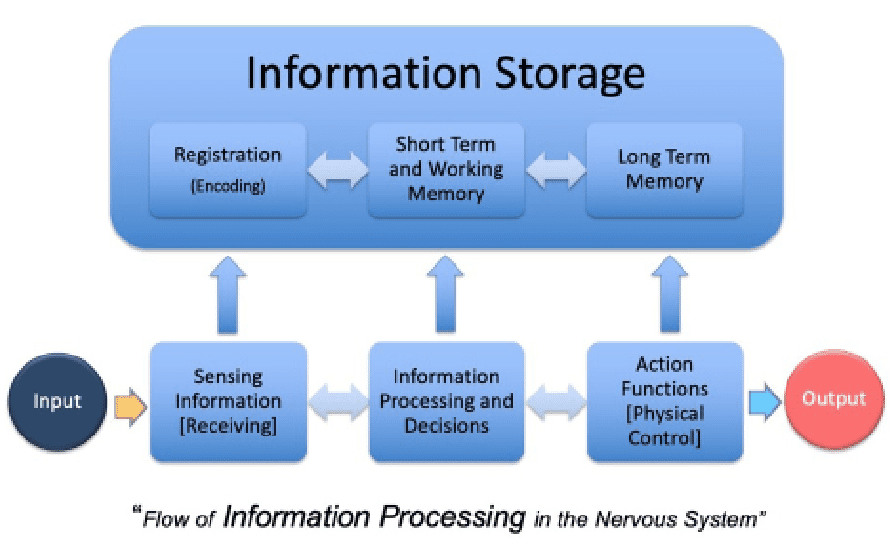

To better understand speech perception, which includes processes such as sensory encoding, recognition, identification, and perceptual memory, it has become necessary to acknowledge that sensation, perception, and memory are tightly interconnected processes that reflect the operation of the whole information processing system and neural architecture working together.9,10 After all, hearing and speech perception do not function as independent, autonomous streams of information or discrete processes that take place in isolation from the structure and functioning of the whole information-processing system (see Figure 2). Thus, problems related to hearing and hearing loss are also problems that involve cognition, information processing, and brain function, reflecting the response of the entire information processing system, not just the output of the auditory system disembodied from the rest of the system.11,12 As Carol Flexer proposed a few years ago, “hearing loss is primarily a brain issue, not an ear issue.”13 We believe this is a valuable mantra to keep in mind.

Figure 2 shows a block diagram of the flow of information in the nervous system based on ideas developed in systems theory from human factors engineering and design14 and the human information processing approach in cognitive psychology.10,15 As shown in this figure, information storage and retrieval are assumed to play major roles in all aspects of information processing from input to output. Information processing involves four basic functions: (1) sensing and receiving information as input and sensory evidence, (2) information storage, encoding, and registration in sensory, short-term, and long-term memory, (3) information processing and decision-making in working memory, and (4) action and output functions that control manual responses like a button press or speech motor functions used to control speech articulation in repeating back words and sentences. An important component of this approach to human information processing is the set of “control processes,” processes that an observer can initiate and control that govern the content and flow of information in the system, such as attention, coding, verbal rehearsal, memory search and retrieval, and response execution and output.16
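For readers who find a concrete toy helpful, the four functions above can be loosely sketched in a few lines of Python. This is only an illustration under strong simplifying assumptions, not a model proposed in the literature cited here: the class name `ToyListener`, the `lexicon` standing in for long-term memory, and the `stm_capacity` parameter are all hypothetical, and treating a typed sentence as the "signal" ignores everything about real acoustics.

```python
from collections import deque


class ToyListener:
    """Illustrative only: a toy stand-in for the four functions in the text."""

    def __init__(self, lexicon, stm_capacity=4):
        # Hypothetical long-term memory: the set of words the listener knows.
        self.lexicon = {word.lower() for word in lexicon}
        # Hypothetical short-term store; a bounded deque silently drops the
        # oldest item when full, a crude stand-in for limited capacity.
        self.short_term = deque(maxlen=stm_capacity)

    def sense(self, signal):
        # (1) Sensing: break the incoming "signal" into word-sized units.
        return signal.lower().split()

    def store(self, units):
        # (2) Storage: register each unit in short-term memory.
        for unit in units:
            self.short_term.append(unit)

    def decide(self):
        # (3) Processing and decision: keep units that match long-term memory.
        return [unit for unit in self.short_term if unit in self.lexicon]

    def respond(self, signal):
        # (4) Output: repeat back whatever was recognized.
        self.short_term.clear()
        self.store(self.sense(signal))
        return " ".join(self.decide())


listener = ToyListener(lexicon=["the", "cat", "sat", "on", "mat"], stm_capacity=4)
repeated = listener.respond("The cat sat on the mat")
```

With a capacity of four items, the first two words of the six-word sentence are displaced before the decision stage, so `repeated` holds only the last four words. Raising `stm_capacity` to six lets the whole sentence through, which is the sense in which storage limits, and not only audibility, can shape the response.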

Although speech recognition and spoken language processing seem practically seamless in a listener with normal hearing, the process of speech perception has a time-course during which the information in the acoustic signal is processed by the ear and the brain. The initial sensory information in the signal from the ear is transformed, reduced, and elaborated, and then brought into contact with prior information and linguistic knowledge in long-term memory. The synthesis of these two streams of information (combining up-stream sensory coding and down-stream predictive processing) is then used to carry out an information processing task or accomplish a specific goal, such as recognizing words or comprehending the talker’s intended message. According to this view, a human listener can be thought of as an input-output device that consists of elementary subsystems that encode, store, process, retrieve, and use information in specific tasks.14,16 These core subsystems, which include sensory memory, short-term/working memory, and long-term memory, are considered to be the fundamental building blocks of cognition providing the foundational infrastructure that supports robust speech recognition and spoken language processing under a wide range of adverse and challenging conditions.

The information processing approach to cognition was developed by cognitive psychologists in the late 1960s and is based on three major theoretical assumptions that can be applied directly to problems in speech recognition and spoken language processing.10,15 First, the perception of speech is not immediate. Information processing, including the neural coding of the filtered signal through the auditory nerve to the brain to interface with previous experience, takes time. Second, information being processed is constrained by the inherent limited capacity of the processing system, i.e., not all information coming into the system can be processed simultaneously. Third, all aspects of cognitive processing – ranging from sensation to perception to learning and complex thought – involve attention, memory storage, and neurocognitive control processes that encode, store, and process selected aspects of the initial sensory stimulation.10,15

Each of the three assumptions of the information processing approach to cognition identifies a domain that is susceptible to communication breakdown. Because speech perception is not immediate, the speed at which the information is processed may vary between listeners. If the signal is unable to make contact with previous experience and knowledge in long-term memory, comprehension and language understanding may be significantly compromised. Because the capacity of the information processing system is limited, we may see substantial variability in listening effort and/or speech perception in other adverse listening conditions, such as listening to multiple talkers, listening to speech in noise or multi-talker babble, or listening to non-native speech.17 The limited capacity of the system also becomes a significant factor when listening effort is increased by poor acoustic quality or decreased audibility.18

Clinical audiology has relied heavily on endpoint, or product, measures of speech recognition to assess patient outcomes, particularly following amplification with hearing aids or cochlear implants.2 Endpoint or product measures like monosyllabic speech recognition scores provide a global picture of the complete information processing system, but these measures do so without reflecting the underlying intermediate processing operations carried out by the system’s subdomains. In contrast, process measures that reflect the integrity of core sub-processes that operate during speech recognition may be an area for future research and intervention by the field of cognitive audiology. Examples of process measures used in cognitive research include measures of working memory capacity, inhibitory control, scanning and retrieval from short-term memory, verbal rehearsal speed, speed of lexical/phonological access, and nonverbal fluid reasoning.2 These additional process measures seek to explain rather than just describe speech recognition and perception by measuring more complex, higher-level processing operations of the system such as speech comprehension and language understanding rather than only audibility and word recognition accuracy.
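The distinction can be made concrete with a small, hedged sketch. The Python below scores a word list as percent correct, the kind of endpoint score described above, and sets it beside a mean response latency used here purely as a stand-in for a process-type measure. The function names, the word list, and all of the numbers are invented for illustration; they are not data from any study, and response latency is our assumption rather than one of the specific process measures listed above.

```python
from statistics import mean


def percent_words_correct(targets, responses):
    """Product-style score: percent of target words repeated correctly."""
    if not targets or len(targets) != len(responses):
        raise ValueError("targets and responses must be non-empty and equal in length")
    correct = sum(
        target.strip().lower() == response.strip().lower()
        for target, response in zip(targets, responses)
    )
    return 100.0 * correct / len(targets)


def mean_latency_ms(latencies_ms):
    """Hypothetical process-style summary: mean time to respond, in ms."""
    return mean(latencies_ms)


# Invented example: two listeners repeat the same four words.
targets = ["boat", "chair", "dime", "goose"]
listener_a = {"responses": ["boat", "chair", "time", "goose"],
              "latencies_ms": [620, 650, 700, 640]}
listener_b = {"responses": ["boat", "chair", "dime", "moose"],
              "latencies_ms": [980, 1010, 1150, 1060]}

score_a = percent_words_correct(targets, listener_a["responses"])
score_b = percent_words_correct(targets, listener_b["responses"])
latency_a = mean_latency_ms(listener_a["latencies_ms"])
latency_b = mean_latency_ms(listener_b["latencies_ms"])
```

In this made-up case both listeners miss one word in four, so the product score cannot separate them, while the second listener takes considerably longer to respond. That gap is the kind of information a process measure is meant to expose.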

Because process measures help explain the functioning and operations of the information processing system, particularly the intermediate subcomponents, they can reveal additional factors that may significantly impact a patient’s speech recognition in everyday listening environments that are not measured clinically by conventional word recognition scores alone. The clinical audiologist can also use knowledge gained from the information processing approach to improve service quality by allowing the processing in each cognitive subdomain to guide intervention that capitalizes on the patient’s perspective. This shift in focus beyond measures of audibility alone may help clinicians adopt patient-centered measures that assess the patient’s cognitive strengths, weaknesses, and milestones relative to normative scores. Further research and development of a diagnostic battery of measures is necessary for identifying these strengths and weaknesses for further intervention and/or auditory rehabilitation.

In cognitive audiology, auditory rehabilitation not only includes improving audibility, but may also include processing strategies that improve attention, inhibition, cognitive control, and working memory, reduce stress, and modify listening behaviors and environments, as well as socio-emotional domains that are known to influence communication success.19 While the traditional clinical approach to audibility can obviously aid in speech recognition, the novel approach of exploring the additional information processing domains proposed by cognitive audiology may allow for more areas of intervention and auditory rehabilitation by acknowledging and recognizing the close links between the ear and brain.13 Because clinical services can be limited by time and financial constraints, further consideration of a diagnostic inventory is necessary to guide individualized intervention effectively and efficiently. Such intervention would address specific weaknesses with appropriate communication strategies to supplement audibility, or with additional support from an interdisciplinary team, to help provide richer and more fulfilling communication.

This shift in perspective from focus on the ear to the brain is also consistent with the shift from viewing speech as an auditory signal to acknowledging that speech is a multisensory and multimodal event.20-22 Although the benefit of visual information in speech recognition has been well-documented for some time,23-25 previous research did not address the significant benefits of visual information to speech recognition with degraded auditory signals resulting from hearing loss. Although speech audibility is one of the core modalities of speech, it is only one stream of information integrated with other sensory modalities of speech (i.e., visual information processing) for effective speech recognition.20,26,27

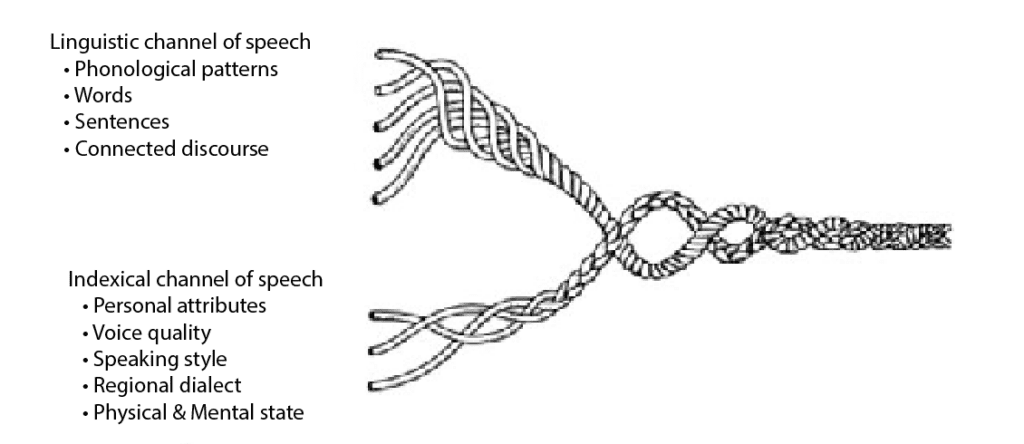

The information processing approach to cognition has stimulated additional directions for novel research on hearing and hearing loss. Further work in three areas may be of interest. First, additional research devoted to assessing the recognition of indexical properties of speech signals would provide more information about the processing of “extralinguistic” properties of speech signals that convey important information about the speaker’s personal identity, gender, regional dialect, and physical and mental states.28,29 Figure 3 is a diagram of the “rope analogy of speech” first proposed by Abercrombie (1967) that illustrates the encoding of two channels of information in speech: (1) the “linguistic” channel that encodes and carries information about the sound patterns, words, and sentences of the language (top) and (2) the “indexical” or “extralinguistic” channel that encodes information about the vocal sound source (bottom), that is, the talker’s voice, which captures the interactions between these two parallel channels of information in the speech signal. The linguistic and indexical channels of speech are closely intertwined in the production of speech, and numerous speech perception studies have established that these two parallel channels, streams, or pathways of information are also processed together by listeners in speech perception and spoken word recognition.30-32 Perception of indexical properties of speech has also been found to be closely linked to processing of the linguistic attributes of speech.33,34 Future development of perceptually robust speech perception tests that assess indexical properties of speech may be useful clinically; some efforts have already been made in this direction with the development of PRESTO, the Perceptually-Robust English Sentence Test – Open Set sentence recognition test.35,36

Second, assessments of the perceptual learning and adaptation that occur when a listener becomes familiar with the voice of a novel talker would expand our current understanding of speech perception and the information processing approach. Numerous studies have uncovered close interactions and processing dependencies between the processing of the indexical properties of speech associated with the talker’s voice and the linguistic analysis of the speech used in recognizing words and understanding sentences, suggesting that speech perception may be carried out in a “talker-contingent” manner.37-39

Third, research on mental workload and listening effort using novel techniques like pupillometry in real-time listening scenarios may provide further insights into the effective use of the full capacity of the information processing system. Cognitive activity indexed by pupil dilation reflects processing operations beyond audition alone.40 Additional work on novel process measures of mental workload may reflect not only the product measure of speech recognition accuracy, but also the processing mechanisms and effort used to complete the task.40,41

Future research investigating the information processing approach, its domains, and other novel neurocognitive measures should provide a solid foundation for more personalized clinical assessments and interventions. This foundation can support audibility alongside cognition and the common processes that work together as part of a complex information-processing system that supports speech recognition and robust spoken language processing: cognitive audiology.

Citation for this article: Herbert CJ, Pisoni DB. Information processing and cognitive audiology. Hearing Review. 2023;30(1):20-23.

References

1. Moberly AC, Bates C, Harris MS, Pisoni DB. The enigma of poor performance by adults with cochlear implants. Otol Neurotol. 2016;37(10):1522-1528.

2. Moberly AC, Castellanos I, Vasil KJ, Adunka OF, Pisoni DB. “Product” versus “process” measures in assessing speech recognition outcomes in adults with cochlear implants. Otol Neurotol. 2018;39(3):e195-e202.

3. Appler JM, Goodrich LV. Connecting the ear to the brain: Molecular mechanisms of auditory circuit assembly. Prog Neurobiol. 2011;93(4):488-508.

4. Eggermont J, ed. Hearing Loss: Causes, Prevention, and Treatment. Academic Press; 2017: 71-90.

5. Hockley A, Wu C, Shore SE. Olivocochlear projections contribute to superior intensity coding in cochlear nucleus small cells. J Physiol. 2022;600(1):61-73.

6. Pichora-Fuller MK, Kramer SE, Eckert MA, et al. Hearing impairment and cognitive energy: The Framework for Understanding Effortful Listening (FUEL). Ear Hear. 2016;37(Suppl 1):5S-27S.

7. Rönnberg J, Rudner M, Lunner T. Cognitive hearing science: The legacy of Stuart Gatehouse. Trends in Amplification. 2011;15(3):140-148.

8. Pisoni DB. Cognitive audiology. In: Pardo JS, Nygaard LC, Remez RE, Pisoni DB, eds. The Handbook of Speech Perception. 2nd ed. John Wiley & Sons, Inc; 2021: 697-732.

9. Obleser J, Wise RJS, Dresner MA, Scott SK. Functional integration across brain regions improves speech perception under adverse listening conditions. J Neurosci. 2007;27(9):2283-2289.

10. Haber RN. Information-processing Approaches to Visual Perception. Holt, Rinehart and Winston; 1969.

11. Wilson M. Six views of embodied cognition. Psychonomic Bulletin & Review. 2002;9:625-636.

12. Clark A. Supersizing the Mind: Embodiment, Action, and Cognitive Extension. Oxford University Press; 2008.

13. Flexer C. Cochlear implants and neuroplasticity: Linking auditory exposure and practice. Cochlear Implants Int. 2011;12(Suppl 1):S19-S21.

14. Sanders MS, McCormick EJ, eds. Human Factors in Engineering and Design. 7th ed. McGraw-Hill Education; 1993.

15. Neisser U. Cognitive Psychology. Appleton-Century-Crofts; 1967.

16. Atkinson RC, Shiffrin RM. Human memory: A proposed system and its control processes. Psychology of Learning and Motivation. 1968;2:89-195.

17. Mattys SL, Davis MH, Bradlow AR, Scott SK. Speech recognition in adverse conditions: A review. Language and Cognitive Processes. 2012;27(7-8):953-978.

18. Wingfield A. Evolution of models of working memory and cognitive resources. Ear and Hearing. 2016;37:35S-43S.

19. Pichora-Fuller MK. Cognitive aging and auditory information processing. International Journal of Audiology. 2003;42(S2):26-32.

20. Rosenblum LD. Speech perception as a multimodal phenomenon. Curr Dir Psychol Sci. 2008;17(6):405-409.

21. Rosenblum LD, Dias JW, Dorsi J. The supramodal brain: Implications for auditory perception. Journal of Cognitive Psychology. 2017;29(1):65-87.

22. Rosenblum LD. Audiovisual speech perception and the McGurk Effect. Oxford Research Encyclopedia of Linguistics. 2019.

23. Sumby WH, Pollack I. Visual contribution to speech intelligibility in noise. Journal of the Acoustical Society of America. 1954;26(2).

24. McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264:746-748.

25. Summerfield Q. Some preliminaries to a comprehensive account of audio-visual speech perception. In: Dodd B, Campbell R, eds. Hearing by Eye: The Psychology of Lip-Reading. Lawrence Erlbaum Associates; 1987: 3-51.

26. Dias JW, McClaskey CM, Harris KC. Audiovisual speech is more than the sum of its parts: Auditory-visual superadditivity compensates for age-related declines in audible and lipread speech intelligibility. Psychol Aging. 2021;36(4):520-530.

27. Rosenblum LD. A confederacy of senses. Sci Am. 2013;308(1):72-75.

28. Abercrombie D. Elements of General Phonetics. Edinburgh University Press; 1967:1-17.

29. Kreiman J, Sidtis D. Foundations of Voice Studies: An Interdisciplinary Approach to Voice Production and Perception. Wiley-Blackwell Publishing; 2011.

30. Nygaard LC, Pisoni DB. Talker- and task-specific perceptual learning in speech perception. Presented at: The XIIIth International Congress of Phonetic Sciences; August 14-19, 1995; Stockholm, Sweden.

31. Sommers MS, Nygaard LC, Pisoni DB. Stimulus variability and spoken word recognition. I. Effects of variability in speaking rate and overall amplitude. J Acoust Soc Am. 1994;96(3).

32. Nygaard LC, Sommers MS, Pisoni DB. Speech perception as a talker-contingent process. Psychological Science. 1994;5(1):42-46.

33. Pisoni DB. Some thoughts on ‘normalization’ in speech perception. In: Johnson K, Mullennix JW, eds. Talker Variability in Speech Processing. Academic Press; 1997.

34. Theodore RM, Monto NR. Distributional learning for speech reflects cumulative exposure to a talker’s phonetic distributions. Psychon Bull Rev. 2019;26:985-992.

35. Gilbert JL, Tamati TN, Pisoni DB. Development, reliability, and validity of PRESTO: a new high-variability sentence recognition test. J Am Acad Audiol. 2013;24(1):26-36.

36. Tamati TN, Gilbert JL, Pisoni DB. Some factors underlying individual differences in speech recognition on PRESTO: A first report. J Am Acad Audiol. 2013;24(7):616-634.

37. Nygaard LC, Pisoni DB. Talker-specific learning in speech perception. Percept Psychophys. 1998;60:355-376.

38. Cleary M, Pisoni DB, Kirk KI. Influence of voice similarity on talker discrimination in children with normal hearing and children with cochlear implants. Journal of Speech, Language, and Hearing Research. 2005;48(1):204-223.

39. Cleary M, Pisoni DB. Talker discrimination by prelingually deaf children with cochlear implants: Preliminary results. Ann Otol Rhinol Laryngol Suppl. 2002;111(5_suppl):113-118.

40. Winn MB, Wendt D, Koelewijn T, Kuchinsky SE. Best practices and advice for using pupillometry to measure listening effort: An introduction for those who want to get started. Trends in Hearing. 2018;22.

41. Gianakas SP, Winn MB. The impact of prior topic awareness on listening effort during speech perception. Journal of the Acoustical Society of America. 2022;151(4).