Tech Topic | June 2022 Hearing Review

By Petri Korhonen, MSc, Francis Kuk, PhD, Chris Slugocki, PhD, and Greg Ellis, PhD

Anecdotally, wearers of Widex ZeroDelay technology report that sounds are perceived as “more three-dimensional,” “having more layers,” and “spacious.” This article explores the effects of reverberation on hearing aid listening and offers an explanation of why wearers may make such observations.

Natural sounds contain cues that inform beyond their explicit meaning. Spoken words provide cues that identify the talker (eg, a familiar voice vs an unknown person), reveal their emotional state (eg, happiness, sadness, anger), and indicate their intent (eg, question, sarcasm, irony). Natural sounds also contain cues that inform you about your environment—your location in the room, your position relative to the speaker, the acoustic properties of the room, the social purpose of the room, and much more. Preserving natural cues is important because listeners’ behaviors and experiences are shaped by them.

The acoustics of a space provide listeners with cues about the social purpose of a space, their location within that space, and the aesthetic properties of that space.1 The sounds we hear in an environment can suggest aural privacy or promote social cohesion. Footsteps in a hotel lobby echo around the room, announcing a person’s arrival, whereas that same person’s footsteps in the hotel bar are dampened by the carpeting, furniture, and draperies. The reverberant hotel lobby is meant to be inspiring (the visitor is in an elegant space) and informative (the hotel greeters know someone has arrived). The hotel bar is designed to be a place for private conversation, and the dampened footsteps reflect that. Theaters and concert halls are designed to enhance performances, so those spaces become extensions of the performances within them. Thoughtful acoustic design thus adds aural richness to a space. Sounds also help listeners orient in and navigate through space. Listeners moving in dark spaces and individuals with visual impairment can recognize open doors, walls, and other obstacles using sound alone. These natural acoustic properties must therefore be preserved for listeners to appreciate the designed purpose of a space and to navigate within it.

Consequences of unnatural sounds. If what a listener hears does not match the acoustic characteristics they associate with a space, they may describe the experience as “unnatural.” They may find the space aversive, feel disconnected, or have a “strange feeling.” A cathedral, for example, is acoustically designed to evoke a sense of grandeur and awe. Distorted acoustic cues may make the space feel relatively dull, small, or flat. In situations where visual information is limited, distorted auditory cues may suggest a different room layout, potentially resulting in trips or falls. Thus, it is important to preserve the natural spatial characteristics of the environment and the listener’s awareness of their own position in it.

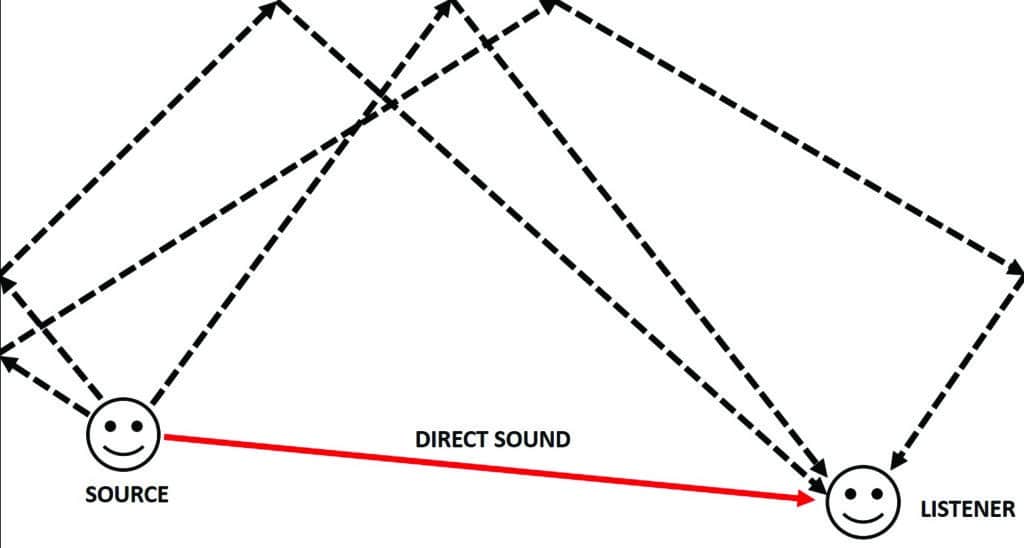

Reflection patterns characterize a space. Although a sound produced in a room is typically perceived as a single sound, what reaches the ear (eg, one word) consists of the same sound arriving many times within such a short period that our hearing system integrates the copies into one sound. The direct sound travels in a straight line from the source to the listener. In addition, some sound is reflected off the various surfaces in the room before it arrives at the ear (Figure 1). These reflections are classified by how much time has passed since the arrival of the direct sound.

Figure 1. A sound in a room reaches a listener’s ears via several pathways. The direct sound propagates in a straight line from the source to the listener. Any other sound is reflected off surfaces present in the room. Because the distance traveled by the reflected sound is greater than that of the direct sound, the reflected sounds arrive at the listener’s ears later and lower in amplitude.
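The geometry in Figure 1 can be made concrete with the image-source idea: a reflection off a flat wall travels the same distance as a straight line from the source’s mirror image behind that wall. The Python sketch below is a minimal illustration, not a room-acoustics model. It estimates how much later and how much weaker a single wall reflection arrives, assuming a speed of sound of 343 m/s, 1/r spherical spreading, and no wall or air absorption; the function name `wall_reflection` and the geometry are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at about 20 °C)

def distance(a, b):
    """Euclidean distance between two (x, y) points in metres."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

def wall_reflection(source, listener, wall_x):
    """Delay (ms) and relative amplitude of one reflection off the wall x = wall_x,
    both relative to the direct sound.

    Image-source method: the reflected path is as long as the straight line from
    the source's mirror image to the listener. Amplitude follows 1/r spreading
    only; wall and air absorption are ignored.
    """
    image = (2.0 * wall_x - source[0], source[1])
    direct_path = distance(source, listener)
    reflected_path = distance(image, listener)
    delay_ms = (reflected_path - direct_path) / SPEED_OF_SOUND * 1000.0
    relative_amplitude = direct_path / reflected_path  # (1/r_reflected) / (1/r_direct)
    return delay_ms, relative_amplitude

# Hypothetical geometry: talker 2 m in front of the listener, side wall 1.5 m away.
delay, amp = wall_reflection(source=(0.0, 2.0), listener=(0.0, 0.0), wall_x=1.5)
print(f"reflection arrives {delay:.2f} ms after the direct sound, "
      f"{-20 * math.log10(amp):.1f} dB below it")
```

Moving the wall closer to the listener (a smaller `wall_x`) shortens the delay and raises the reflection’s relative level.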

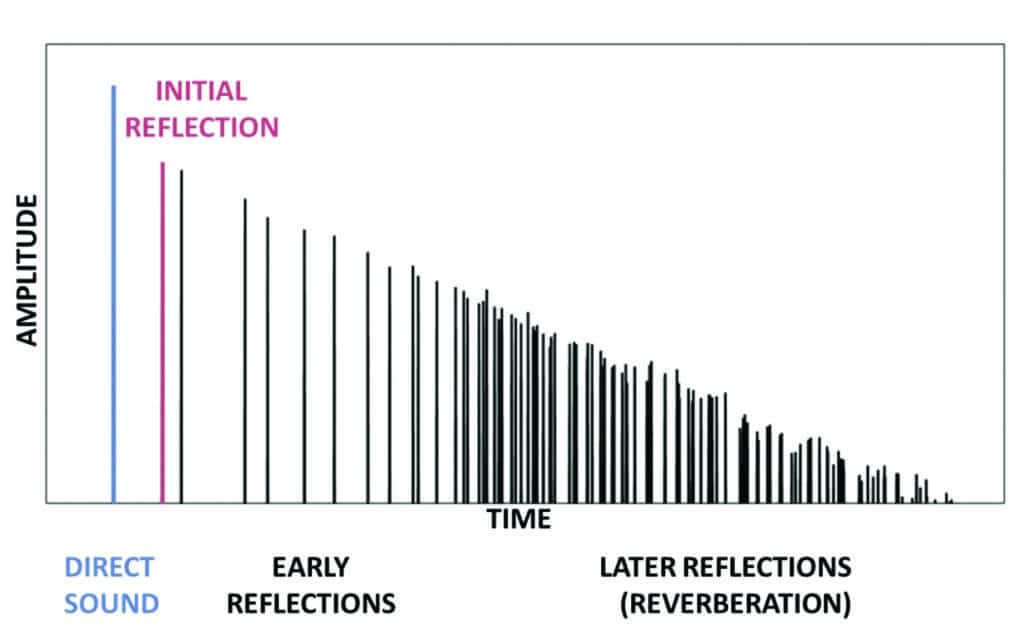

The first reflection to arrive is the initial reflection; together with the reflections that follow within roughly the first 80 ms, it forms the early reflections.2 The time delay between the direct sound and the initial reflection is the initial time delay gap (ITDG).

Late reflections are those that arrive after the early reflections.3 All reflections are delayed in time and lower in amplitude relative to the direct sound. The lower amplitudes result from the longer distance traveled (Figure 1) and from attenuation by the air and absorption at surfaces within the room. The specific timing and levels of the reflections depend on the size and shape of the room, the materials of the walls, the objects in the room and their materials, and the positions of the source and the listener within the room.

We can visualize the direct sound and the reflections by measuring and plotting a room impulse response (RIR). The RIR describes how a sound produced at the source location is changed by the room before it reaches the listener’s location. It can be thought of as the “fingerprint” of a specific pair of source and listener locations within a room. When the RIR is plotted as a function of time, as shown in Figure 2, we can see the direct sound, initial reflection, early reflections, and late reflections. As discussed below, each of these components provides information about the spatial characteristics of the listening environment and has a perceptual consequence.

Figure 2. The room impulse response in the time domain shows the direct sound, followed by distinct initial and early reflections, and finally diffuse late reflections decaying to silence.
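Because a room behaves, to a good approximation, as a linear time-invariant system, the sound at the listener’s position is the source signal convolved with the RIR. The sketch below builds a toy RIR with the parts labeled in Figure 2: a direct sound, a few discrete early reflections, and a late tail whose envelope falls by 60 dB over the reverberation time RT60. The reflection times and levels, the 80 ms tail onset, and the use of random noise for the tail are illustrative assumptions; the RT60 value simply reuses the 556 ms reported for the classroom in the study. The helper names are hypothetical.

```python
import math
import random

def synthetic_rir(fs, rt60, early, tail_start_s=0.08, tail_level=0.1, seed=0):
    """Toy room impulse response sampled at fs Hz.

    Sample 0 holds the direct sound (amplitude 1). `early` lists discrete early
    reflections as (delay_s, amplitude) pairs measured from the direct sound.
    From tail_start_s onward, a random late tail is added whose amplitude
    envelope falls by 60 dB over rt60 seconds.
    """
    rng = random.Random(seed)
    n = int(round((tail_start_s + rt60) * fs))
    h = [0.0] * n
    h[0] = 1.0  # direct sound
    for delay_s, amp in early:
        h[int(round(delay_s * fs))] += amp  # one discrete reflection
    # Envelope exp(-k t) is 60 dB down at t = rt60: 20*log10(exp(-k*rt60)) = -60
    k = 3.0 * math.log(10.0) / rt60
    start = int(round(tail_start_s * fs))
    for i in range(start, n):
        t = (i - start) / fs
        h[i] += tail_level * math.exp(-k * t) * rng.uniform(-1.0, 1.0)
    return h

def convolve(x, h):
    """Direct-form linear convolution: the signal x as heard through the room h."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

fs = 8000  # Hz; kept low so the pure-Python convolution stays quick
h = synthetic_rir(fs, rt60=0.556, early=[(0.006, 0.6), (0.011, 0.45), (0.019, 0.35)])
# The ITDG can be read off the RIR as the first non-zero sample after the direct sound.
itdg_samples = next(i for i in range(1, len(h)) if h[i] != 0.0)
heard = convolve([1.0, 0.5, 0.25], h)
print(f"ITDG {1000 * itdg_samples / fs:.1f} ms, RIR {len(h)} samples, output {len(heard)} samples")
```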

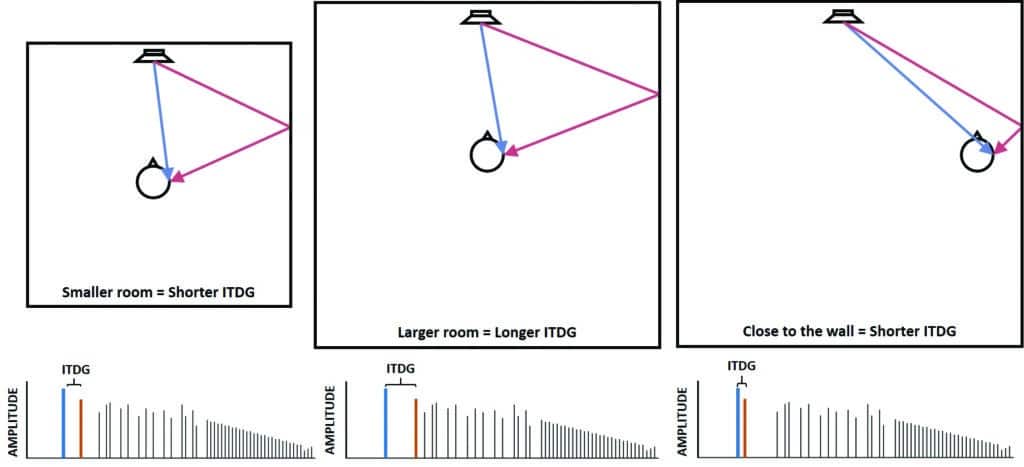

The entire RIR provides listeners with information on the size of the room and their location.4 The direct sound helps the auditory system determine the direction of the incoming sound.5 The pattern of the early reflections provides information about the physical characteristics of the room. The ITDG provides information about the size of, and the listener’s location within, a room (Figure 3).

Figure 3. The initial time delay gap (ITDG) is shorter for small rooms (left) than for larger rooms (center), and when the listener is closer to a wall than when positioned farther from the wall (right). This cue could be used by listeners to glean information about their location in a room.
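The dependence of the ITDG on listener position shown in Figure 3 can be estimated with first-order image sources in a rectangular (shoebox) room. The sketch below takes the ITDG as the extra travel time of the shortest first-order reflection; in a rectangular room with source and listener inside, higher-order reflections always travel farther, so they cannot arrive first. The room dimensions reuse the 10 × 5.5 × 2.3 m classroom from the study, but the source and listener coordinates, the 1.2 m ear height, and the 343 m/s speed of sound are assumptions for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at about 20 °C)

def first_order_images(source, room):
    """Mirror the source across each of the six walls of a rectangular room that
    spans 0..Lx, 0..Ly, 0..Lz, where room = (Lx, Ly, Lz) in metres."""
    images = []
    for axis in range(3):
        for wall in (0.0, room[axis]):
            image = list(source)
            image[axis] = 2.0 * wall - source[axis]
            images.append(tuple(image))
    return images

def itdg_ms(source, listener, room):
    """Initial time delay gap: extra travel time of the shortest first-order
    reflection relative to the direct sound."""
    direct = math.dist(source, listener)
    first = min(math.dist(img, listener) for img in first_order_images(source, room))
    return (first - direct) / SPEED_OF_SOUND * 1000.0

room = (10.0, 5.5, 2.3)  # classroom dimensions from the study
# Hypothetical positions: ear height 1.2 m, source 1 m to the side of the listener.
center = itdg_ms(source=(5.0, 1.75, 1.2), listener=(5.0, 2.75, 1.2), room=room)
near_wall = itdg_ms(source=(5.0, 4.2, 1.2), listener=(5.0, 5.2, 1.2), room=room)
print(f"ITDG at center: {center:.2f} ms; 0.3 m from the long wall: {near_wall:.2f} ms")
```

With these coordinates the ceiling reflection arrives first at the center position, while 0.3 m from the long wall that wall’s reflection arrives first and the ITDG is shorter.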

Early reflections help improve speech intelligibility.6 They also provide important cues for judging spatial impression, such as the apparent width of a sound source.7 The level of the late reflections relative to the direct sound provides a cue for judging distance.8 Thus, a great deal of information about our surroundings reaches the auditory system within roughly the first 100 ms after the direct sound.
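The distance cue carried by the late reflections is often summarized as the direct-to-reverberant energy ratio (DRR). The sketch below computes a DRR from an impulse response by treating the energy within a short window around the strongest peak as direct sound and everything after it as reverberant. The 2.5 ms window, the helper names, and the toy RIR (direct sound following 1/r, plus a fixed deterministic tail standing in for diffuse reverberation, which is roughly the same throughout a room) are assumptions for illustration.

```python
import math

def drr_db(rir, fs, window_ms=2.5):
    """Direct-to-reverberant energy ratio in dB.

    Energy within +/- window_ms of the strongest peak counts as direct sound;
    energy after that window counts as reverberant.
    """
    peak = max(range(len(rir)), key=lambda i: abs(rir[i]))
    half = int(round(window_ms * fs / 1000.0))
    start, stop = max(0, peak - half), min(len(rir), peak + half + 1)
    direct = sum(v * v for v in rir[start:stop])
    reverberant = sum(v * v for v in rir[stop:])
    return 10.0 * math.log10(direct / reverberant)

def toy_rir(distance_m, fs=16000, gap_s=0.01, tail_s=0.3, tail_level=0.05):
    """Hypothetical RIR: direct sound scaled by 1/distance, then a fixed,
    exponentially decaying tail that does not depend on distance."""
    gap = int(gap_s * fs)
    n_tail = int(tail_s * fs)
    h = [0.0] * (gap + n_tail)
    h[0] = 1.0 / distance_m
    for i in range(n_tail):
        sign = 1.0 if i % 2 == 0 else -1.0
        h[gap + i] = sign * tail_level * math.exp(-i / (0.05 * fs))
    return h

for d in (1.0, 2.0, 4.0):
    print(f"{d:.0f} m: DRR {drr_db(toy_rir(d), 16000):.1f} dB")
```

In this toy model, doubling the distance lowers the direct sound by about 6 dB while the tail stays the same, so the DRR also falls by about 6 dB per doubling of distance.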

Digital hearing aids can distort reflection patterns. Anything that affects the reflection pattern of a room can disrupt the natural cues one uses to “acoustically feel” an environment. Although digital hearing aids have provided tremendous benefits to people with a hearing loss, they can potentially distort the reflection pattern because they take time to process sounds.

The amount of time a hearing aid takes to process sounds is called its processing delay. Most hearing aids have a processing delay under 10 ms, which is not problematic in closed fittings because very little direct sound enters the ear canal. In open fittings, however, direct, unprocessed sound mixes with delayed, processed sound in the ear canal, resulting in an audible artifact known as comb filtering.9 Perceptually, sounds can become “hollow” or “metallic,” which can be quite bothersome.
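Comb filtering follows directly from adding a sound to a delayed copy of itself: at frequencies where the copy arrives half a period late, it partially or fully cancels the direct sound, producing notches at odd multiples of 1/(2τ) spaced 1/τ apart. The sketch below treats the hearing aid as a pure delay τ with one broadband gain, which is an assumption (real devices apply frequency-dependent gain, so notch depth varies across frequency). With τ = 8 ms the notches are 125 Hz apart starting at 62.5 Hz; with τ = 0.5 ms they are 2 kHz apart starting at 1 kHz. The function names are hypothetical.

```python
import cmath
import math

def comb_response_db(f_hz, delay_s, gain):
    """Level (dB) of the direct sound plus a copy delayed by delay_s and scaled by gain,
    H(f) = 1 + gain * exp(-j 2 pi f delay), relative to the direct sound alone."""
    h = 1.0 + gain * cmath.exp(-2j * math.pi * f_hz * delay_s)
    return 20.0 * math.log10(max(abs(h), 1e-12))  # floor avoids log10(0) at a perfect null

def notch_frequencies(delay_s, f_max_hz):
    """Frequencies up to f_max_hz where the copy arrives half a cycle late:
    f_k = (2k + 1) / (2 * delay_s), spaced 1 / delay_s apart."""
    notches = []
    k = 0
    while (2 * k + 1) / (2.0 * delay_s) <= f_max_hz:
        notches.append((2 * k + 1) / (2.0 * delay_s))
        k += 1
    return notches

for delay_ms in (0.5, 6.0, 8.0):
    notches = notch_frequencies(delay_ms / 1000.0, 8000.0)
    depth = comb_response_db(notches[0], delay_ms / 1000.0, gain=0.7)
    print(f"{delay_ms} ms: {len(notches)} notches up to 8 kHz, "
          f"first at {notches[0]:.1f} Hz ({depth:.1f} dB with gain 0.7)")
```

Fewer, more widely spaced notches below 8 kHz is one way to see why a shorter delay changes the character of the comb-filter artifact.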

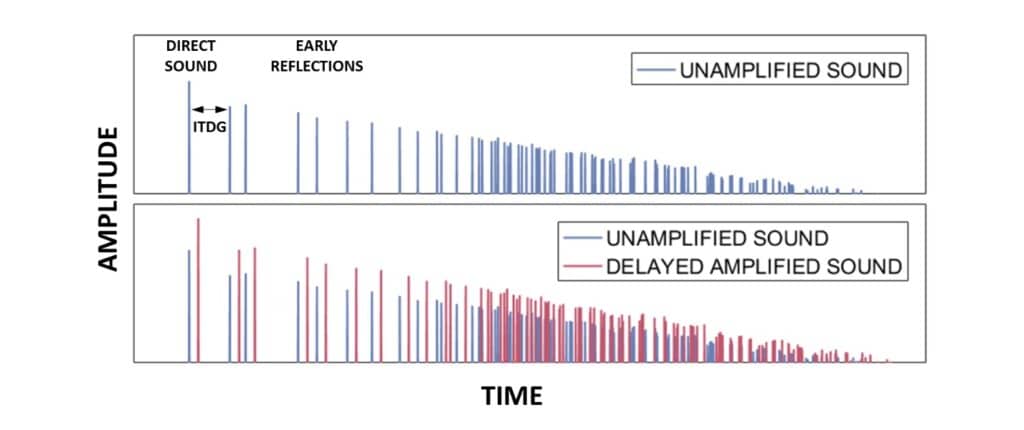

Shorter processing delays have been shown to improve listening preference10 and sound quality.11 We speculate that these effects may extend to spatial perception as well. In particular, we predict that processing delay interferes with the natural initial and early reflections.

Consider the RIR shown in Figure 4. The unamplified sound (blue, top) includes a clearly defined direct sound, followed by the initial reflection (and the associated ITDG) and the early reflections that characterize the spatial qualities of the listening space. In an open fitting, each of these arrivals is followed by an amplified copy delayed by the hearing aid’s processing time. The mix of unamplified sound and delayed amplified sound (blue and red, bottom) therefore creates a shorter ITDG, because the delayed copy of the direct sound can arrive before the room’s own initial reflection, as well as a greater density of early reflections. The amplitudes of the early reflections are also no longer consistent with those of sounds normally heard in the same room. The distorted RIR thus suggests a different space from the one the listener is in. For example, large spaces engineered to evoke feelings of spaciousness or awe may feel relatively confined and restricted, and a listener may feel closer to a wall than they are. The listener’s impression of the surrounding space becomes unnatural.

Figure 4. Room impulse response for unamplified sound (top) and the mix of unamplified and delayed amplified sound (bottom), showing the distortion of reflection pattern by addition of extra reflections.
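The effect described for Figure 4 can be sketched with a list of arrivals. In an open fitting, every arrival in the unaided pattern (direct sound and reflections) is followed by an amplified copy delayed by the hearing aid. The sketch below treats the hearing aid as a pure delay with a single broadband gain, uses made-up arrival times for the room, and counts arrivals closer together than an assumed 1 ms resolution as a single arrival when reading off the pattern. That resolution is an illustrative assumption, not a measured perceptual threshold, and the function names are hypothetical.

```python
def mix_with_aid(arrivals, delay_ms, gain):
    """Arrivals (time_ms, amplitude) in an open fitting: the unaided arrivals plus a
    copy of each one delayed by the hearing aid and scaled by one broadband gain."""
    aided = [(t + delay_ms, a * gain) for t, a in arrivals]
    return sorted(arrivals + aided)

def resolve_arrivals(arrivals, resolution_ms=1.0):
    """Fold any arrival that follows the previous kept arrival by less than
    resolution_ms into that arrival (amplitudes simply added; a crude choice)."""
    kept = []
    for t, a in sorted(arrivals):
        if kept and t - kept[-1][0] < resolution_ms:
            t0, a0 = kept[-1]
            kept[-1] = (t0, a0 + a)
        else:
            kept.append((t, a))
    return kept

def apparent_itdg_ms(arrivals):
    """Gap between the first and second arrival in the pattern."""
    times = sorted(t for t, _ in arrivals)
    return times[1] - times[0]

# Hypothetical unaided pattern: direct sound, then reflections at 10, 13.5 and 20 ms.
room = [(0.0, 1.0), (10.0, 0.5), (13.5, 0.4), (20.0, 0.3)]
for delay_ms in (0.5, 8.0):
    pattern = resolve_arrivals(mix_with_aid(room, delay_ms, gain=2.0))
    print(f"{delay_ms} ms delay: ITDG {apparent_itdg_ms(pattern):.1f} ms, "
          f"{len(pattern)} distinct arrivals (unaided: {len(room)})")
```

Under these assumptions, an 8 ms delay shortens the apparent ITDG from 10 ms to 8 ms and doubles the number of distinct early arrivals, while a 0.5 ms delay places each copy immediately behind the arrival it duplicates and leaves the apparent pattern unchanged.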

Preserving natural reflection patterns. Because hearing aid processing delay alters the time course of the reflection pattern, it is reasonable to expect that shortening the processing delay could preserve the natural cues and improve spatial perception. In commercial hearing aids, processing delay typically ranges from about 2.5 ms to 8 ms.9

Recently, Widex introduced ZeroDelay technology, with a processing delay as short as 0.5 ms across frequencies. This suggests that reflection patterns measured with this technology would be very similar to the natural reflection patterns. Perceptually, wearers of this technology would be expected to experience greater spaciousness, consistent with the anecdotal reports described above, and sounds should also be more externalized than with hearing aids that have longer processing delays.
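One way to put these delays in spatial terms: a copy of the direct sound arriving τ after it has the same timing as a reflection whose path is c·τ longer. In the simplest case, a wall directly behind the listener with the source in front on the same line, the extra path is twice the listener’s distance d from the wall, so d = c·τ/2. The sketch below converts the delays mentioned above into such equivalent wall distances, assuming c = 343 m/s and this idealized geometry. It is a timing analogy only (the hearing aid’s copy does not arrive from the wall’s direction), and the function name is hypothetical.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed (air at about 20 °C)

def equivalent_wall_distance_m(delay_ms):
    """Distance d to a wall directly behind the listener (source in front, on the
    wall's normal through the listener) whose reflection would arrive delay_ms
    after the direct sound: extra path 2 * d = c * delay, so d = c * delay / 2."""
    return SPEED_OF_SOUND * delay_ms / 1000.0 / 2.0

for delay_ms in (0.5, 2.5, 6.0, 8.0):
    print(f"{delay_ms:>4} ms delay ~ wall {equivalent_wall_distance_m(delay_ms):.2f} m away")
```

Under these assumptions, 8 ms corresponds to a wall about 1.4 m away, whereas 0.5 ms corresponds to less than 10 cm, which is one way to read the earlier remark that a listener may feel closer to a wall.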

We have noted previously12 that the PureSound program on the Widex MOMENT, which is based on ZeroDelay technology, yields a more robust envelope following response (EFR), indicating better preservation of the natural input. Thus, we expect ZeroDelay technology to result in better spatial perception than hearing aids with longer delays. The current study examined whether the ZeroDelay technology used in the PureSound program improves listeners’ ability to discriminate spatial locations compared with competitive premium hearing aids with longer delays.

Study Methods

Participants. A total of 15 older hearing-impaired listeners (mean age = 71.4 years, range = 53-85 years) participated. Participants had bilaterally symmetric, mild-to-moderate, sloping sensorineural hearing losses. None of the participants who wore hearing aids were using any of the study hearing aids at the time of fitting. All participants passed cognitive screening on the Montreal Cognitive Assessment (MoCA; mean score = 27.7). The protocol was approved by an external institutional review board, and informed consent was obtained from all participants.

Hearing aids. The Widex MOMENT hearing aid was compared with premium products from two other manufacturers (manufacturer #1 delay = 8 ms; manufacturer #2 delay = 6 ms). The comparison used recordings of hearing aid (HA) output made on a KEMAR manikin placed at different locations in a classroom. The HAs were adjusted to meet NAL-NL2 targets for four standard audiogram configurations (N1, N2, N3, and S1),13 and each participant heard the test materials for the audiogram that most closely matched their own. Advanced hearing aid features were left on at their default settings. Hearing aids were coupled to KEMAR with open-fit ear tips inserted fully, so that each ear tip was as close to the terminus of KEMAR’s ear canal as possible.

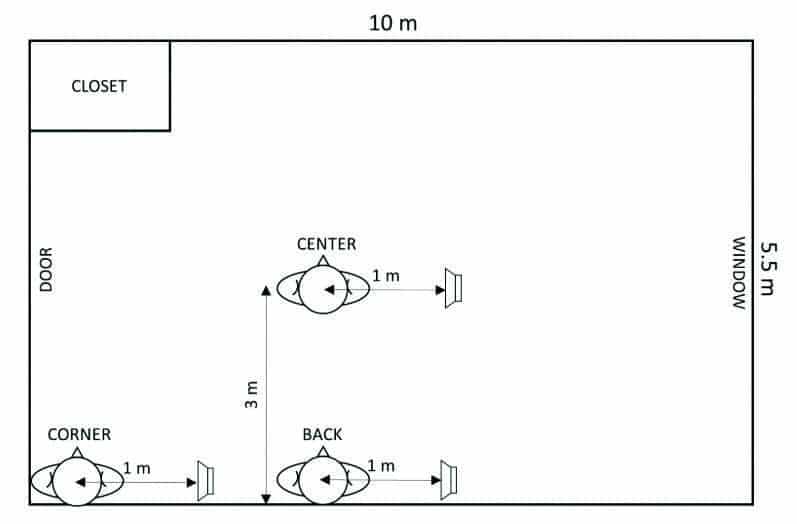

Experiment. Hearing aid output was recorded with KEMAR positioned at three locations within a classroom (10 × 5.5 × 2.3 m; RT60 = 556 ms): the center of the room, the back of the room against the long wall, and a corner of the room (Figure 5). The presentation loudspeaker was positioned 1 m from the center of KEMAR’s head at 90° to the right. The stimulus was a recording of the natural speech token /batch/ presented at 65 dB SPL, and it was recorded with KEMAR at each room location.

Figure 5. The listening locations across the room in the study.

Listeners’ ability to discriminate between recordings made at different KEMAR positions was assessed using a three-alternative forced-choice (3AFC) task with insert-earphone presentation. On each trial, listeners heard three stimuli presented sequentially, all recorded in the same condition: one of the three HAs, or unaided (presented at the most comfortable level, MCL). HA conditions were interleaved and pseudo-randomized across trials within a block. Two of the three stimuli on a trial were recorded with KEMAR in the center position; the remaining stimulus was recorded with KEMAR in a different position (ie, back or corner). Listeners indicated on a touch screen monitor which of the three stimuli was different. Only one position contrast (ie, center versus back or center versus corner) was assessed per test block, and the blocks for each contrast were later repeated to confirm reliable performance.
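Because a listener who hears no difference still picks the odd stimulus on one trial in three, 3AFC percent-correct scores such as those in Figure 6 are read against a 33% chance floor. The sketch below is a generic binomial calculation, not the analysis used in this study; it finds the smallest score that a purely guessing listener would reach with a probability of at most 5%. The number of trials per condition is not reported here, so n = 30 in the example is purely hypothetical, as are the function names.

```python
from math import comb

def p_at_least(k, n, p=1/3):
    """Probability of k or more correct in n trials when guessing with success rate p
    (p = 1/3 for a three-alternative forced-choice task)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

def min_score_above_chance(n, alpha=0.05, p=1/3):
    """Smallest k with P(X >= k) <= alpha under guessing, or None if no score qualifies."""
    for k in range(n + 1):
        if p_at_least(k, n, p) <= alpha:
            return k
    return None

n_trials = 30  # hypothetical; trials per condition are not reported
k = min_score_above_chance(n_trials)
print(f"at least {k}/{n_trials} correct ({100 * k / n_trials:.0f}%) "
      f"is needed to exceed chance at alpha = 0.05")
```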

Results

Figure 6 summarizes individual participants’ performance on the discrimination task across hearing aid conditions. In general, discrimination performance was best in the PureSound condition for the “center” versus “back” contrast. For most participants, performance in the PureSound condition was also on par with unaided performance for the “center” versus “corner” contrast.

Figure 6. Discrimination performance (percent correct) of individual participants (colored points/lines) in the discrimination task. The left panel shows performance for the “center” vs “corner” contrast. The right panel shows performance for the “center” vs “back” contrast. Participants’ hearing loss profiles are denoted by the shape of the points.

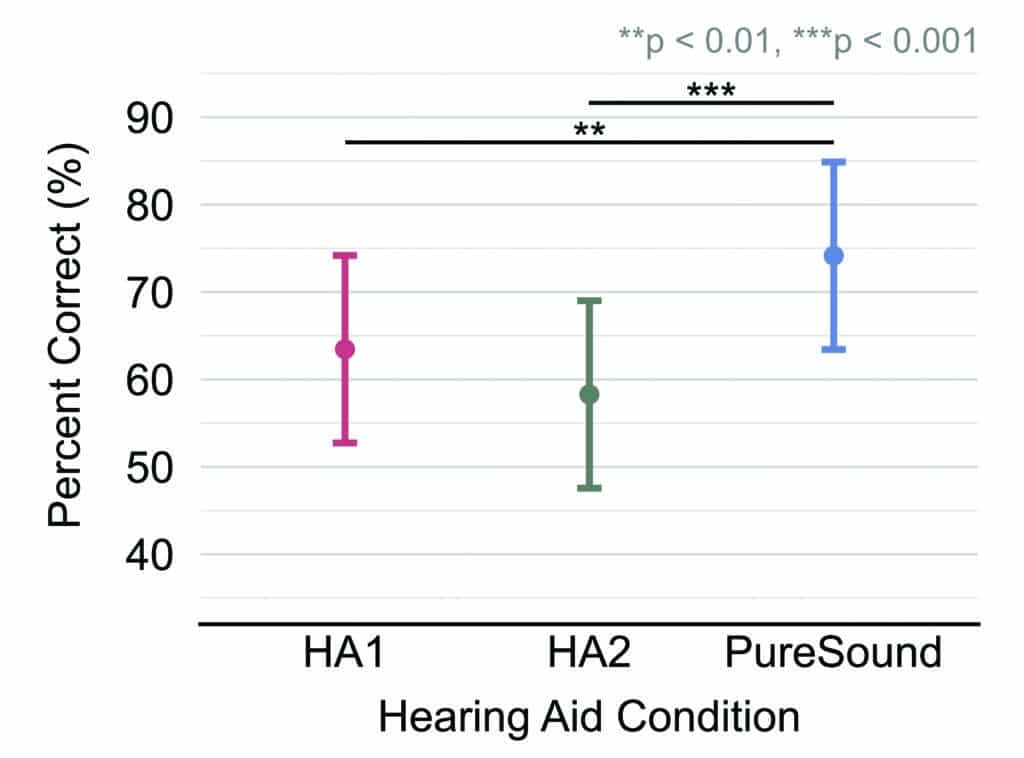

Linear mixed-effects models were used to assess the fixed effects of location contrast (2 levels: center vs corner and center vs back) and hearing aid condition (3 levels: HA1, HA2, and PureSound), as well as their interaction, on participants’ location discrimination. The results of the analysis are summarized graphically in Figure 7. Only HA condition significantly affected listeners’ ability to discriminate between listening positions within the classroom. Post-hoc analyses confirmed that listeners discriminated location correctly more often when listening through PureSound than through the other HAs.

Figure 7. Listeners’ abilities to discriminate the location in which KEMAR recordings were made within a reverberant classroom with three different hearing aids. Horizontal bars denote significance.

Discussion

Listeners were better able to discriminate between different listening positions within a reverberant room when listening through the Widex hearing aids with ZeroDelay technology than through other premium hearing aids with longer processing delays. This suggests that the technology preserves the natural cues that listeners use for spatial perception better than hearing aids with longer processing delays do. The longer processing delays likely smeared the initial and early reflections, making it more difficult for listeners to discriminate between positions. The ultra-low delay preserves the natural reflection pattern of the room, which may explain why wearers describe sounds as more natural, spacious, layered, or three-dimensional.

It is important to note that the benefit of ZeroDelay technology, preserving the naturalness of sounds, does not come at the cost of speech-in-noise performance. Our previous study14 comparing the MOMENT PureSound hearing aid with premium hearing aids from the same manufacturers used in the present study showed no difference in speech-in-noise performance on the Quick RRT.

Along with other unique features of Widex hearing aids, such as the wind noise attenuation program,15 ZeroDelay technology is an “icing on the cake” feature that goes beyond what other current premium hearing aids can provide.

ZeroDelay technology may facilitate the acceptance of hearing aids, especially among people with mild-to-moderate hearing loss. Reasons for non-acceptance include unnatural sound and minimal perceived benefit.16 If hearing aids can preserve most, if not all, of the naturalness of the processed sounds, new hearing aid wearers may form a more positive first impression of the recommended hearing aids. This will likely lead to greater satisfaction, quicker acceptance, and longer continued use of hearing aids.

Correspondence can be addressed to Petri Korhonen.

Citation for this article: Korhonen P, Kuk F, Slugocki C, Ellis G. Low processing delay preserves natural cues and improves spatial perception in hearing aids. Hearing Review. 2022;29(6):20-25.

References

- Blesser B, Salter L-R. Spaces Speak, Are You Listening? The MIT Press; 2006.

- International Organization for Standardization (ISO). ISO 3382:1997. Acoustics – Measurement of the reverberation time of rooms with reference to other acoustical parameters. Published 1997.

- Blauert J. Spatial Hearing: The Psychophysics of Human Sound Localization. 2nd ed. The MIT Press; 1997.

- McGrath C, Blythe J, Krackhardt D. Seeing groups in graph layouts. Connections. 1996;19(2):22-29.

- Wallach H, Newman EB, Rosenzweig MR. The precedence effect in sound localization. Am J Psychol. 1949;62(3):315-336.

- Arweiler I, Buchholz J, Dau T. Speech intelligibility enhancement by early reflections. Proc Int Symp Auditory and Audiol Res (ISAAR). 2009;2:289-298.

- Barron M. The subjective effects of first reflections in concert halls: The need for lateral reflections. J Sound Vib. 1971;15(4):475-494.

- Bronkhorst AW, Houtgast T. Auditory distance perception in rooms. Nature. 1999;397:517-520.

- Balling LW, Townend O, Stiefenhofer G, Switalski W. Reducing hearing aid delay for optimal sound quality: A new paradigm in processing. Hearing Review. 2020;27(4):20-26.

- Balling LW, Townend O, Helmink D. Sound quality for all: The benefits of ultra-fast signal processing in hearing aids. Hearing Review. 2021;28(9):32-35.

- Schepker H, Denk F, Kollmeier B, Doclo S. Subjective sound quality evaluation of an acoustically transparent hearing device. In: Proceedings of the AES International Conference on Headphone Technology; August 27-29, 2019; San Francisco, CA. https://www.aes.org/e-lib/browse.cfm?elib=20517.

- Slugocki C, Kuk F, Korhonen P, Ruperto N. Neural encoding of the stimulus envelope facilitated by Widex ZeroDelay technology. Hearing Review. 2020;27(8):28-31.

- Bisgaard N, Vlaming MSMG, Dahlquist M. Standard audiograms for the IEC 60118-15 measurement procedure. Trends Amplif. 2010;14(2):113-120.

- Kuk F, Ruperto N, Slugocki C, Korhonen P. Efficacy of directional microphones in open fittings under realistic signal-to-noise ratios using Widex MOMENT hearing aids. Hearing Review. 2020;27(6):20-23.

- Korhonen P, Kuk F, Seper E, Mørkebjerg M, Roikjer M. Evaluation of a wind noise attenuation algorithm on subjective annoyance and speech-in-wind performance. J Am Acad Audiol. 2017;28(1):46-57.

- Kochkin S. MarkeTrak V: “Why my hearing aids are in the drawer”: The consumer’s perspective. Hear J. 2000;53(2):34-41.