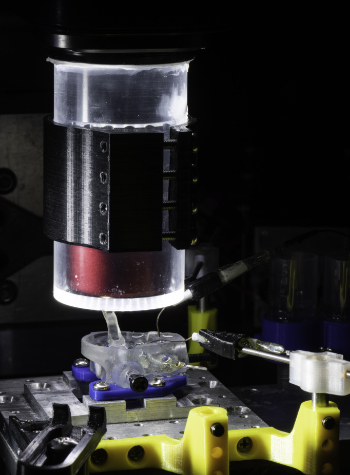

Close-up of a 3D-printed, scaled model of a gerbil cochlea. The cochlea of the inner ear is where incoming sound waves trigger minute vibrations of the hair cells. These vibrations are then converted into neural signals that are delivered to the brain. (University of Rochester photo / J. Adam Fenster)

By Bob Marcotte, Senior Communications Officer for Science, Engineering and Research, University of Rochester

Will it ever be possible for hearing aids to compensate for hearing loss to the same degree that eyeglasses and contact lenses correct our vision? Will hard-of-hearing people eventually be able to separate out a single conversation at a crowded party, hearing the voices as clearly as corrective glasses and contact lenses can help us see a single tree in a forest?

Despite recent advances in hearing aids, a frequent complaint among users is that the devices tend to amplify all the sounds around them, making it hard to distinguish what they want to hear from background noise, said Jong-Hoon Nam, a researcher at the University of Rochester.

Nam, a professor of both mechanical and biomedical engineering, believes a key part of the answer to the problem lies inside the cochlea of the inner ear. That’s where incoming sound waves trigger minute vibrations of the hair cells, the sensory receptor cells of the inner ear. These mechanical vibrations are then converted into neural signals that are delivered to the brain. An article detailing the research appears on the University’s website.

“The mission of our laboratory is to explain the precise moment when that conversion happens,” said Nam. That determination could provide the basic science needed for hearing devices to fully compensate for the degrees of hearing loss, which vary from one individual to another and from the left ear to the right ear in each individual.

“No two hearing aids should be the same,” Nam said.

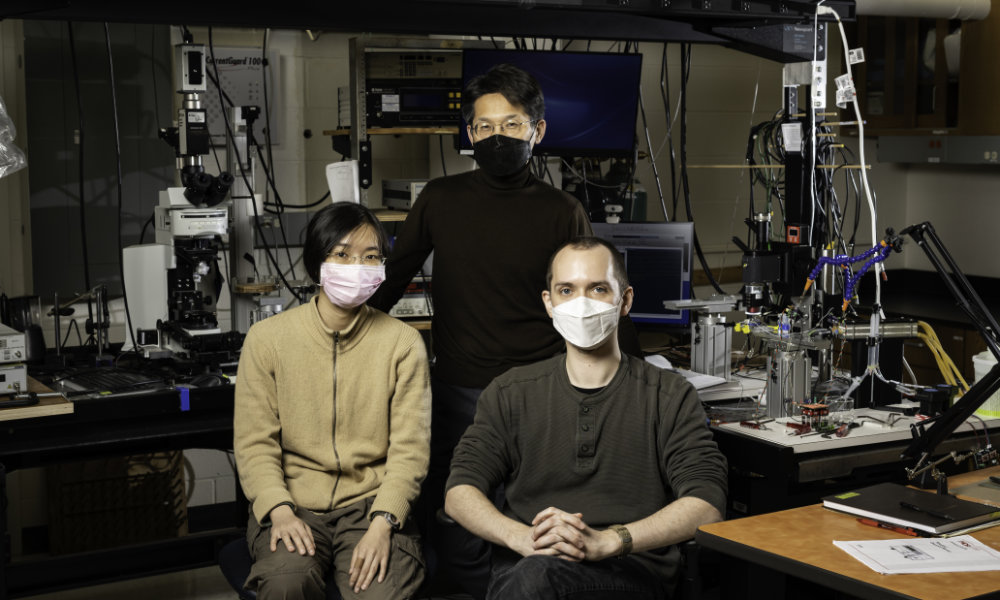

“No two hearing aids should be the same,” said Jong-Hoon Nam (center), pictured in his lab with research engineer Jonathan Becker ’15, ’17 (MS) (right) and PhD student Wei-Ching Lin. (University of Rochester photo / J. Adam Fenster)

Nam’s research has been funded by a recently renewed National Institutes of Health (NIH) grant, which will total $4 million through 2025, plus nearly $800,000 in National Science Foundation (NSF) funding. Both grants have helped Nam support seven mechanical engineering and biomedical engineering PhD students and have allowed him to hire three to four undergraduate research assistants each summer.

His collaborations with colleagues in the Departments of Mechanical Engineering and Biomedical Engineering, the University of Rochester Medical Center, and the University of Wisconsin School of Medicine have resulted in numerous papers. Recent highlights include:

- How outer hair cells in the cochlea, contrary to prevailing views, can both amplify and reduce vibrations to enhance cochlear tuning;

- A computer model that can be used to interpret and analyze how the response to one tone can be reduced by the presence of another tone in a healthy cochlea—a capability that disappears when the cochlea loses its sensitivity in persons with hearing loss;

- Simulations showing that imbalances of Ca²⁺, a calcium ion that controls a variety of cellular processes, may contribute to making outer hair cells, especially those in the high-frequency region of the cochlea, most vulnerable to damage;

- How extended silence could harm rather than help hearing health—a finding that could have applications for inner-ear drug delivery.

Nam’s research group uses a specially designed microfluidic chamber to image cochlear tissue and see what is happening at the cellular level. (University of Rochester photo / J. Adam Fenster)

Optical coherence tomography spurs quantum leap in hearing research

Nam is using the same tool that helped ophthalmologists achieve major advances in vision correction. Optical coherence tomography is an imaging technique that allows researchers to capture micrometer-resolution, two- and three-dimensional images from within biological tissues.

The technology has provided a quantum leap forward in cochlear research, said Nam, whose lab occupies a unique niche in the field.

Other research groups use the imaging technology to study the vibration of cochlear tissues in live animals. However, the optical beam loses power as it moves through skin and bone. Instead, Nam’s group images the cochlear tissues in a specially designed microfluidic chamber, enabling his group to see what is happening at the cellular level. “We can provide further details that other researchers could not see,” he said.

Other labs also tend to focus on either animal models or computer simulations. As a result, experimental groups often have difficulty interpreting the findings of the theoretical simulation groups, and vice versa. “That miscommunication is very costly, and often adds confusion instead of progress in research,” said Nam.

Nam’s lab combines animal models and computer simulations. As a result, “we can be more confident in our findings; we can make new hypotheses that otherwise could not be tested,” Nam said.

Hearing devices have improved during the last decade or so. The latest digital hearing aids, for example, have automatic features that can adjust the volume and programming for improved hearing in different environments. Moreover, Apple’s latest generation of attractive bidirectional earplugs has reduced the stigma of wearing hearing aids, even among young people.

“Now it looks cool,” Nam said. However, these hearing devices still fall short of the performance standards that have been achieved in correcting vision.

According to Nam, there is still a long road ahead. But the new imaging and computer modeling technologies make this an exciting time for cochlear research, offering the promise of a comparable quantum leap in more effective aids and implants for the hearing impaired.

Source: University of Rochester

Images: University of Rochester / J. Adam Fenster