
Lee D. Hager

Appropriate selection of a hearing protection device (HPD) is based on individual preference related to comfort (see the article by Behar in this issue of The Hearing Review) and sufficient attenuation as compared to individual noise exposure. While no standardized method for assessing comfort has yet been derived, methods for determining HPD attenuation and performance have been standardized for some time. Questions linger regarding the appropriateness of some evaluation methodologies, but the methods and protocols for testing are well understood.

The Two Evaluation Approaches: REAT & MIRE

Two general approaches to HPD evaluation are commonly employed. Real-ear attenuation at threshold (REAT) is the “gold standard” of HPD testing. REAT consists of a hearing test with ears open and a hearing test with ears occluded by HPDs. The difference between the two measures is assumed to be the amount of protection offered by the HPD.
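Here is a minimal sketch of the REAT arithmetic described above. It assumes thresholds are recorded in dB at each test frequency. The attenuation at each frequency is then the occluded threshold minus the open threshold, which is how much louder a tone must be to be heard through the HPD. The frequency list and all threshold values are hypothetical illustrations, not data from any HPD test.

```python
# Assumed test frequencies (Hz): the nine one-third-octave bands commonly
# used for REAT testing. All threshold values below are hypothetical.
FREQS_HZ = [125, 250, 500, 1000, 2000, 3150, 4000, 6300, 8000]


def reat_attenuation(open_db, occluded_db):
    """Per-frequency REAT attenuation in dB.

    open_db: hearing thresholds measured with ears open.
    occluded_db: thresholds at the same frequencies with the HPD in place.
    Attenuation = occluded threshold - open threshold.
    """
    if len(open_db) != len(occluded_db):
        raise ValueError("threshold lists must cover the same frequencies")
    return [occ - opn for opn, occ in zip(open_db, occluded_db)]


open_thresholds = [10, 5, 5, 0, 5, 10, 10, 15, 15]
occluded_thresholds = [35, 32, 33, 35, 40, 48, 47, 52, 50]

for freq, att in zip(FREQS_HZ, reat_attenuation(open_thresholds, occluded_thresholds)):
    print(f"{freq:>5} Hz: {att} dB")
```

In practice each value would itself be an average over subjects and trials, because a single threshold pair carries all the test-retest variability of pure-tone audiometry.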

Although REAT testing is widely employed and is the basis for most global standards regarding HPD evaluation, it is subject to several complicating variables. Test conditions can be a significant variable. Because earplug effectiveness depends heavily on proper use, REAT measures vary with how well (and by whom) the earplug is inserted. Experimenter-fit HPDs tend to test at higher attenuation values than do subject-fit HPDs. Experienced test subjects likewise tend to produce higher results, because their familiarity with the test protocol and with earplug use tends to yield a best-case evaluation.

Since REAT is a subjective threshold test, it is subject to the variability inherent in any test that requires human detection and response to stimulus. The variability found in pure-tone audiometric testing is an inherent variable in REAT HPD testing. Additionally, if conducted in low sound level environments, the REAT may be subject to contamination by physiological noise. The sounds generated by a living human (blood flow, digestion, etc.) may interfere with the perception of test signals, especially at low frequencies.

The other primary approach is called microphone in real-ear (MIRE) testing. This process measures the sound pressure level outside the earplug and under the earplug while it is in place. The difference yields either insertion loss (if measurements are done sequentially) or noise reduction (if done simultaneously). Neither insertion loss nor noise reduction is directly comparable to REAT findings. The subjective nature of REAT testing inherently includes effects such as physiological noise (described above) and the acoustic effects of the outer ear (ie, impedance of the occluded ear canal, resonant frequency of the ear canal, and other variables collectively referred to as the transfer function of the outer ear).
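The two MIRE quantities can be sketched as level differences that use the same arithmetic but subtract different pairs of readings. Noise reduction pairs an outside microphone with an under-HPD microphone recorded at the same moment. Insertion loss compares the in-ear level without the HPD to the in-ear level with it, recorded one after the other. The function names and per-band levels below are hypothetical. Real MIRE analysis must also handle microphone calibration and placement, which this sketch ignores.

```python
def noise_reduction(outside_db, under_hpd_db):
    """Noise reduction (dB): SPL outside the HPD minus SPL under it,
    both captured at the same moment (simultaneous measurement)."""
    return [o - u for o, u in zip(outside_db, under_hpd_db)]


def insertion_loss(ear_open_db, ear_occluded_db):
    """Insertion loss (dB): SPL at the in-ear microphone position without
    the HPD minus SPL at that position with the HPD (sequential measurement)."""
    return [o - c for o, c in zip(ear_open_db, ear_occluded_db)]


# Hypothetical per-band levels in dB SPL for three frequency bands.
outside = [95.0, 95.0, 95.0]
under = [75.0, 68.0, 60.0]
ear_open = [96.0, 100.0, 104.0]
ear_occluded = [76.0, 72.0, 67.0]

print("NR:", noise_reduction(outside, under))
print("IL:", insertion_loss(ear_open, ear_occluded))
```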

MIRE techniques have built-in challenges as well. “Instrumenting” an ear—placing a microphone between the interior end of the HPD and the eardrum—can be problematic. Microphone cables that run around or through the HPD can cause acoustic leaks, leading to inaccurate measurements of protection. Careful and consistent placement of the microphone close to the eardrum is challenging; slight changes in microphone location and orientation can have a significant effect on measurement results. Until recently, the equipment necessary to conduct MIRE testing has been relatively fragile and expensive. In general, this limited MIRE testing to research laboratories.

Both the REAT and MIRE tests are “point tests,” meaning that they assess HPD performance in a given single situation (ie, one HPD fit, noise environment, etc). To achieve reliable estimates of performance, the tests should be repeated numerous times until the results approach a reasonable mean.
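One way to judge whether repeated point tests have approached a reasonable mean is to track the standard error of the mean. This assumes the trials are independent. With a fixed spread, the standard error shrinks in proportion to 1/sqrt(n), so quadrupling the number of trials halves the uncertainty in the mean. The helper name and trial values below are hypothetical.

```python
import math
import statistics


def summarize_trials(attenuation_db):
    """Mean attenuation and its standard error over repeated point tests
    (for example, repeated fits of the same HPD on the same ear)."""
    n = len(attenuation_db)
    if n < 2:
        raise ValueError("at least two trials are needed to estimate spread")
    mean = statistics.fmean(attenuation_db)
    sd = statistics.stdev(attenuation_db)  # sample standard deviation
    return mean, sd / math.sqrt(n)


# Hypothetical attenuation results (dB) from five refits of one earplug.
trials = [22.0, 27.0, 25.0, 19.0, 26.0]
mean, sem = summarize_trials(trials)
print(f"mean = {mean:.1f} dB, standard error = {sem:.1f} dB")
```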

Laboratory Testing


See the article by Lee Hager, “A Better Way: New Developments in Hearing Conservation” in the October 2005 HR Archives.

For purposes of labeling and rating, HPD assessments are made under controlled laboratory conditions, which in the United States are dictated by ANSI standards. While the most recent ANSI standard (ANSI S12.6-1997(R2002)) dates from 1997, the US regulation governing HPD testing and labeling refers to an even earlier version of the standard (ANSI S3.19-1974). Because this rule (40CFR211 Subpart B) references the 1974 standard directly, the test method is essentially “frozen” until the rule itself is amended. Unfortunately, the Environmental Protection Agency (EPA), the body responsible for this regulation, has not updated the rule since its promulgation in the early 1980s.

This matters because many of the differences between the older and newer standards are substantive. For example, the 1974 standard requires two rounds of REAT testing on a panel of 10 subjects, with the statistically massaged average of the 20 test results serving as the noise reduction rating (NRR) found on the HPD packaging. The updated standard contains a similar procedure with slight modifications, called “Method A,” but also includes a subject-fit procedure called “Method B.” Method B uses a test panel of 20 subjects who are audiometrically experienced (ie, they understand the hearing test and have been shown to respond reliably to test stimuli) but HPD-naive, meaning that they are not regular HPD users and have not received recent training in HPD use. The subjects are given the HPD and the manufacturer’s instructions, and the experimenter is prohibited from further intervention. The subjects don the HPD as they see fit based on the instructions, and the devices are tested.

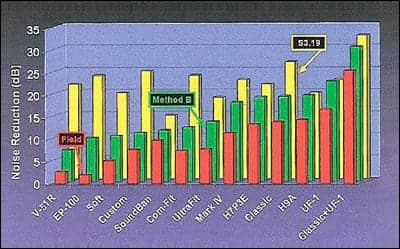

Not surprisingly, Method B results are consistently lower than Method A or S3.19 results, and they may better indicate the level of protection actual workers can expect to receive from the HPD (Figure 1).


Figure 1. Comparison of HPD test protocols from NIOSH, 1998. The yellow bars represent current NRR (S3.19) ratings, the green bars represent the “Method B” results (no formal instruction/intervention by the tester permitted), and the red bars represent values commonly seen in the field.

Rating and Labeling HPDs

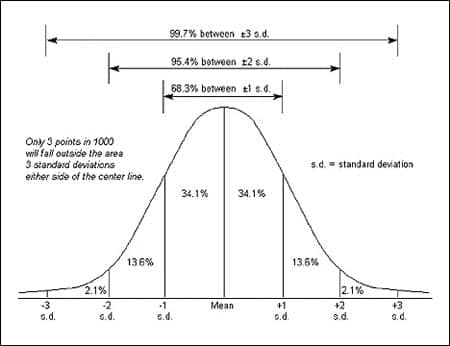

Under current regulations, the NRR, which is required on the packaging of all HPDs sold in the United States, is derived from S3.19 laboratory results, statistically massaged in an attempt to better estimate actual field performance. The NRR label reflects mean S3.19 test results minus two standard deviations, on the statistical assumption that this approach will provide at least the labeled protection for 98% of users (Figure 2). In reality, as Figure 1 shows, the NRR still substantially overstates the protection achieved in the field.


Figure 2. Standard deviation distribution.
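The 98% figure follows from assuming that attenuation is normally distributed. About 97.7% of a normal distribution lies above the mean minus two standard deviations. The sketch below shows only this statistical step. The full NRR calculation also combines per-band values with a reference noise spectrum, which is omitted here. The function names are hypothetical, and the normality assumption is exactly what field data such as Figure 1 call into question.

```python
import math
import statistics


def mean_minus_two_sd(attenuation_db):
    """Statistical step described for the NRR: mean attenuation minus
    two sample standard deviations (dB)."""
    return statistics.fmean(attenuation_db) - 2 * statistics.stdev(attenuation_db)


def share_protected(k_sd):
    """Fraction of a normally distributed population whose attenuation is at
    least mean - k_sd * SD (the standard normal CDF evaluated at k_sd)."""
    return 0.5 * (1.0 + math.erf(k_sd / math.sqrt(2.0)))


print(f"{share_protected(2.0):.3f}")  # about 0.977, i.e. roughly 98%
```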

The EPA plans to reconsider its HPD labeling rule in the near future. Research is being conducted and data collected to assist the EPA in bringing 40CFR211 up to date, with current timelines indicating that the rule will be reconsidered this year. Among the issues under consideration by the EPA are:

Given the wide variability, even in laboratory test results, is a single protection value appropriate for the label, or should the label reflect a range of values? One approach is to label HPDs with 20th and 80th performance percentile values, indicating that the lower value (the protection level met or exceeded by 80% of the test subjects) is representative of typical use, and the higher value (met or exceeded by 20% of the test subjects) is attainable by well-trained and motivated users.

Should test results be reported using Method A (experimenter-assisted testing) or Method B (subject-fit testing)? Method A may be a good indication of the potential performance of an HPD, given that the experimenter is allowed to optimize the fit, but Method B may better reflect actual end-user performance.

How can new and emerging technologies be assessed? The sound restoration earmuff, for example, is designed to provide a consistent level of sound beneath the earmuff cup by electronically amplifying quiet sounds and reducing loud ones (see article in this issue of The Hearing Review). Currently these devices can only be assessed in their “off” or passive mode, which does not reflect actual use. Similarly, active noise cancellation earmuffs, which generate “anti-noise” to cancel incoming offensive sounds, cannot be evaluated in their intended application under the current rule.

Finally, if it is important to understand how HPDs work on individual users, it may be best to test HPDs in a way that reflects individual use. New technologies are emerging to enable quick and simple field testing of HPDs, and these are described by Berger in this issue of The Hearing Review.
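The percentile-labeling option raised in the first of these issues can be sketched as follows. The sketch assumes one summary attenuation value per test subject and uses Python's `statistics.quantiles` with inclusive interpolation. The article does not specify how the percentiles would be computed, so the interpolation method, the function name, and the panel data are all assumptions.

```python
import statistics


def percentile_label(attenuation_db):
    """Return (typical, attainable) label values from per-subject attenuation.

    typical    = 20th percentile: met or exceeded by about 80% of subjects.
    attainable = 80th percentile: met or exceeded by about 20% of subjects.
    """
    # n=5 yields the 20th, 40th, 60th, and 80th percentile cut points.
    cuts = statistics.quantiles(attenuation_db, n=5, method="inclusive")
    return cuts[0], cuts[-1]


# Hypothetical attenuation results (dB) for an 11-subject panel.
panel = [10, 12, 14, 16, 18, 20, 22, 24, 26, 28, 30]
print(percentile_label(panel))  # (14.0, 26.0)
```

Reporting the pair rather than a single number keeps the spread of the panel visible on the label. Other interpolation rules would give slightly different values for small panels.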

Conclusion

HPD selection is critical for effective hearing loss prevention. Only by understanding how HPDs are evaluated and used can hearing conservation professionals effectively select HPDs appropriate for noise-exposed workers.

Lee D. Hager is a hearing loss prevention consultant for Sonomax Hearing Healthcare Inc, Toronto. Correspondence can be addressed to HR or Lee D. Hager, Sonomax Hearing Healthcare Inc, 248 Church St, Portland, MI 48875; email: .