Bilingual clients with hearing loss might benefit from language-specific hearing aid settings, depending on which languages they speak.

By Marshall Chasin, AuD

When a client who needs hearing aids speaks two languages, the question arises whether the devices’ programming can, or should, be set differently for each language. I began collecting data on this subject more than 15 years ago, and two hearing aid manufacturers currently use those findings [1,2] in their fitting software. From a clinical perspective, program 1 can be set for one language (e.g., English) and program 2 can be set for a second language.

But do hearing aids need to be set differently to be optimized for different languages?

Phonemic Spectra

In 1994, an interesting article appeared in the literature showing that the long-term average speech spectrum is similar (at least for the purposes of fitting hearing aids) across a wide range of languages [3]. This makes sense: we are all human, and speakers of any language generate speech from similar vocal tracts. Speakers of all languages have noses, oral cavities that are similar in length and volume, teeth, soft tongues, and lips. It is not surprising that the long-term average speech spectra are similar from language to language.

But this is the physical “phonetic” spectrum. What about the acoustic cues that vary from language to language, perhaps producing different speech intelligibility index (SII) values that point to an underlying “phonemic” spectrum?

Tonal and Mora-Timed Languages

Some early work in this area examined tonal languages, where pitch differences carried by the low-frequency vowels produced SII measures weighted toward more low-frequency gain [4]. The usual example is Chinese, which, unlike English, uses tones to distinguish meanings. The Chinese word “ma” can have four different meanings depending on the pitch contour during the articulation of the vowel [a]. This suggests that a tonal language should receive more low-frequency gain (and perhaps output) for lower-frequency vowels than a language such as English. Clinically, this can be accomplished with two programs: one for English and another, with more low-frequency gain prescribed, for the second language.

Another phoneme-level difference arises when the length of a phoneme, usually a vowel, is linguistically meaningful. Japanese is one such language: the length of the vowel (or mora) is linguistically important and, as with speakers of tonal languages, Japanese speakers would require more low-frequency gain than speakers of non-mora-timed languages.

However, tonal (and mora-timed) languages are “low-hanging fruit”: linguistic differences like these, at the level of the single phoneme, result only in static frequency response changes. Other linguistic differences manifest themselves at the level of the word, and even the sentence.

Word-Level (Morphological) Differences

The vast majority of languages spoken around the world do not have overly restrictive “morphological rules,” and this is certainly true of English. English allows two or even three consonants in a row, and one or two vowels side by side. The ordering of phonemes is not tightly constrained in languages such as English.

However, a few languages, such as Japanese, require a strict Consonant-Vowel-Consonant-Vowel (or CVCV…) structure. This is such a strong morphological requirement in Japanese that even “borrowed” English words such as “handbag” become “hanodobago,” which is consistent with the CVCV morphological restriction. In languages such as this, the utterance of a word would be soft (C)-loud (V)-soft (C)-loud (V)-soft… according to the CVCV… alternation. The attack and release times of the compression circuitry would need to be shorter for Japanese than for English so that the intervening quieter consonants receive enough gain to be audible [5]. This could be an additional electro-acoustic programming factor that differs between programs 1 and 2 for such a bilingual client.
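The effect of release time on a soft consonant that follows a loud vowel can be illustrated with a minimal sketch. Everything numeric here is an assumption chosen for illustration, not a fitting recommendation: the consonant and vowel levels and durations, the kneepoint, the compression ratio, and the time constants. The level detector is also simplified to smooth levels directly in dB.

```python
import math

def track_level(levels_db, attack_ms, release_ms, step_ms=1.0):
    """One-pole level detector: rises use the attack constant, falls use the release constant."""
    attack_coeff = math.exp(-step_ms / attack_ms)
    release_coeff = math.exp(-step_ms / release_ms)
    estimate = levels_db[0]
    tracked = []
    for level in levels_db:
        coeff = attack_coeff if level > estimate else release_coeff
        estimate = coeff * estimate + (1.0 - coeff) * level
        tracked.append(estimate)
    return tracked

def gain_db(detected_db, kneepoint_db=50.0, ratio=2.0, soft_gain_db=20.0):
    """Static compression rule: full gain up to the kneepoint, less gain above it."""
    excess = max(0.0, detected_db - kneepoint_db)
    return soft_gain_db - excess * (1.0 - 1.0 / ratio)

def mean_consonant_gain(attack_ms, release_ms, syllables=4):
    """Average gain applied to consonants that follow a vowel in a stylized CVCV word."""
    consonant = [50.0] * 60   # 60 ms at 50 dB SPL (illustrative values)
    vowel = [70.0] * 120      # 120 ms at 70 dB SPL (illustrative values)
    levels = (consonant + vowel) * syllables
    tracked = track_level(levels, attack_ms, release_ms)
    syllable_len = len(consonant) + len(vowel)
    gains = []
    # Start at the second syllable: the first consonant has no vowel before it.
    for start in range(syllable_len, len(levels), syllable_len):
        for i in range(start, start + len(consonant)):
            gains.append(gain_db(tracked[i]))
    return sum(gains) / len(gains)

fast = mean_consonant_gain(attack_ms=5.0, release_ms=20.0)
slow = mean_consonant_gain(attack_ms=5.0, release_ms=200.0)
print(f"fast release: {fast:.1f} dB, slow release: {slow:.1f} dB")
```

With the slow release, the detector is still "remembering" the preceding vowel when the consonant arrives, so the consonant receives less gain on average; the fast release recovers sooner.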

Sentence-Level (Syntax) Differences

In addition to phoneme-level differences (frequency response changes) and morphological differences (dynamic characteristics of the compression circuitry), there can also be sentence-level differences that require software programming changes for hearing aids.

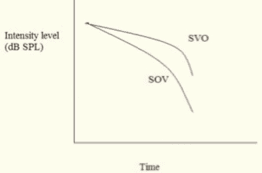

The majority of “Western” languages such as English have a Subject-Verb-Object (SVO) word order, where a sentence begins with a noun and frequently ends with a noun. Nouns tend to be at a higher sound level than other syntactic forms. And if the subject or object is a proper noun, the sound level is much higher than that of surrounding verbs or other ancillary words such as prepositions, adjectives, and adverbs. All sentences fall off in sound level from beginning to end simply because we gradually run out of air. But having a sentence-final noun (e.g., an object) mitigates this gradual fall-off near the end of a sentence in English.

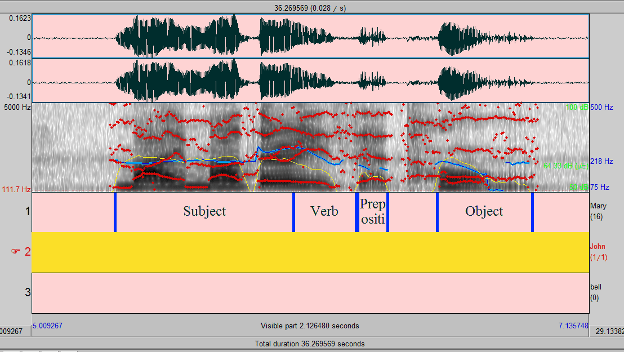

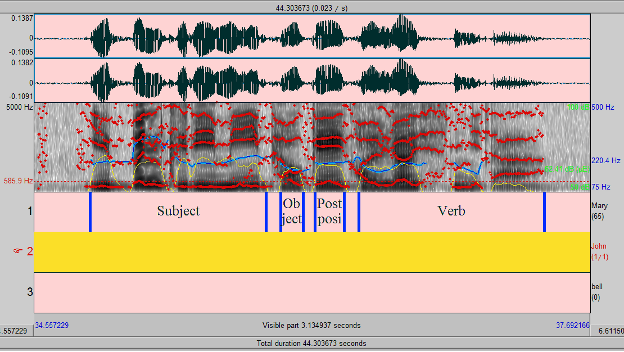

A stylized depiction of this is shown in Figure 1. Figures 2a and 2b show this same phenomenon in English (2a), an SVO language, and in Korean (2b), which is an SOV language.

Figures 2a and 2b: The top part of these two spectrograms shows the higher-level sounds when the sentence ends in an object (2a; e.g., English) versus when the sentence ends in a quieter verb (2b; e.g., Korean).

However, there is a wealth of other languages with a Subject-Object-Verb (SOV) word order, in which sentence-final utterances are generally at a lower sound level than in English because there is no sentence-final noun/object. Some examples of SOV languages are Japanese, Korean, Hindi/Urdu, Bengali, Tamil, Turkish, Armenian, and Mongolian. In these languages, more gain would be required for sentence-final sounds. Because those sentence-final sounds are soft, more gain is required for soft sounds than in an “equivalent” English program.
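One way to picture "more gain for soft sounds" is a static input/gain rule for a single compression channel. The sketch below is only an illustration under stated assumptions: the kneepoint, the gains, and the compression ratios are made-up values, and pairing a higher soft-level gain with a higher ratio (so that gain for loud inputs stays unchanged) is one possible design choice rather than a rule taken from the fitting software.

```python
def wdrc_gain_db(input_db, soft_gain_db, ratio, kneepoint_db=50.0):
    """Gain for a given input level: constant up to the kneepoint, shrinking above it."""
    if input_db <= kneepoint_db:
        return soft_gain_db
    return soft_gain_db - (input_db - kneepoint_db) * (1.0 - 1.0 / ratio)

# Hypothetical settings: the SOV program gets 5 dB more gain for soft inputs,
# and a higher ratio so that gain at 80 dB SPL matches the English program.
english_program = {"soft_gain_db": 20.0, "ratio": 2.0}
sov_program = {"soft_gain_db": 25.0, "ratio": 3.0}

for level in (40.0, 50.0, 65.0, 80.0):
    english = wdrc_gain_db(level, **english_program)
    sov = wdrc_gain_db(level, **sov_program)
    print(f"{level:.0f} dB SPL in: English {english:.1f} dB, SOV {sov:.1f} dB")
```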

Returning to the Japanese example one last time, for an English/Japanese speaker, the Japanese program would differ from the English program by having: (1) more low-frequency gain (mora-timed language), (2) faster attack and release times (rigid CVCV morphology), and (3) more gain for softer-level inputs (SOV syntactic structure).
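These three rules of thumb can be written down as a small lookup, sketched here with hypothetical names (`LanguageTraits`, `adjustments_vs_english`). The output is deliberately qualitative, because the rules above give directions of change rather than amounts.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LanguageTraits:
    tonal_or_mora_timed: bool  # pitch contour or vowel length carries meaning
    strict_cvcv: bool          # rigid consonant-vowel alternation
    sov_word_order: bool       # sentences tend to end in a quieter verb

def adjustments_vs_english(traits):
    """List the qualitative changes relative to an English program."""
    changes = []
    if traits.tonal_or_mora_timed:
        changes.append("more low-frequency gain")
    if traits.strict_cvcv:
        changes.append("shorter attack and release times")
    if traits.sov_word_order:
        changes.append("more gain for soft-level inputs")
    return changes

japanese = LanguageTraits(tonal_or_mora_timed=True, strict_cvcv=True, sov_word_order=True)
english = LanguageTraits(tonal_or_mora_timed=False, strict_cvcv=False, sov_word_order=False)

for name, traits in (("Japanese", japanese), ("English", english)):
    changes = adjustments_vs_english(traits)
    print(name + ":", ", ".join(changes) if changes else "no change")
```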

Programming Optimization for Bilingual Clients

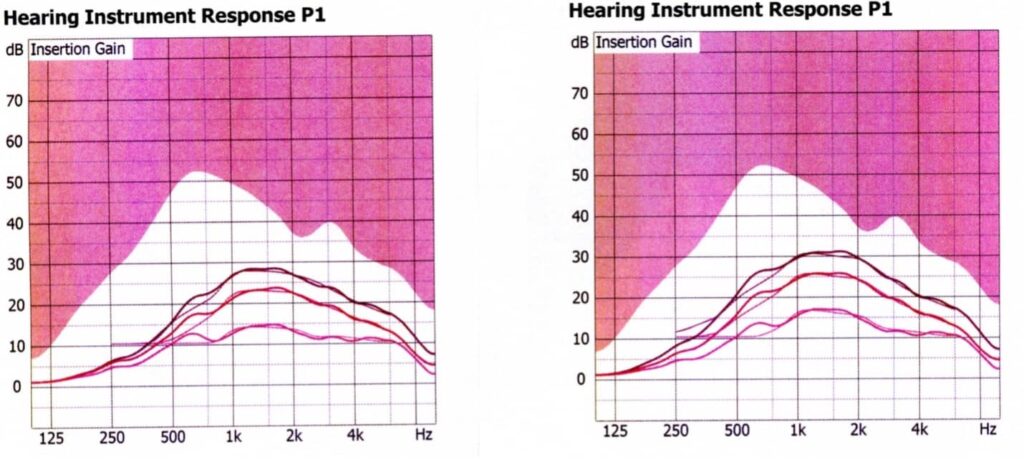

To date, two manufacturers have adopted these software modifications, but even if this is not (yet) available from one’s preferred manufacturer, the simple rules of thumb mentioned above would be sufficient to optimize the programs for bilingual clients with hearing loss. As an example, Figure 3 is a screenshot comparing two hearing aid programs for the same bilingual client who speaks both English and Russian.

Marshall Chasin, AuD, is an audiologist and the director of auditory research at the Musicians’ Clinics of Canada, adjunct professor at the University of Toronto, and adjunct associate professor at Western University.

References

1. Chasin M. How much gain is required for soft level inputs in some non-English languages? Hearing Review. 2011;18(10):10-16.

2. Chasin M. Hearing aid settings for different languages. In: Goldfarb R, ed. Translational Speech-Language Pathology and Audiology: Essays in Honor of Dr. Singh. San Diego: Plural Publishing Group; 2012:193-198.

3. Byrne D, Dillon H, Tran K, et al. An international comparison of long-term average speech spectra. J Acoust Soc Am. 1994;96(4):2108-2120. doi:10.1121/1.410152

4. Wong LL, Ho AH, Chua EW, Soli SD. Development of the Cantonese speech intelligibility index. J Acoust Soc Am. 2007;121(4):2350-2361. doi:10.1121/1.2431338

5. Kewley-Port D, Burkle TZ, Lee JH. Contribution of consonant versus vowel information to sentence intelligibility for young normal-hearing and elderly hearing-impaired listeners. J Acoust Soc Am. 2007;122(4):2365-2375. doi:10.1121/1.2773986
