The use of automatic directionality and digital noise reduction in pediatric fittings is still controversial. One of the reasons for this is that information concerning the automatic decision-making process employed by manufacturers is often vague. This article addresses the decision-making process employed in select Oticon hearing instruments.

This article was submitted to HR by George Lindley, PhD, AuD, manager of pediatric training and education for Oticon Inc, Somerset, NJ; Donald J. Schum, PhD, vice president of audiology and professional relations for Oticon Inc; and Merethe Lindgaard Fuglholt, MA, an audiologist at Oticon AS, Smoerum, Denmark. Correspondence can be addressed to George Lindley.

There are few amplification-related topics that generate greater differences of opinion than the issue of the application of advanced signal processing, such as automatic directionality and digital noise reduction, to pediatric hearing aid fittings. Opinions run the gamut from “never use until they are teenagers” to “always use even with infants.”

Like it or not, these decisions will be made more frequently in today’s dispensing environment. With pediatric hearing aid fittings, there is a high likelihood of third-party reimbursement, and these providers are increasingly approving the fitting of mid- to high-level technology. In the past, these options may simply not have been present on the devices fitted to many children. Today, however, options such as automatic directionality and digital noise reduction are becoming available even on basic models.

Unfortunately, there are many opinions but few concrete guidelines on this topic. The 2003 Pediatric Amplification Protocol1 published by the American Academy of Audiology states that directionality is not appropriate for young children since they cannot reliably switch between omnidirectional and directional settings as needed. However, in the interim, automatic directionality features have become more prevalent. The protocol also states that insufficient evidence exists to support the use of digital noise reduction in pediatric fittings. In the absence of such data, many dispensers understandably take a cautious approach when working with children.

It is important to examine the reasons why there is caution in implementing environmentally adaptive systems in hearing aids fit to children. It is not that these systems are inherently inappropriate in hearing aids; rather, the concern is that these systems, which work effectively for adults who have already developed speech and language competence, may disrupt access to important speech information that the developing child needs. If a closer examination of the performance of these systems provides evidence that they can effectively protect access to crucial speech information, then the concerns about use in the pediatric population should be reduced.

The purpose of this article is to review the implementation of features such as automatic directionality and digital noise reduction in view of the unique needs of children with hearing loss. Rather than recommending an age at which these features should be enabled (an impossible task given the limited empirical data and variability among children), the intent is to increase the comfort level of dispensers when considering this technology. Further, nothing in this article should be construed as suggesting that advanced hearing aid technology should take the place of FM usage in the classroom environment. Rather, the potential benefit of advanced hearing aid technology in classroom situations when FM is not being used and for situations outside of the classroom will be addressed.

Can Children Benefit?

In deciding whether to implement a feature such as directionality or digital noise reduction, a logical first question is whether the child will potentially benefit from such a feature. Fortunately, there is some research available that addresses at least the potential for children to benefit. Kuk et al,2 for example, found objective and subjective improvements in speech understanding in noise in a group of 7- to 13-year-olds fitted with directional hearing aids when compared with their analog omnidirectional devices.

Recently, Ricketts and Galster3 compared speech intelligibility in noise under omnidirectional versus directional settings using the same hearing aids in both conditions. The children wore the hearing aids for 1 month while adjusted for an omnidirectional response and for another month using a directional response. Objective measures of speech understanding were obtained in a simulated classroom environment, while the subjective preferences of both the children and parents were obtained using the Children’s Home Inventory for Listening Difficulties (CHILD) and a questionnaire developed specifically for the study.

The results of the study demonstrated that in certain situations directionality was beneficial when background noise was present. Conversely, in situations where the signal of interest was from the rear, directionality had a negative effect. Similar patterns were noted in the subjective data. Based on patterns obtained during complex listening situations (eg, signal of interest shifts from front of child to rear), the authors suggest that going into a directional mode inappropriately may be more detrimental than staying in an omnidirectional mode inappropriately. This type of finding provides guidance on how to evaluate whether modern automatic switching algorithms can provide the benefits of directionality yet still protect against loss of important speech information.

Can children benefit from directionality? Based on the evidence available, the answer appears to be a qualified “yes.” Children can benefit from directionality under the same acoustical environments in which adults benefit, namely, when:

- The target signal is relatively close and to the front;

- The noise is primarily to the sides and back; and

- Reverberation is not excessive.

In addition, benefit is most likely obtained when the child is old enough to consistently orient to the speaker of interest. Fortunately, Ricketts and Galster4 have recently provided evidence that children as young as 4 years of age can consistently orient accurately in a classroom setting.

Automatic Directionality and the Decision-Making Process

Since children (and many adults) are unable to consistently choose the correct microphone settings in specific environments, automatic switching is likely necessary in a pediatric fitting. Unfortunately, information concerning the automatic decision-making process employed by manufacturers is often vague, and many dispensers are concerned that an incorrect setting will be implemented. In order to demystify the issue, the automatic decision-making process employed in select Oticon hearing instruments will be reviewed.

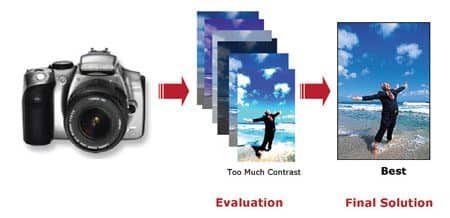

The decision-making process used in many of today’s hearing aids is analogous to that used with digital cameras. The most common setting chosen by the photographer is likely the “automatic” setting. Under this scenario, the user lets the camera decide which setting will yield the best picture. As illustrated in Figure 1, once the picture is snapped, the camera can compare what the picture would look like using any given number of parameters. Whatever combination of settings yields the best outcome (eg, best contrast) is then used.

FIGURE 1. A digital camera uses a combination of criteria in selecting the settings that will yield the best picture.

With regard to automatic decision making and pediatric hearing aid fittings, the critical issue relates to the criteria employed in making the choice between omni or directional. Possible criteria could include the overall level of sound reaching the hearing aid, the spectral characteristics of the incoming signal, and/or modulation characteristics of the incoming signal. Which criterion is given more precedence will be affected by the primary purpose for implementing the feature. If comfort is the primary goal, then a simpler criterion such as overall loudness level might suffice. Conversely, if maintenance and/or improvement of speech intelligibility is the primary goal, a more complex criterion is necessary.

Consider the scenario of a child wearing hearing instruments during a small group classroom activity. The child is seated at a small table with other groups of children working nearby, resulting in a loud overall classroom level. The teacher is circulating around the room, sometimes standing behind the individual student being addressed. If overall loudness level were the only criterion employed in deciding if directionality should be implemented, the instruments may stay in a directional mode even when the teacher is speaking to the hearing aid wearer from behind. This would not qualify as a criterion that has the goal of maximizing/maintaining speech intelligibility.

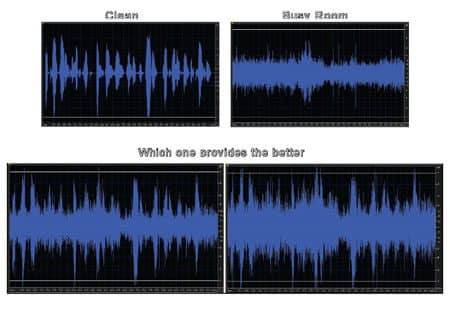

Alternatively, modulation characteristics could also be considered in determining appropriate settings. By comparing the amount of modulation present in the signal in an omnidirectional versus a directional setting, the possibility of reducing a primary signal (eg, teacher) arriving from the rear is significantly lessened. As shown in the upper-left panel of Figure 2, speech is a highly modulated signal. Background noise (including speech and non-speech sounds) serves to fill in the valleys in a speech envelope, reducing the degree of modulation measured (see upper right panel of Figure 2).

FIGURE 2. The top panels show modulation characteristics associated with speech in quiet (left) versus speech in noise recorded in a busy room (right; speech and non-speech noise). In determining whether an omnidirectional or directional setting is appropriate, the impact on degree of modulation could be considered (bottom panels).

Instead of using “clarity” as the primary criterion like the digital camera, the hearing instruments can use modulation characteristics. In that case, the combination of parameters that yields the response in the bottom left panel would be preferred to that which yields the less modulated response in the bottom right.

By analyzing which setting (omnidirectional versus directional) yields the signal with the greatest amount of modulation, it becomes less likely that a primary speech signal from behind would be attenuated. In the small-group situation discussed above, consider the outcome of a modulation analysis when the teacher was speaking to the student wearing hearing instruments from behind. If the hearing instruments were to implement a directional setting, the level of the teacher’s voice would be reduced in relation to the surrounding background noise. In this case, the degree of modulation would lessen, indicating that directionality would not be the preferred outcome (even though overall SPL would likely be lower).
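The comparison described above can be illustrated with a toy calculation. This is only a sketch under stated assumptions: the raised-cosine "speech" envelope, the power values, and the names `mixed_envelope` and `modulation_depth` are hypothetical and are not taken from any Oticon implementation. It simply shows how attenuating a rear talker more than the surrounding noise lowers the measured modulation, even though overall level also drops.

```python
import math

def speech_like_envelope(n=400, rate_hz=5.0, fs=100.0):
    """Toy speech power envelope: a raised cosine swinging between 0 and 1."""
    return [0.5 * (1.0 - math.cos(2.0 * math.pi * rate_hz * i / fs)) for i in range(n)]

def mixed_envelope(speech_gain, noise_power, n=400):
    """Speech envelope scaled by speech_gain plus steady noise that fills the valleys."""
    return [speech_gain * s + noise_power for s in speech_like_envelope(n)]

def modulation_depth(envelope):
    """(peak - valley) / (peak + valley): 0 for a flat envelope, 1 for full modulation."""
    peak, valley = max(envelope), min(envelope)
    return (peak - valley) / (peak + valley)

# Hypothetical powers for a teacher talking from behind in classroom noise.
omni = mixed_envelope(speech_gain=1.0, noise_power=0.2)
# Assumed effect of directional mode: the rear talker is attenuated far more
# than the noise arriving from all around the listener.
directional = mixed_envelope(speech_gain=0.1, noise_power=0.1)

depth_omni = modulation_depth(omni)
depth_dir = modulation_depth(directional)
preferred = "omnidirectional" if depth_omni >= depth_dir else "directional"
print(f"omni depth={depth_omni:.2f}, directional depth={depth_dir:.2f} -> {preferred}")
```

With these assumed numbers the directional signal is lower in level but also less modulated, so a modulation-based criterion keeps the omnidirectional setting.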

By going beyond basic characteristics, such as overall loudness level, a more sophisticated automatic switching approach for directionality can be employed in order to minimize the potential for a primary speaker from the rear to be inappropriately attenuated. In addition, one of the major improvements to digital hearing instruments over the past few years is the speed at which this type of decision can be made. Analysis occurs continuously and decisions are made in the span of milliseconds.

|

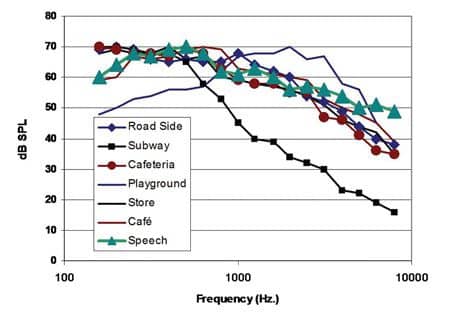

| FIGURE 3. Long-term average spectra of speech and common back- speech noise, similar to that used in some ground noises. |

Digital Noise Reduction

Automatic decision making is also used when implementing digital noise reduction. The primary goal of digital noise reduction is increasing listening comfort in background noise. Given the amount of spectral overlap between speech and common background noises, the potential for improvements in speech intelligibility is limited.5 As evident in Figure 3, reduction of any of the background noises in certain frequency regions would also result in reduction of the primary speech signal. However, there are situations in which the signal in a given frequency region is dominated by noise, and therefore gain reduction, while not improving speech intelligibility, also will not decrease it.

The individual may benefit from a more comfortable signal. The devil, of course, is in the details. Digital noise reduction that is too aggressive may result in increased comfort, but at the cost of reducing available speech information. The amount of noise reduction that may be appropriate for an adult who develops hearing loss later in life may not be appropriate for a child still acquiring speech and language. As with directionality, an intelligent approach to digital noise reduction is necessary in order to ensure that critical speech information is not lost due to noise reduction.

Many digital noise reduction algorithms are based on some type of modulation analysis in individual channels.6 Additional factors such as overall level and spectral characteristics of the signal can also play a role. The relative importance of these factors and others in the decision-making process varies among manufacturers. Therefore, the effect of any given DNR algorithm on a specific signal can vary substantially among different hearing aids. Hearing care professionals involved in the fitting of pediatric patients need to have an understanding of the techniques used to ensure the approach is not overly aggressive.

Synchrony Detection

Most Oticon hearing instruments employ a novel approach in the implementation of digital noise reduction. This approach, called Synchrony Detection, goes a step further than traditional modulation-based approaches when determining the relative contribution of speech versus noise in a given channel.5 In traditional approaches to noise reduction, decisions are based primarily on the amount of modulation occurring in each individual channel. In channels with little modulation, the assumption is that the energy in that region is primarily associated with background noise and that little to no speech information is present. As such, gain reductions are applied in order to increase listening comfort.
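As a rough illustration of the traditional per-channel approach just described, the sketch below maps each channel's modulation depth to a gain reduction. The threshold, the 10 dB maximum, the linear mapping, and the function name `channel_gain_reduction_db` are all hypothetical choices made for the example; actual algorithms differ among manufacturers and are not specified in this article.

```python
def channel_gain_reduction_db(modulation_depth, max_reduction_db=10.0, threshold=0.5):
    """Return the gain reduction (dB) for one channel.

    Channels with modulation at or above the threshold are treated as
    speech-dominated and left alone; flatter channels get more reduction.
    """
    if modulation_depth >= threshold:
        return 0.0
    return max_reduction_db * (1.0 - modulation_depth / threshold)

# Hypothetical modulation depths measured independently in three channels.
channel_depths = {"250 Hz": 0.1, "1 kHz": 0.6, "4 kHz": 0.3}
reductions = {band: channel_gain_reduction_db(d) for band, d in channel_depths.items()}

for band, reduction in reductions.items():
    print(f"{band}: reduce gain by {reduction:.1f} dB")
```

Because each channel is judged in isolation, speech that is partly masked in one channel can still lose gain there, which is the limitation that a cross-channel analysis is meant to address.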

With synchrony detection, the algorithm looks for modulation that is occurring across channels, rather than in each channel independently. The existence of co-modulation is a strong speech-like characteristic. Consider the production of the vowel /a/. When an /a/ is produced, there is significant energy at three frequencies (formants) centered at approximately 710 Hz, 1100 Hz, and 2400 Hz. Different vowels and speakers will exhibit energy at different frequencies. What is important, however, is that the energy at these frequencies will occur simultaneously during production of the vowel.

By looking for co-modulation across channels—as opposed to the more traditional approach of looking at modulation characteristics in each channel independently—synchrony detection can find speech in greater levels of background noise. Since gain reduction is minimized whenever speech is detected within the background noise, the functional result is a more speech-focused approach to noise reduction where preservation of speech information is given priority over reduction in gain.
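The idea of co-modulation can be sketched as the average correlation between channel envelopes. This is an illustrative assumption, not a description of how Synchrony Detection is actually implemented; the synthetic envelopes, the use of Pearson correlation, and the names `pearson` and `mean_comodulation` are hypothetical.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def mean_comodulation(envelopes):
    """Average pairwise correlation across channel envelopes."""
    n = len(envelopes)
    pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
    return sum(pearson(envelopes[i], envelopes[j]) for i, j in pairs) / len(pairs)

rng = random.Random(0)  # fixed seed so the toy data are repeatable
n_frames = 400

# Speech-like case: three channels (think of three formant regions) that rise
# and fall together, each with a little independent fluctuation added.
shared = [0.5 * (1.0 - math.cos(2.0 * math.pi * 5.0 * t / 100.0)) for t in range(n_frames)]
speech_channels = [[s + rng.uniform(0.0, 0.2) for s in shared] for _ in range(3)]

# Noise-like case: three channels fluctuating independently of one another.
noise_channels = [[rng.uniform(0.0, 1.0) for _ in range(n_frames)] for _ in range(3)]

speech_score = mean_comodulation(speech_channels)
noise_score = mean_comodulation(noise_channels)
print(f"speech-like co-modulation={speech_score:.2f}, noise-like={noise_score:.2f}")
```

In this toy example the channels that fluctuate together score close to 1, while independently fluctuating channels score close to 0, so a detector keyed to cross-channel synchrony would flag the first case as speech and withhold gain reduction.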

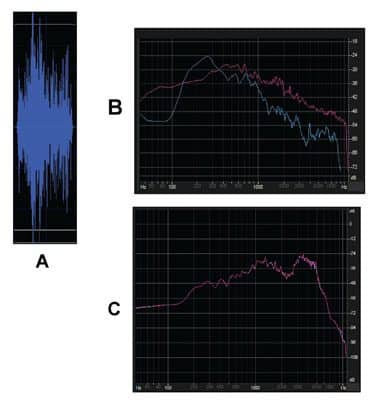

FIGURE 4. Panel A shows the modulation characteristics associated with sounds in a busy office; Panel B shows the long-term average spectra of speech versus office noise; and Panel C shows the impact of digital noise reduction in this environment.

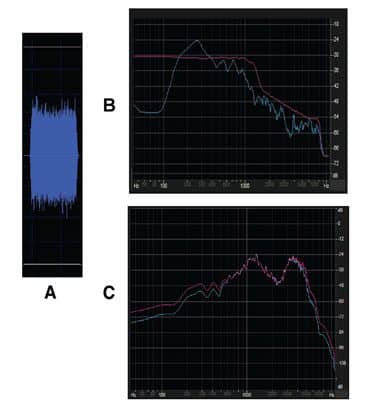

FIGURE 5. Panel A shows the modulation characteristics associated with speech-weighted noises; Panel B shows the long-term average spectra of speech versus speech-weighted noise; and Panel C shows the impact of digital noise reduction in this environment when using Vigo Pro hearing aids.

Figure 4 demonstrates the impact of digital noise reduction in a busy office setting. Figure 4a shows the modulation characteristics of the combined speech and noise signal reaching the hearing aid microphones. In Figure 4b, the blue line represents the long-term average spectrum of the speech signal, and the red line represents the long-term average spectrum of the background noise consisting of both speech and non-speech sounds. Because speech is being detected in all channels, the impact of digital noise reduction is negligible even though the noise level is higher than the speech at most frequencies. Figure 4c shows the response with digital noise reduction in a Vigo Pro hearing aid turned off (red line) versus on (blue line).

In Figure 5, the background noise is speech noise, similar to that used in some real-ear units. In this case, although the noise has a similar frequency weighting to speech (except for the low frequencies), it is steady-state in nature and does not demonstrate the modulation characteristics of actual speech. Gain reduction is applied in the lower frequencies, since the noise is significantly more intense than the actual speech and modulation is not being measured. Because the gain for low frequency noise (and speech) is reduced, the person may experience greater listening comfort, although speech intelligibility will not likely improve from DNR alone. As with the Oticon approach to directionality, this approach to noise reduction is designed to provide the benefits of signal processing without threatening vital speech information.

The Systems in Action

The preceding sections have described the decision-making approach taken with directionality and noise reduction in Oticon hearing instruments. In the following section, the implementation of this decision-making will be demonstrated using simulated listening environments. The goal of this activity was to verify that appropriate decision-making was taking place and to verify that there was not a reduction in the audibility of the target speaker.

Test environment. Testing was done in a large sound booth with a KEMAR manikin situated in the center of the room. Six speakers were placed at 0°, 60°, 120°, 180°, 240°, and 300° azimuth. The KEMAR was fitted with a pair of Vigo Pro BTEs. Throughout testing, real-ear measurements were made using an Audioscan Verifit unit, and digitized signal recordings were made from the KEMAR ear canals. Signal presentation and analysis were conducted using Adobe Audition.

Several signals were presented throughout the testing. The speech stimulus consisted of target sentences from the QuickSIN. These were spoken by a female speaker, and the breaks between sentences were removed in order to simulate continuous discourse. Noise consisted of either a speech babble stimulus or pink noise. The intensity levels of the speech and noise varied (indicated specifically in the examples below).

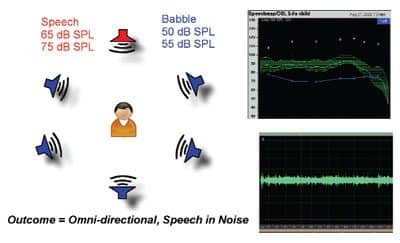

Scenario 1: Target signal from behind. One situation of concern regarding the use of directionality is that it will inappropriately be implemented when the signal of interest originates from behind the listener. However, if the decision to go into a directional mode is primarily based on modulation characteristics, the potential for this to occur is reduced.

FIGURE 6. Directionality and digital noise reduction settings obtained in noise when target signal is presented from the rear.

In Scenario 1, a 65 dBSPL speech signal was presented from directly behind the KEMAR while 50 dBSPL of noise was presented from the remaining speakers as shown in Figure 6 (overall noise level was approximately 56 dBSPL). As expected, the hearing aids remained in an omnidirectional mode. An identical outcome was obtained when higher speech and noise levels were employed.
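The relationship between the per-speaker and overall noise levels can be checked with a simple power sum. The sketch assumes that each of the five remaining speakers delivered 50 dB SPL of uncorrelated noise at the KEMAR position, which the description above does not state explicitly; the function name `combine_levels_db` is hypothetical.

```python
import math

def combine_levels_db(levels_db):
    """Power-sum uncorrelated sources whose levels are given in dB SPL."""
    total_power = sum(10.0 ** (level / 10.0) for level in levels_db)
    return 10.0 * math.log10(total_power)

# Five noise speakers at an assumed 50 dB SPL each; speech at 65 dB SPL.
noise_total_db = combine_levels_db([50.0] * 5)
snr_db = 65.0 - noise_total_db
print(f"combined noise = {noise_total_db:.1f} dB SPL, SNR = {snr_db:.1f} dB")
```

Under these idealized assumptions the combined noise comes to about 57 dB SPL, within roughly 1 dB of the approximate overall level reported, for a signal-to-noise ratio of about +8 dB.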

Figure 6c shows the modulation characteristics of the combined speech and noise signal. If the hearing aids had gone into a directional mode, the level of the signal arriving from the rear would have been reduced, resulting in a lower intensity level. However, the modulation characteristics associated with the target signal also would have been reduced, a negative consequence. Therefore, the hearing instruments correctly remained in an omnidirectional setting. When speech was presented from the side, omnidirectional was again correctly chosen.

With regard to digital noise reduction, the system has detected a combined speech and noise signal (options are speech only, speech in noise, and noise only). Because speech has been detected, any noise reduction applied would be minimal. As evident in the speech mapping outcome (Figure 6b), audibility has not been negatively impacted by having the digital noise reduction algorithm activated.

|

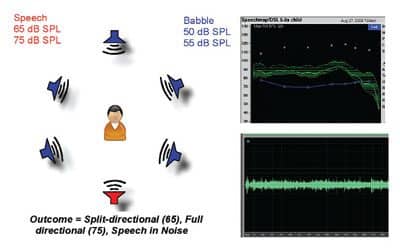

| FIGURE 7. Directionality and digital noise reduction settings obtained in noise when target signal is presented from the front. |

Scenario 2: Target signal from front. In Scenario 2, the target speech signal was presented from the front and the speech babble was presented from the sides and back (Figure 7). In this situation, directionality could prove beneficial and a directional response was implemented by the hearing instruments. When the speech and noise levels were moderate (speech at 65 dBSPL, overall noise level approximately 56 dBSPL), a “split-directional” response was implemented.

Split Directionality is an Oticon feature where the instrument remains in omnidirectional mode in the low frequencies (<1000 Hz) but directional in the higher frequencies. There are several benefits to providing this type of processing. When directionality is implemented across all frequencies, there is a reduction in low frequency gain for sounds arriving from the front as well as the sides/back. This reduction in low frequency gain can have a negative impact on sound quality, and for children with low frequency hearing loss, it can result in less speech audibility for sounds arriving from the front.

By remaining omnidirectional in the low frequencies, this issue is avoided without a significant decrement in the benefit provided by directionality. A more traditional approach to dealing with this low frequency cut involves providing an “equalized” or “compensated” low frequency response when in directional mode. While this improves low frequency audibility, it can increase the level of circuit noise so that directionality is not implemented until higher levels of background noise are present. Because circuit noise is less of an issue with Split Directionality, directionality can be used in lower levels of background noise.

As the levels of the background noise and target speaker increase, however, the issue of low frequency audibility and circuit noise becomes less apparent. In this case, a full-directional response (directionality at all frequencies) can be implemented. As seen in Scenario 2, a full-directional response was chosen when the speech and noise levels were increased. The ability to choose between omnidirectional, split-directional, and full-directional allows the instruments to use directionality intelligently while minimizing potential negative outcomes such as decreased low frequency audibility and/or sound quality.

In Scenario 2, a directional response was selected for two reasons. First, when comparing output between omnidirectional and directional settings, the output is lower when a directional response is selected since signals arriving from the rear and sides are attenuated. However, this criterion in and of itself was not sufficient to implement directionality. The modulation characteristics also improve in this situation as there is less background noise to fill in the “valleys” in the primary speech envelope. Since output is reduced and modulation characteristics have improved, a directional response was selected.
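The two-part rule described above can be written as a short decision function. This is a sketch of the logic as stated in the text, not the instruments' actual code: the function name `choose_microphone_mode` and all numeric inputs are hypothetical, and the choice between split-directional and full-directional is left out because the article gives no thresholds for it.

```python
def choose_microphone_mode(omni_level_db, dir_level_db, omni_depth, dir_depth):
    """Select directional only when output drops AND modulation improves."""
    output_reduced = dir_level_db < omni_level_db
    modulation_improved = dir_depth > omni_depth
    if output_reduced and modulation_improved:
        return "directional"
    return "omnidirectional"

# Talker in front, babble from the sides and back (hypothetical measurements):
# directional lowers output and deepens the modulation of the speech envelope.
front_talker = choose_microphone_mode(66.0, 64.0, omni_depth=0.55, dir_depth=0.75)

# Talker behind: directional lowers output but flattens the speech envelope.
rear_talker = choose_microphone_mode(66.0, 60.0, omni_depth=0.55, dir_depth=0.30)

print(front_talker, rear_talker)
```

Requiring both conditions is what makes the rule conservative: a drop in output alone, as in the rear-talker case, is not enough to leave the omnidirectional setting.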

|

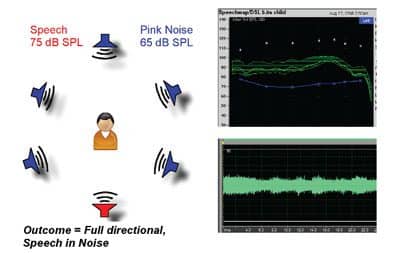

| FIGURE 8. Directionality and digital noise reduction settings obtained in noise when target signal is presented from the front. |

Scenario 3: High non-speech noise levels. In Scenario 3, the speech babble noise was replaced with pink noise (Figure 8). Speech was presented at 75 dBSPL, and the surrounding pink noise was presented at an overall level of approximately 71 dBSPL. Again, because the modulation characteristics of the speech improved when directionality was implemented, a directional response was selected. Although the non-speech noise level is quite high, the DNR mode selected was “Speech in Noise,” resulting in little gain reduction and maintaining audibility as evident in the speech mapping curve.

Note the change in the speech envelope characteristic as directionality and digital noise reduction are implemented. After about 5 seconds, the envelope takes on a greater degree of modulation as the impact of reducing the noise from behind and to the sides becomes evident. This outcome again supports the characterization of the automatic processing in Oticon hearing instruments as speech-focused in nature. It takes more than a reduction in overall signal level for directionality to be selected. In addition, the modulation characteristics need to improve. A benefit of this approach is a much lower likelihood that directionality will be implemented when the speech signal of interest arrives from the back or sides.

When viewed together, the above exercises provide evidence that the decision-making process used in Oticon hearing instruments is conservative in nature and has a “speech first” focus as opposed to “comfort first.” However, it is impossible to simulate every possible situation a child may encounter in a laboratory setting.

Fortunately, Oticon’s Genie fitting software contains several tools that dispensers can use in deciding if they are comfortable with the decision-making process. The “Live Demonstration” feature can be used to determine what decision the hearing instruments are making in any environment. Dispensers can simulate a specific environment in the clinic and view in real time the decision-making results from the hearing instruments while they are worn by the child (and connected via a Noah Link). As wireless programming connections become more prevalent, it also becomes possible to use Genie Live in real-world environments (eg, school cafeteria) to assess the decision-making process.

Another tool is the “Memory” feature built into the fitting software. Through this feature, the dispenser can see what percentage of the time a given feature (eg, split directionality) is implemented. For the child in school using an FM system, advanced features such as DNR and directionality should be used a relatively small percentage of the time. This is especially true when a speech-focused approach is employed.

So When Should These Features Be Activated?

As discussed at the beginning of this article, there are no specific guidelines regarding at what age features such as directionality and noise reduction should be implemented. With directionality, it is important that the child is regularly facing the signal of interest in most communication situations without the need for prompting on the part of the partner.

In addition, a needs assessment can be conducted to determine if there are situations in which directionality and/or noise reduction could be beneficial. Pediatric-focused tools such as the CHILD, COW, etc, can be used to help identify specific situations of need. If the child, caregiver, and/or teacher identifies situations in which the child is struggling and which could be improved via directionality, a trial with these features enabled could be considered. The same assessment tools can be used to determine if improvement in performance has resulted from implementation of specific features. Situations in which improvement could be obtained would be the same as those for adults (ie, noise predominantly from the sides and back and less reverberant environments).

Ultimately, however, the clinician needs to be comfortable with the decision-making process employed by the hearing instruments. No algorithm will be correct 100% of the time. However, by using a smart set of decision-making criteria, the potential for error can be minimized. And by using a conservative speech-focused approach, when a mistake is made, it will likely be that a particular feature set was not implemented when potential benefit may have been obtained. Future research will address the appropriateness of automatic implementation of advanced features in real-world settings.

References

1. American Academy of Audiology. 2003 Pediatric Amplification Protocol. Available at: www.audiology.org/resources/documentlibrary/Documents/pedamp.pdf. Accessed April 27, 2009.

2. Kuk FK, Kollofski C, Brown S, Melum A, Rosenthal A. Use of a digital hearing aid with directional microphones in school-aged children. J Am Acad Audiol. 1999;10(10):535-548.

3. Ricketts TA, Galster J. Directional benefit in simulated classroom environments. Am J Audiol. 2007;16:130-144.

4. Ricketts TA, Galster J. Head angle and elevation in classroom environments: Implications for amplification. J Speech Lang Hear Res. 2008;51(2):516-525.

5. Schum DJ. Noise reduction in hearing aids: What works and why? News From Oticon. April 2003.

6. Bentler R, Chiou L. Digital noise reduction: An overview. Trends Amplif. 2006;10(2):67-82.

Citation for this article:

Lindley G, Schum D, Fuglholt M. Directionality and Noise Reduction in Pediatric Fittings. Hearing Review. 2009;16(5):34-43.