Ryan J. Mills, MA, is a staff audiologist at the Department of Veterans Affairs, Cincinnati, Ohio, and a PhD student at the University of Cincinnati. Doug Martin, PhD, is an adjunct associate professor at the University of Cincinnati.

It is common knowledge to hearing health care professionals that individuals with hearing impairment have a great deal of difficulty understanding speech in the presence of background noise, even with appropriately fitted hearing aids. This was especially true when hearing aids consisted of linear amplifiers that tended to degrade speech when embedded in background noise due to “distortion, narrow bandwidth, irregular response, and inappropriately adjusted (or available) frequency response.”1

Since the advent of both digital signal processing (DSP) and directional microphone systems, hearing aid manufacturers and dispensing professionals are sometimes tempted to make claims that, in aggregate, give the consumer the impression that these systems can normalize hearing and make communication in noisy situations an easy task. While directional microphone systems, when partnered with DSP, do appear to be a great benefit to hearing aid users confronted with background noise, it is important to understand both the potential benefits and continuing limitations of these technologies.

The combination of a directional microphone system and a DSP system is an attempt to achieve two goals. The first is managing the comfort level of noise, which the DSP system typically accomplishes through a combination of sound isolation, compression, and output limiting based on a predetermined algorithm. Alone, this may not improve speech understanding per se; rather, it maintains the current level of audibility by not distorting the speech signal. The second goal, which falls to the directional microphone system, is focusing the directionality of audition toward the signal and away from the noise.

The concept of using directional microphones in hearing aids is certainly not new. Directional microphones first emerged in the early 1970s.2,3 Although hearing aid users with directional systems demonstrated significant benefit in background noise,4,5 these systems were not used routinely in clinical practice through the mid-1980s. In 1983, Mueller, Grimes, and Erdman6 reported that only 20% of behind-the-ear (BTE) hearing instruments were dispensed with directional microphones, even though the technology was readily available. The primary reason for this low rate of acceptance was the lack of a manual omnidirectional switch in most early directional aids, which left these instruments unable to function in an omnidirectional mode. That mode has since been shown to be the most used mode even in directional aids.

More modern directional microphones have been tested and proven, and they have become more common in clinical practice. Directional microphone systems have proven more successful than omnidirectional microphone strategies in improving signal-to-noise ratio (SNR) in both children7,8 and adults.9,10 Additionally, they have become more convenient for the user, since many hearing instrument manufacturers offer directional microphone systems that automatically engage in noisy environments, eliminating the need for a manual switch.

Examining Directional Systems

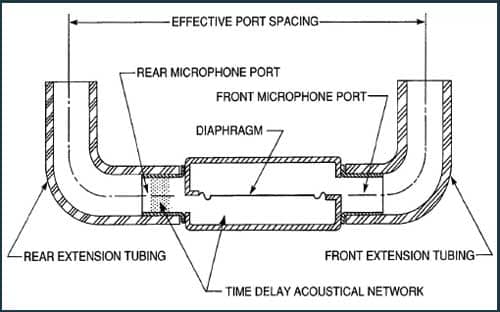

Before investigating the advantages or disadvantages of different types of directional microphones, it is important to understand how they work. A single fixed-directional microphone system consists of a pressure microphone with two sound ports connected to rubber or vinyl tubing (Figure 1).11 Sound pressure travels from the outside of the hearing aid, follows each tubing pathway through the sound ports, then arrives in two separate cavities. The cavities are on opposite sides of the microphone diaphragm. When air pressure impinges on the two surfaces of the diaphragm, the difference in pressure results in differential movement of the diaphragm and generates an electrical output. If the two sources of air pressure impinging on the opposing surfaces of the diaphragm are identical in magnitude and phase, they cancel each other out and no electrical output is produced.

FIGURE 1. The mechanics of a single fixed-directional microphone as described in Knowles Electronics’ Technical Bulletin 21.11

To achieve a front-to-back directional effect, a time-delay network is applied to the rear port of the microphone (the port facing the rear of the user). If the port openings are the correct distance apart, a sound wave arriving from behind the user that passes the rear port opening, travels on to the front port, and continues to the diaphragm takes the same amount of time as the same wave entering the rear port and passing through the time-delay network to the diaphragm. The sound pressure therefore reaches both sides of the diaphragm at the same time, resulting in no electrical output for that signal.11 This basic microphone strategy results in a cardioid pattern, which can be affected by the wearer’s head and torso, the positioning of the microphone in the hearing aid case, the size and shape of the case,2 and changes in barometric pressure.11
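The cancellation described above can be illustrated numerically. The following is a minimal sketch rather than anything taken from the Knowles bulletin: it treats the two ports as ideal points, assumes a plane-wave pure tone, and ignores the head, torso, and case effects just mentioned. The function name `port_response`, the 12 mm port spacing, and the speed-of-sound value are assumptions chosen for illustration.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def port_response(theta_deg, freq_hz, port_spacing_m, internal_delay_s):
    """Output magnitude of an idealized two-port (pressure-difference) microphone.

    theta_deg: arrival angle of the sound, 0 = directly in front of the user.
    The front-port signal is taken as the reference. The rear-port signal lags
    it by the external travel time between the ports plus the internal delay
    of the time-delay network, and the diaphragm responds to the difference.
    """
    external_delay = port_spacing_m * math.cos(math.radians(theta_deg)) / SPEED_OF_SOUND
    total_delay = external_delay + internal_delay_s
    # Difference between the reference tone and its delayed copy.
    return abs(1 - cmath.exp(-2j * math.pi * freq_hz * total_delay))


if __name__ == "__main__":
    spacing = 0.012                       # hypothetical 12 mm port spacing
    delay = spacing / SPEED_OF_SOUND      # internal delay matched to the spacing
    for angle in (0, 90, 180):
        print(angle, port_response(angle, 1000.0, spacing, delay))
```

With the internal delay matched to the port spacing, the response to a tone from directly behind (180°) falls to zero while a tone from the front is passed, which is the cardioid behavior described in the text.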

Instead of using a single microphone system, Phonak ushered in a new era of directional microphone systems for hearing aids in 1995 by using two independent microphones calibrated to work together.12 With this type of system, the time-delay network can be electronically applied by the digital programming in the hearing instrument. The use of programmable technology with this type of system allows for increased flexibility because the time delay can be adjusted by the programmer. Different polar plots can be used for different noise environments. The downside is that the variability of distance between the two microphones for custom hearing instruments adds another variable, and room for error. This is not a problem for BTE instruments because the design of these instruments is standardized.
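To make the link between the adjustable time delay and the resulting polar plot concrete, here is a hedged sketch using the standard low-frequency approximation for a first-order (two-microphone, delay-and-subtract) system. It is not a description of any manufacturer's implementation. The names `relative_sensitivity` and `null_angle_deg` are hypothetical, and `delay_ratio` is an assumed parameter defined as the electronic delay divided by the acoustic travel time between the two microphones.

```python
import math


def relative_sensitivity(theta_deg, delay_ratio):
    """Sensitivity at theta_deg relative to the front (0 degrees).

    Low-frequency approximation for an idealized first-order system:
    |delay_ratio + cos(theta)| / (delay_ratio + 1).
    """
    return abs(delay_ratio + math.cos(math.radians(theta_deg))) / (delay_ratio + 1.0)


def null_angle_deg(delay_ratio):
    """Angle (degrees from the front) at which the pattern has its null."""
    if not 0.0 <= delay_ratio <= 1.0:
        raise ValueError("delay_ratio must lie between 0 and 1")
    return math.degrees(math.acos(-delay_ratio))


if __name__ == "__main__":
    # A ratio of 1 gives the cardioid (null directly behind the listener);
    # smaller ratios move the null toward the side.
    for ratio in (1.0, math.cos(math.radians(45)), 0.0):
        print(round(ratio, 3), null_angle_deg(ratio))
```

Under these assumptions, a delay equal to the full inter-microphone travel time places the null at 180°, and shortening the delay moves the null forward toward 90°. This is one way to picture how a programmer can select different polar plots without any mechanical change to the instrument.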

It might be worthwhile noting that the human ear isn’t necessarily configured the same as a directional hearing device. The ears of humans have probably evolved in response to both our advanced communication needs and our need for survival in a world full of predators and bodily threats. Several physical acoustical measurement studies—some going as far back as 30 years ago—have shown that sound pressure levels at the tympanic membrane are not strongest for sounds arising from directly in front of the listener. Sound selectivity varies with frequency, but generally speaking, our ears have a bias for those sounds that are greater than 500 Hz in the region to the side and partly around the back of the ear’s ipsilateral side (for a more detailed discussion, see Schweitzer and Jessee13). In other words, the ear’s natural preference is for higher frequencies (eg, the snap of a twig or shout of a walking companion) at our sides and toward the rear rather than for face-to-face communication (the direction in which our vision is safeguarding us). This fact should not be taken to mean that the engineering rationale for directional-microphone hearing aids is misguided or flawed; however, it might cast directional microphones in the role of being an “evolutionary compensator” for a modern world that is not (as much) dominated by lions, tigers, bears, and bandits.

Fixed directional microphone systems provide a single static response pattern that focuses the directionality toward the front of the listener. This strategy depends on the assumption that the listener is most likely facing the speaker, and the noise will be spatially separated from the speaker. Obviously this is not always the case, as the noise may occur in any direction, including the front of the listener, and the target signal may not necessarily originate from the front of the listener.

Adaptive Directionality

This situation brought about the advent of adaptive directional microphone systems. Adaptive directional microphones attempt to locate both the signal and the noise, focusing the directionality toward the signal and away from the noise. The idea behind this system is to alleviate the need for the listener to look toward the signal, considering that the desirable speech signal originates from a direction other than the front of the listener more than 20% of the time.14 These systems function on the principle that the noise is a relatively constant sound, while speech is variable in frequency, intensity, and duration. Once a signal is identified as “speech,” the time delay will be adjusted such that the microphone will demonstrate its strongest directional effect toward the target source.
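One simple way to picture the adaptive adjustment of the time delay is as a search for the delay that minimizes the output produced by a steady noise source. The sketch below is an illustration under strong assumptions and does not reproduce any commercial algorithm. It considers a single pure-tone noise source and omits the speech-versus-noise classification step described above. It also steers the null toward the noise rather than steering maximum sensitivity toward the talker. All names (`mic_phasors`, `adapt_delay`) and the 12 mm spacing are hypothetical.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def mic_phasors(angle_deg, freq_hz, spacing_m):
    """Front and rear microphone phasors for a plane-wave tone from angle_deg."""
    lag = spacing_m * math.cos(math.radians(angle_deg)) / SPEED_OF_SOUND
    return 1 + 0j, cmath.exp(-2j * math.pi * freq_hz * lag)


def adapt_delay(front, rear, freq_hz, spacing_m, steps=200):
    """Grid-search the electronic delay that minimizes output power.

    The search is limited to delays between 0 and spacing/c so that the null
    stays in the rear hemisphere and sensitivity to the front is retained.
    Only the two microphone signals are used; the noise angle is not known.
    """
    max_delay = spacing_m / SPEED_OF_SOUND
    best_delay, best_power = 0.0, float("inf")
    for i in range(steps + 1):
        delay = max_delay * i / steps
        out = front - rear * cmath.exp(-2j * math.pi * freq_hz * delay)
        power = abs(out) ** 2
        if power < best_power:
            best_delay, best_power = delay, power
    return best_delay


if __name__ == "__main__":
    spacing = 0.012  # hypothetical 12 mm microphone spacing
    front, rear = mic_phasors(135.0, 1000.0, spacing)
    delay = adapt_delay(front, rear, 1000.0, spacing)
    print(delay / (spacing / SPEED_OF_SOUND))  # delay as a fraction of spacing/c
```

A real system would have to run such an adjustment continuously, on broadband signals, and only when the input has been classified as noise. The sketch shows only why changing the delay changes the direction that is suppressed.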

Given the additional level of complexity introduced by these adaptive directional systems, a number of recent studies have attempted to determine the most effective system for improving SNR for hearing aid users. Bentler et al15 looked at five microphone conditions: omnidirectional, cardioid, hypercardioid, supercardioid, and “monofit” (ie, one hearing aid and one unaided ear). In the study, the left hearing aid was set to omnidirectional and the right hearing aid was set to hypercardioid, and subjects were tested under three stimulus presentation conditions: speech in quiet, speech in noise, and music. The Hearing in Noise Test (HINT) and Connected Speech Tests (CST) were used as speech stimuli. All test conditions were subjectively rated by the test subjects, and the testing was conducted in a diffuse soundfield using eight speakers to introduce the speech signal and the background noise. The speakers were arranged so that they formed the corners of a cube. Not surprisingly, the omnidirectional condition was found to be significantly worse than all other conditions, indicating that any type of directionality was an improvement over none at all. However, there were no significant differences found among the different directional microphone conditions. Oddly, even the “monofit” condition was statistically equivalent to the other directional microphone conditions. The results of this study suggested that, while there does seem to be an advantage of directionality over an omnidirectional strategy, the specific type of directional strategy used does not seem to matter.

Kuk et al16 evaluated the function of a “fully adaptive” directional microphone system, compared to an omnidirectional and a fixed (hypercardioid) directional microphone strategy. The study was an attempt to “demonstrate the sensitivity limitation of a fixed directional microphone, as well as to evaluate the clinical efficacy of the fully adaptive directional microphone.” In this study, five speakers were placed in a soundfield at 0°, 45°, 90°, 135°, and 180° azimuths. Aided audiometric thresholds were assessed at 500, 1,000, 2,000, and 4,000 Hz at all azimuths. Overall, thresholds were found to be worse using the fixed directional microphone strategy, especially at the 135° azimuth position (the null of the hypercardioid polar pattern), while the omnidirectional and adaptive directional strategies were essentially the same. Additionally, 500- and 1,000-Hz thresholds were worse with the fixed directional microphone, even at the 0° azimuth presentation. Speech recognition testing was conducted at 50, 65, and 75 dB SPL in quiet using the computer assisted speech perception assessment (CASPA) test. This testing revealed that phoneme scores were similar for the omnidirectional and adaptive directional microphones at all levels and azimuths. Performance in noise was similar between the fixed and adaptive directional microphone strategies. In quiet, however, there did seem to be an azimuth effect with the fixed directional microphone system: word recognition was significantly worse at the 45°, 90°, 135°, and 180° azimuths, with 135° (the null) being the worst azimuth for the signal source. This effect was most prominent at the 50 dB SPL presentation level, and performance improved with increased presentation level. The adaptive directional and omnidirectional systems functioned essentially the same in quiet.

Available studies raise questions as to the advantages offered by the use of state-of-the-art directional microphone systems in hearing aids. While they should offer substantial benefits, at least in theory, the reality tends not to live up to the hope. It was noted that directional microphone systems help focus on the desired signal when it originates from the front. But what if the signal and the noise both originate from the front? Additionally, what if the noise is also a speech signal? Is either system helpful in these situations?

The goal of the remainder of this review is to determine if one type of system seems to provide a greater benefit to the listener, and under what conditions. We will discuss how a normally functioning auditory system deciphers multiple sounds and the problems with duplicating this process artificially.

Directional Mics: One Element in a Complex Auditory Processing System

The way a normal human auditory system deciphers various incoming sounds, a process referred to as auditory scene analysis,17 is very complex and not completely understood. In fact, Bregman17 devoted an entire text to this topic, yet many questions remain unanswered.

Perhaps the point of greatest significance to this discussion is the observation that characteristics of auditory signals such as pitch, timbre, and spatial location of voice are easily detectable by a normally functioning brain and auditory system and can be used to segment many simultaneously occurring sounds into perceptually distinct auditory streams. Even a newborn baby can identify her mother’s voice in the presence of many other sounds. To date, DSP and directional microphone systems have failed to duplicate this ability artificially. This is where the difficulty lies in creating a maximally effective directional microphone system. Currently available technology allows for the partial separation of signals originating from spatially distinct locations but cannot yet duplicate streaming of signals based on other physical properties of the sound.

Bregman17 brings to light the complexity of the human auditory system and shows that directionality is only a small part of auditory scene analysis. If a sound processor could duplicate this entire process, an amplification system could likely become a “cure,” rather than an “aid,” by zeroing in on one speaker’s voice and disregarding all other sounds. It is unlikely that such a system will become available in the near future.

In the book and the Oscar-winning movie Black Hawk Down,18,19 there is a pivotal scene where a child is used as a lookout to relay information regarding flight patterns of US Army Black Hawk helicopters. The child passes this information on to the Somalian warlord using only a cellular telephone; no special equipment was used to detect the helicopters.

This remains a common practice in the battlefield today because there is no equipment in existence that can relay more reliable information about sound than a human ear. A civilian, a child no less, with presumably normal hearing can detect a Black Hawk miles before it reaches the lookout point. Such an observer can relay information about where the aircraft appears to be traveling without even seeing it. Undoubtedly, the world’s military forces are trying to devise a detection system that can accomplish this. However, just as with the shortcomings of directional microphone systems, it is unlikely that any type of system can interpret sound information with a complexity similar to that of the human auditory system.

It appears that directional microphones do not benefit hearing aid users as much as we would like because there are too many unanswered questions about how we process complex sound information. In our highly advanced technological society, it is almost shocking that science has failed to produce an amplification system that can allow hearing-impaired listeners to understand speech in background noise with no difficulty whatsoever. If this is the ultimate goal we wish to achieve with hearing instruments, it is clear that we have a lot more work to do.

We certainly do not wish to imply that use of directional microphones in hearing aids is moot, as this is clearly not the case. Although the most effective type of directional strategy to use is not clear, the benefits of using any directional strategy rather than an omnidirectional one are accepted fact.

References

1. Killion MC. Hearing aids: past, present, future: moving toward normal conversations in noise. Br J Audiol. 1997;31(3):141-148.

2. Buerkli-Halevy O. The directional microphone advantage–a review of recent research literature. Phonak Focus. 1986;3.

3. Mudry A, Dodele L. History of the technological development of air conduction hearing aids. J Laryngol Otol. 2000;114(6):418-423.

4. Sung GS, Sung RJ, Angelelli RM. Directional microphone in hearing aids. Effects on speech discrimination in noise. Arch Otolaryngol. 1975;101(5):316-319.

5. Nielsen HB, Ludvigsen C. Effect of hearing aids with directional microphones in different acoustic environments. Scand Audiol. 1978;7(4):217-224.

6. Mueller HG, Grimes A, Erdman S. Subjective ratings of directional amplification. Hear Instrum. 1983;34(2):14-16,47.

7. Gravel JS, Fausel N, Liskow C, Chobot J. Children’s speech recognition in noise using omni-directional and dual-microphone hearing aid technology. Ear Hear. 1999;20(1):1-11.

8. Kuk FK, Kollofski C, Brown S, Melum A, Rosenthal A. Use of a digital hearing aid with directional microphones in school-aged children. J Am Acad Audiol. 1999;10(10):535-548.

9. Yueh B, Souza PE, McDowell JA, et al. Randomized trial of amplification strategies. Arch Otolaryngol Head Neck Surg. 2001;127(10):1197-1204.

10. Bentler R, Palmer C, Mueller HG. Evaluation of a second-order directional microphone hearing aid: I. Speech perception outcomes. J Am Acad Audiol. 2006;17(3):179-189.

11. Kock WE. Directional Hearing Aid Microphone Application Notes. Itasca, Ill: Knowles Electronics. Knowles Technical Bulletin TB-21;1950.

12. Valente M, Fabry DA, Potts LG. Recognition of speech in noise with hearing aids using dual microphones. Phonak Focus. 1995;19.

13. Schweitzer C, Jessee SK. The value proposition of open-fit hearing aids. Hearing Review. 2006;13(10):10-19.

14. Ricketts T, Henry P, Gnewikow D. Full time directional versus user selectable microphone modes in hearing aids. Ear Hear. 2003;24(5):424-439.

15. Bentler RA, Egge JL, Tubbs JL, Dittberner AB, Flamme GA. Quantification of directional benefit across different polar response patterns. J Am Acad Audiol. 2004;15(9):649-659.

16. Kuk F, Keenan D, Lau CC, Ludvigsen C. Performance of a fully adaptive directional microphone to signals presented from various azimuths. J Am Acad Audiol. 2005;16(6):333-347.

17. Bregman AS. Auditory Scene Analysis: The Perceptual Organization of Sound. Cambridge, Mass: MIT Press; 1990.

18. Bowden M. Black Hawk Down: A Story of Modern War. Berkeley, California: Atlantic Monthly Press; 1993.

19. Scott R. Black Hawk Down [Motion Picture]. Revolution Studios; 2001.

Correspondence can be addressed to HR or Ryan Mills at .