Directional microphones are the only technology proven to enhance the signal-to-noise ratio for hearing-impaired individuals.1-3 Any directional microphone system depends on the signal and the noise being spatially separated. The system then assumes that the important signal (eg, speech) arrives from the front while noise arrives from the rear of the listener, where it can be attenuated.

Recent advances have delivered increasingly complex directional systems. For example, it is now relatively common to find hearing aids in which the directional system is both automatic and adaptive. Automatic refers to the ability of the hearing aid to switch by itself between the omni and directional microphone settings (as opposed to manual systems, in which the listener presses a button). Adaptive refers to the shape of the polar plot when the hearing aid is in a directional mode. Adaptive polar plots are continually updated to provide the greatest reduction of noise, so the directional pattern can follow a noise source behind or to the sides of the listener and attenuate it as much as possible. Because the polar pattern adapts to the unique configuration of each sound environment, these systems perform better than systems whose polar response remains fixed to a predetermined pattern.4

While this technology is impressive and has the potential to provide significant benefits to hearing-impaired individuals, it is crucial that these systems switch between the omni and directional modes in accordance with the wishes of the user.5,6 Directional systems are not suitable for all listening situations and have inherent downsides, such as increased microphone noise, susceptibility to wind noise, and audibility issues related to low-frequency roll-off. The system should therefore be designed to ensure that the directional mode is implemented only when it will provide a benefit.

Fortunately, the work of Walden and his colleagues5 provides important information about when directional microphones provide benefits in everyday listening situations. The authors concluded that directional systems are beneficial when background noise is present and speech comes from the front and near the listener. Based on diary recordings, they concluded that situations ideal for directional microphone use account for 31% of the listening day.

Based on this work, it is possible to draw some conclusions about directional microphone benefit. First, directional microphones can provide benefit in many commonly encountered noisy listening situations. Second, the omnidirectional state is the preferred state for a hearing instrument when there is no clear benefit from directionality. Omnidirectional should therefore be preferred unless the directional mode can be shown to make an improvement. This rule is the guiding principle for all automatic directional systems.

Decision-Making in Directional Hearing Instruments

While hearing aid manufacturers label their technology differently, there are essentially two methods of decision making in directional microphone systems. The first makes predictions of best performance based on laboratory measures. The second uses parallel processing, or Artificial Intelligence (AI), to evaluate all of the possible processing options and arrive at the optimal solution.7

Prediction-based systems. A prediction-based system analyzes the environment and compares the results of that analysis with predetermined, laboratory-based decisions about whether directionality is best in that environment. Depending on the product, this analysis can range from a relatively simple estimation of the sound level to a comprehensive analysis of the sound scene.8 For example, a rule-based system could assume that directionality provides a benefit if 1) the input level is above a certain minimum (eg, 50-60 dB SPL), and 2) noise is present.9
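The two-condition rule above can be sketched as a simple function. This is a minimal illustration, not any manufacturer's implementation: the `SceneAnalysis` fields, the noise detector assumed to set `noise_present`, and the 55 dB SPL default threshold (picked from within the 50-60 dB SPL range quoted above) are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class SceneAnalysis:
    """Hypothetical summary of one analysis frame of the input signal."""
    input_level_db_spl: float  # broadband input level in dB SPL
    noise_present: bool        # result of an assumed noise detector


def predict_mode(scene: SceneAnalysis, min_level_db_spl: float = 55.0) -> str:
    """Prediction-based rule: choose directional when the input is loud AND noisy.

    Note what the rule does not check: the direction of the speech. It only
    encodes the laboratory-derived assumption that loud, noisy scenes benefit
    from directionality.
    """
    if scene.input_level_db_spl >= min_level_db_spl and scene.noise_present:
        return "directional"
    return "omni"


if __name__ == "__main__":
    print(predict_mode(SceneAnalysis(70.0, True)))   # directional
    print(predict_mode(SceneAnalysis(45.0, True)))   # omni
    print(predict_mode(SceneAnalysis(70.0, False)))  # omni
```

Because the rule looks only at level and the presence of noise, a loud scene with the talker behind the listener still returns `"directional"`, which is the failure mode examined in the laboratory evaluation below.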

Parallel processing systems. AI does not use laboratory-based predictions. Instead, the hearing aid itself calculates the actual speech-to-noise ratio (SpNR) in each possible microphone mode and implements the mode that provides the highest SpNR. The promised benefit is that the system will switch to a directional mode only when it calculates an improvement in SpNR.
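A minimal sketch of the selection step follows, assuming the device already has, for each candidate mode, an estimate of the speech and noise power at that mode's output. How Syncro actually estimates these quantities is not described here, so the input format, the mode names, and the `min_gain_db` margin are hypothetical. Ties go to omni, mirroring the principle that omnidirectional is preferred unless directionality is shown to help.

```python
import math
from typing import Dict, Tuple


def spnr_db(speech_power: float, noise_power: float) -> float:
    """Speech-to-noise ratio in dB from linear power estimates."""
    return 10.0 * math.log10(speech_power / noise_power)


def select_mode(estimates: Dict[str, Tuple[float, float]],
                min_gain_db: float = 0.0) -> str:
    """Return the mode whose estimated output SpNR is highest.

    `estimates` maps a mode name ("omni", "split", "full") to the
    (speech_power, noise_power) estimated for that mode's processing path.
    A directional mode is chosen only if it beats omni by more than
    `min_gain_db`; otherwise the device stays in omni.
    """
    omni_spnr = spnr_db(*estimates["omni"])
    best_mode, best_spnr = "omni", omni_spnr
    for mode, (speech, noise) in estimates.items():
        value = spnr_db(speech, noise)
        if value > best_spnr and value - omni_spnr > min_gain_db:
            best_mode, best_spnr = mode, value
    return best_mode


if __name__ == "__main__":
    # Toy numbers. Speech from the front: directional paths keep the
    # speech and cut the rear noise.
    front = {"omni": (1.0, 1.0), "split": (1.0, 0.5), "full": (1.0, 0.4)}
    # Speech from behind: directional paths attenuate the talker as well.
    rear = {"omni": (1.0, 1.0), "split": (0.6, 0.7), "full": (0.3, 0.5)}
    print(select_mode(front))  # full
    print(select_mode(rear))   # omni
```

The design choice worth noting is that no scene classification is involved: the decision falls out of comparing measured outcomes, so a talker behind the listener lowers the directional SpNR and the device stays in omni.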

Because these two systems take different philosophical approaches, the question arises of how their actual processing decisions differ. To investigate this, we compared two Oticon Syncro hearing aids: one incorporating AI, and one adapted specifically for this study to mimic prediction-based systems.

Testing the Two Methods

Automatic multiband adaptive directional microphone technology is employed in the Oticon Syncro hearing instrument. The decisions regarding which mode to deploy, and the configurations of the four frequency bands, are determined by measuring the SpNR in all possible configurations and then selecting the mode with the best SpNR.

The hearing instrument also allows selection between two directional modes, Full-directional and Split-directional. Full-directional means that directionality is applied across the full bandwidth of the device, that is, in all frequency regions. In Split-directional, low frequencies stay in an omnidirectional mode, and only frequencies above 1000 Hz employ directionality. Split-directional removes the annoyance of microphone noise and the need to compensate for low-frequency roll-off, and it reduces the effects of wind noise. These benefits allow Split-directional to operate in situations where Full-directional may not be preferred, thus providing the user with more directional benefit. Importantly, this approach results in little loss of directivity in moderate-level sound environments, because directionality is still provided across the frequencies important for speech understanding.
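The difference between the two directional modes can be expressed as a per-band assignment. In this sketch the four band edges are hypothetical (the article does not give Syncro's band boundaries); only the 1000 Hz split point comes from the text.

```python
from typing import List, Tuple

# Hypothetical edges (Hz) of a four-band device; not Syncro's actual bands.
BANDS: List[Tuple[float, float]] = [
    (0.0, 1000.0), (1000.0, 2000.0), (2000.0, 4000.0), (4000.0, 8000.0),
]


def band_modes(mode: str, split_hz: float = 1000.0) -> List[str]:
    """Map a device-level mode to a microphone setting for each band."""
    if mode == "omni":
        return ["omni" for _ in BANDS]
    if mode == "full":
        return ["directional" for _ in BANDS]
    if mode == "split":
        # Bands starting at or above the split frequency go directional;
        # bands below it stay omni to avoid roll-off and mic/wind noise.
        return ["directional" if low >= split_hz else "omni" for low, _ in BANDS]
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    print(band_modes("split"))  # ['omni', 'directional', 'directional', 'directional']
```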

For comparison, a Syncro was adapted so that the AI system was replaced by a system relying on prediction-based benefit. This system used an input-level criterion of 62 dB SPL to determine when to switch between the omnidirectional and full-directional states. Split-directional was not an option, because implementing this unique mode relies on the SpNR calculation available only in AI-based systems.

The same hearing aid platform was used for both systems to ensure that there were no interfering variables, such as transition times or directionality kneepoints, which, while important, are not part of decision making per se. The use of Oticon Syncro also made it possible to view the actual directional state in the Syncro Live section of the Genie fitting software and to turn off the noise management settings.

To make the evaluation robust, the decisions of the modified Syncro were compared with those of four other premium prediction-based systems to confirm that it consistently produced similar decision-making outcomes.

Laboratory Evaluation of the Two Decision-Making Methods

To evaluate the decision making, we first conducted a laboratory evaluation of six different listening situations in which the positions of the speech and noise sources were systematically varied. These were designed to replicate six common situations from the Walden et al5 study in which the decision regarding directional mode was fairly clear-cut.

For each test, the hearing aid was placed on the right ear of a Head and Torso Simulator (HATS) in a soundproof booth with loudspeakers at 0° (front) and 180° (back). The speech material was the running speech of the Dantale-I speech test, and the noise was unmodulated white noise. Except in Condition 3, speech and noise were each presented at 75 dBA (ie, 0 dB SNR) to replicate a difficult listening situation. The current state of the prediction-based and parallel-processing systems was viewed in Syncro Live, which provides a readout of the actual settings.
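As a worked example, assuming the speech and noise are uncorrelated so that their powers add, the combined level at 0 dB SNR is about 3 dB above either source alone:

```latex
L_{\text{total}} = 10\log_{10}\!\left(10^{75/10} + 10^{75/10}\right)
                = 75 + 10\log_{10}2 \approx 78.0\ \text{dBA},
\qquad
\text{SNR} = 75 - 75 = 0\ \text{dB}.
```

Both the 75 dBA per-source level and the roughly 78 dBA combined level lie well above the 62 dB SPL switching criterion of the modified Syncro, so a level-only rule would be expected to choose directionality in these conditions regardless of where the talker is. This comparison ignores the difference between A-weighted and unweighted levels and any head-related effects at the microphone.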

Based on general logic and the results from Walden et al,5 we would expect directionality to be appropriate in Conditions 3 and 4, when speech was present from the front and noise was from behind. In the remaining conditions, directionality would neither be needed (Condition 1) nor effective (Conditions 2, 5, and 6). As concluded by Walden et al,5 when directionality does not improve the SpNR, patients prefer an omni-directional setting.

TABLE 1. Summary of the six listening conditions comparing the directional decisions of the prediction-based and AI-based (parallel processing) directionality systems. The shaded area indicates when the decisions were in accordance with the preference as provided by Walden et al.5

The directionality decisions for Oticon Syncro are presented in Table 1. Interestingly, Syncro chose Split-directional in Condition 3, whereas Full-directional was selected in Condition 4. The likely reason for this decision is the signal level. At moderate signal levels, Syncro chooses Split-directional to provide both the benefits of directionality in the mid and high frequencies and the sound-quality benefits of omnidirectionality in the low frequencies. Because the noise level was higher in Condition 4 than in Condition 3, the overall level was higher, which led to the change to Full-directional mode. For Conditions 5 and 6, the AI analysis reported that no benefit would be obtained by switching to a directional mode, so Syncro remained in omnidirectional mode. This experiment therefore demonstrates how the parallel-processing system of Syncro makes decisions and shows that these decisions match the needs of the listener.

When the system was in prediction-based mode, however, various errors were observed. Lacking an SpNR analysis to distinguish front from back, the system mistook a high-level situation with the signal from the rear for one in which directionality was beneficial. Thus, for Conditions 2 and 6, where speech was from the rear, the directional microphone was selected, decreasing speech understanding. Fortunately, these situations occur only 13.1% of the time, but they do represent situations such as being at a cocktail party, or children in a classroom listening to a question from a child seated behind them. When using prediction-based technology, it is therefore crucial to evaluate how the hearing instrument reacts to important signals originating from behind in difficult listening situations.

This work is consistent with previous independent reports demonstrating that prediction-based systems are liable to make decision-making errors10 and that, in certain situations, users need to override the automatic settings. These errors arise because the best microphone response for everyday listening environments cannot be predicted in the laboratory. Increasingly, prediction-based systems use additional measures of the input signal, such as its modulation characteristics and spectral content, to control the selection of directional mode. Yet, despite the inclusion of more parameters, these systems are still predicting potential benefit rather than actually knowing the final SpNR. As a result, prediction-based systems are liable to be in directional mode either too often or too little of the time.

Evaluation of Decisions in the Real World

Given the capability of today’s hearing instruments to record the performance of the hearing aid in the user’s actual listening environments,11,12 it was instructive to create an Envirogram over the listening day of one individual. An Envirogram is a recording of the actual sound levels with additional information about the workings of the automatic systems (in this case directionality).12

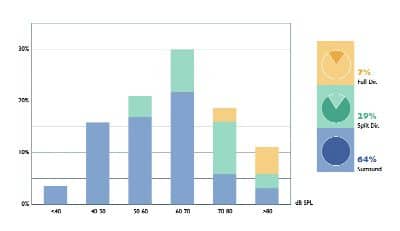

FIGURE 1. Envirogram from AI-based decisions indicating the directional mode used in different listening situations. The AI-driven selections between full-directional, split-directional, and surround (omni) modes can be easily observed.

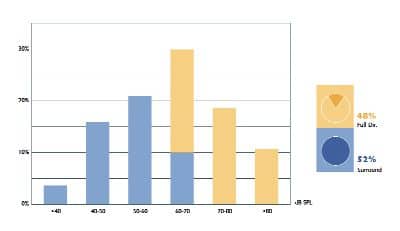

The Envirogram data (Figures 1 and 2) show that the AI-based system was in a directional mode a total of 36% of the time, compared with 48% of the time for the prediction-based system. An AI-based system allows directionality to be applied judiciously, only when it provides a quantifiable benefit in speech understanding. This difference is most visible at the higher input levels. There, the prediction-based system is always in directional mode, because it assumes that, if the sound scene is loud and complex, directionality will most likely provide a benefit. The AI-based system remains free to select among the three microphone modes in any listening situation and to choose the one that provides the best SpNR. When no SpNR benefit can be obtained, the hearing aid stays in the surround, or omnidirectional, mode.
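The percentages above are time fractions over the logged day. A minimal sketch of that calculation, assuming a hypothetical log of `(duration_minutes, mode)` entries; the actual Envirogram data format is not described here, and the toy log is invented for illustration only.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

DIRECTIONAL_MODES = ("split", "full")


def mode_fractions(log: Iterable[Tuple[float, str]]) -> Dict[str, float]:
    """Fraction of total logged time spent in each microphone mode."""
    totals: Dict[str, float] = defaultdict(float)
    for duration, mode in log:
        totals[mode] += duration
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {mode: t / grand_total for mode, t in totals.items()}


def directional_summary(log: Iterable[Tuple[float, str]]) -> Tuple[float, float]:
    """Return (share of the day in any directional mode,
    share of directional time spent in split-directional)."""
    fractions = mode_fractions(log)
    directional = sum(fractions.get(m, 0.0) for m in DIRECTIONAL_MODES)
    split_share = fractions.get("split", 0.0) / directional if directional else 0.0
    return directional, split_share


if __name__ == "__main__":
    # Invented toy log: 30 min omni, 15 min split, 5 min full.
    toy_log = [(30.0, "omni"), (15.0, "split"), (5.0, "full")]
    directional, split_share = directional_summary(toy_log)
    print(f"directional: {directional:.0%}, split share: {split_share:.0%}")
    # directional: 40%, split share: 75%
```

Weighting by duration rather than counting log entries keeps the result correct even if the logger records at irregular intervals.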

FIGURE 2. Envirogram from prediction-based decision making indicating the implementation of full-directional in louder listening situations.

Unique to AI-based systems is the use of the Split-directional mode. To recap, split-directionality combines directionality in the mid and high frequencies, to improve speech information, with an omnidirectional setting below 1000 Hz, to preserve sound quality and audibility. Prediction-based systems could, hypothetically, include more parameters to make split-directionality possible. The problem with this approach is that it complicates an inherently inefficient approach to device decision making. What matters is not the complexity of the model, but ensuring that the directional mode is selected through a knowledge-based approach, one that chooses the mode providing the best possible speech understanding in any communication situation.

The benefit of Split-directional mode to the patient can be seen in the fact that it was chosen 80% of the time when the hearing aid selected directionality. This is consistent with previous findings that split-directional causes little loss of information while preserving good sound quality.14 Therefore, in most complex situations, split-directional provides the same benefit as the full-directional microphone. In the remaining 20% of directional use, which corresponds to the most complex listening environments, the full-directional mode is engaged to further improve speech understanding.
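Combining this with the Envirogram figures gives a rough breakdown of the logged day, assuming the 80%/20% split refers to the AI-based system's 36% of directional time:

```latex
0.80 \times 36\% = 28.8\%\ \text{(split-directional)}, \qquad
0.20 \times 36\% = 7.2\%\ \text{(full-directional)}, \qquad
100\% - 36\% = 64\%\ \text{(surround/omni)}.
```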

Summary

A key aspect to understanding directional microphone technology is knowledge about the underlying decision-making process. Studies such as those conducted by Walden and colleagues5 present compelling evidence that people prefer different microphone settings in different communication environments and that these preferences cannot be simplified to whether or not noise is present in the signal.

Similarly, it is crucial that hearing instrument users benefit from a system that can correctly judge whether the signal of interest is coming from the front or the back. By evaluating the resulting SpNR, AI-based systems can correctly judge whether the directional system is taking away the speech signal. This allows the system to quickly switch to the surround (omni) mode when speech is from behind, such as when a friend is trying to get your attention at a cocktail party. This ability to ensure that no speech is removed is also crucial in deciding when to use directional microphone systems for children. For instance, the front-vs-back detection ensures that questions and answers from children behind the listener in a classroom will not be needlessly attenuated.

Parallel processing is implemented in the Oticon Syncro to overcome some of the limitations observed in predictive approaches. AI uses a confirmation-based approach to control the selection of directional modes. This approach is based not only on input level but, more importantly, on which of the three available modes actually provides the best SpNR. This analysis is designed to ensure that, at any moment, the device provides the signal with the best SpNR while protecting against excessive wind noise and reduced sound quality, and while avoiding directionality when the predominant speech signal is coming from behind.

Directionality is one area where Artificial Intelligence provides a quantifiable improvement in decision making. Because it rests on a direct assessment of the optimal SpNR, the use of AI and the ability to process multiple streams of information in parallel is designed to provide a more accurate and simpler method of selecting the directionality mode than trying to categorize listening environments into a limited set of sound scenes.

This article was submitted to HR by Mark C. Flynn, PhD, director of product concept definition at Oticon A/S, Smørum, Denmark. Correspondence can be addressed to HR or Mark C. Flynn, PhD, Oticon A/S, Kongebakken 9, Smørum, DK 2740, Denmark.

References

1. Bentler RA. Effectiveness of directional microphones and noise reduction schemes in hearing aids: A systematic review of the evidence. J Am Acad Audiol. 2005;16(7):473-84.

2. Walden BE, Surr RK, Cord MT. Real-world performance of directional microphone hearing aids. Hear Jour. 2003;56(11):40-7.

3. Flynn MC. Maintaining the directional advantage in open fittings. The Hearing Review. 2004;11(11):32-6.

4. Ricketts T, Henry P. Evaluation of an adaptive, directional-microphone hearing aid. Int J Audiol. 2002;41(2):100-12.

5. Walden BE, Surr RK, Cord MT, Dyrlund O. Predicting hearing aid microphone preference in everyday listening. J Am Acad Audiol. 2004;15(5):365-96.

6. Ricketts T, Henry P, Gnewikow D. Full time directional versus user selectable microphone modes in hearing aids. Ear Hear. 2003;24(5):424-39.

7. Flynn MC. Maximizing the Voice-to-Noise ratio (VNR) via Voice Priority Processing. The Hearing Review. 2004;11(4):54-9.

8. Chung K. Challenges and recent developments in hearing aids. Part I. Speech understanding in noise, microphone technologies and noise reduction algorithms. Trends Amplif. 2004;8(3):83-124.

9. Kuk F, Keenan D, Lau CC, Ludvigsen C. Performance of a fully adaptive directional microphone to signals presented from various azimuths. J Am Acad Audiol. 2005;16(6):333-47.

10. Gabriel B. Study measures user benefit of two modern hearing aid features. Hear Jour. 2002;55(5):46-50.

11. Fabry DA, Tchorz J. Results from a new hearing aid using "acoustic scene analysis". Hear Jour. 2005;58(4):30-6.

12. Flynn MC. Envirograms: Bringing greater utility to datalogging. The Hearing Review. 2005;12(11):32-28.

13. Flynn MC. Datalogging: A new paradigm in the hearing instrument fitting process. The Hearing Review. 2005;12(3):52-7.

14. Flynn MC, Lunner T. Clinical evidence for the benefits of Oticon Syncro. News from Oticon. 2004;November:1-10.